‘Flattening the curve’ isn’t just about dealing with viruses, we learn from Will Law. Rather, this is one way to deal with network congestion brought on by the rise in broadband use during the global lockdown. This and other key ways such as per-title encoding and removing the top tier are just two other which are explored in this video from Akamai and Bitmovin.

Will Law starts the talk explaining why congestion happens in a world where ABR (adaptive bitrate streaming) is supposed to deal with this. With Akamai’s traffic up by around 300%, it’s perhaps not a surprise there’s a contest for bandwidth. As not all traffic is a video stream, congestion will still happen when fighting with other, static, data transfers. However deeper than that, even with two ABR streams, the congestion protocol in use has a big impact as will shows with a graph showing Akamai’s FastTCP and BBR where BBR steals all the bandwidth rather than ‘playing fair’.

Using a webpage constructed for the video, Will shows us a baseline video playback and the metrics associated with it such as data transferred and bitrate which he uses to demonstrate the different benefits of bitrate production techniques. The first is covered by Bitmovin’s Sean McCarthy who explains Bitmovin’s per-title encoding technology. This approach ensures that each asset has encoder settings tuned to get the best out of the content whilst reducing bandwidth as opposed to simply setting your encoder to a fairly-high, safe, static bitrate for all content no matter how complex it is. Will shows on the demo that the bitrate reduces by over 50%.

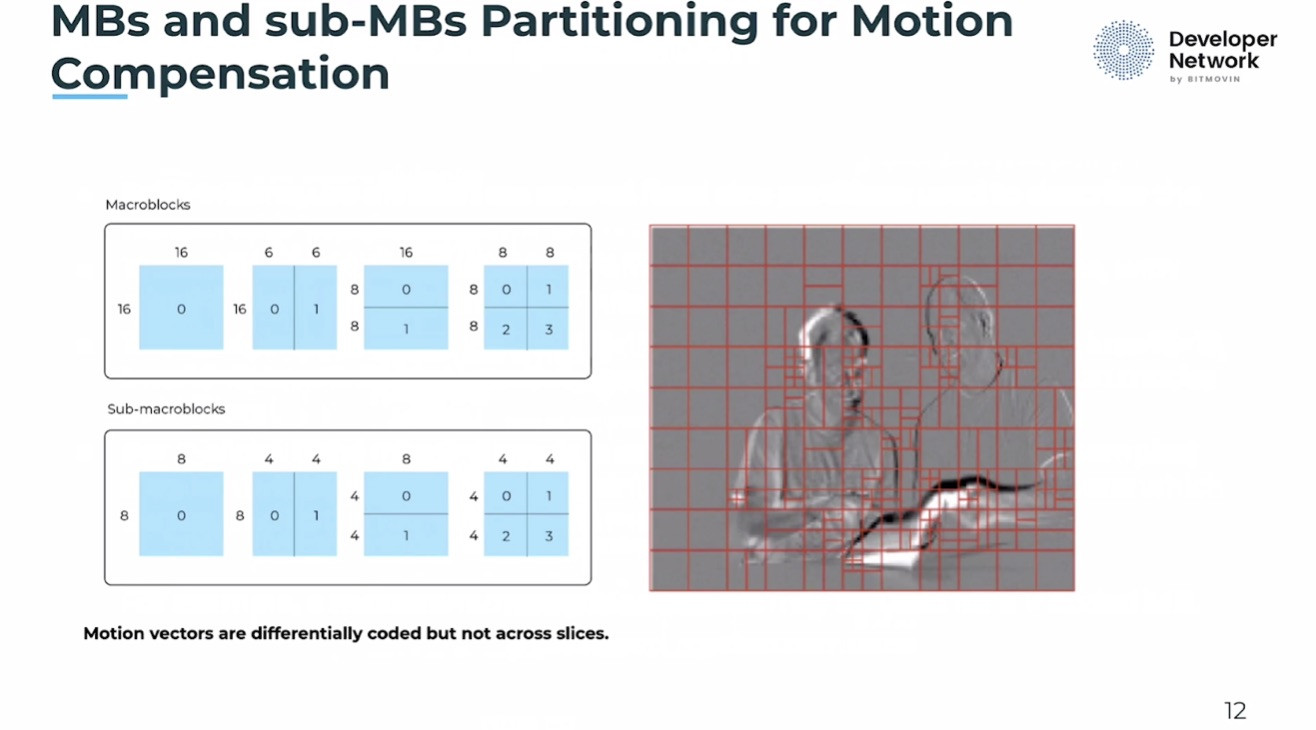

Swapping codecs is an obvious way to reduce bandwidth. Unlike per-title encoding which is transparent to the end-user, using AV1, VP9 or HEVC requires support by the final device. Whilst you could offer multiple versions of your assets to make sure you still cover all your players despite fragmentation, this has the downside of extra encoding costs and time.

Will then looks at three ways to reduce bandwidth by stopping the highest-bitrate rendition from being used. Method one is to manually modify the manifest file. Method two demonstrates how to do so using the Bitmovin player API, and method three uses the CDN itself to manipulate the manifests. The advantage of doing this in the CDN is because this allows much more flexibility as you can use geolocation rules, for example, to deliver different manifests to different locations.

The final method to reduce peak bandwidth is to use the CDN to throttle download speed of the stream chunks. This means that while you may – if you are lucky – have the ability to download at 100Mbps, the CDN only delivers 3- or 5-times the real-time bitrate. This goes a long way to smoothing out the peaks which is better for the end user’s equipment and for the CDN. Seen in isolation, this does very little, as the video bitrate and the data transferred remain the same. However, delivering the video in this much more co-operative way is much more likely to cause knock-on problems for other traffic. It can, of course, be used in conjunction with the other techniques. The video concludes with a Q&A.

Watch now!

Speakers

|

Will Law Chief Architect, Akamai |

|

Sean McCarthy Technical Product Marketing Manager, Bitmovin |