If you were handed a video signal, would you know what type it was? Life used to be simple: an SD signal would decode in a waveform monitor and you’d see which type it was. Now, with UHD and HDR, that no longer gives you all the information you need. Arguably, identifying a signal gets easier with IP, which is possibly one of the few things that does. This video from AIMS helps to clear up why IP’s the best choice for UHD and HDR.

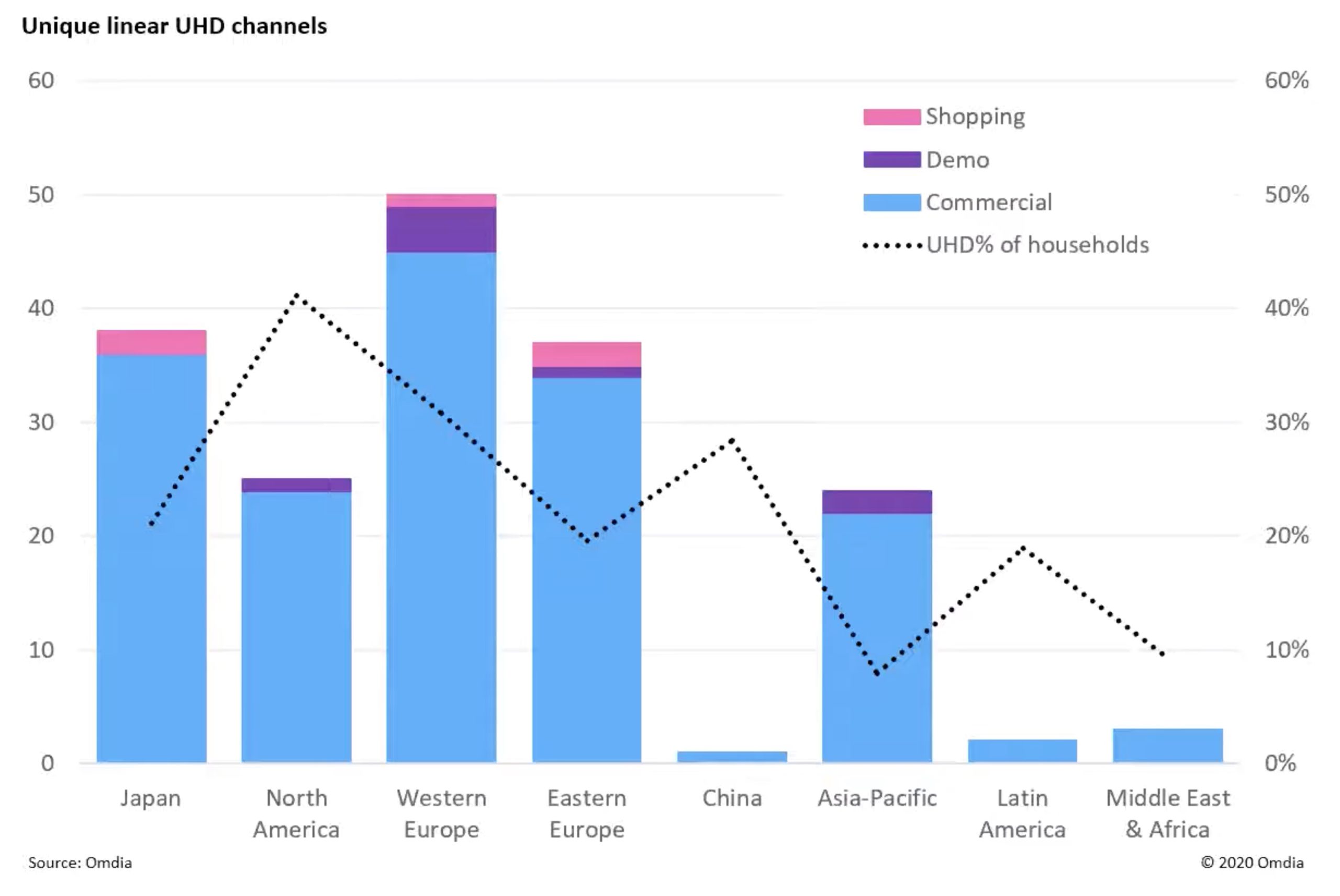

John Mailhot from Imagine Communications joins Wes Simpson from LearnIPVideo.com to introduce us to the difficulties of wrangling UHD and HDR video. In-home displays keep getting better at showing brighter pictures and broadcast cameras can capture much more dynamic range, so John’s work at Imagine is focussed on helping broadcasters ensure their infrastructure can deliver these high dynamic range experiences. Streaming services have a slightly easier time delivering HDR to end-users as they are in complete control of the distribution chain, whereas in broadcast, particularly with affiliates, there are often many points in the chain which need to be HDR/UHD capable.

John starts by looking at how UHD was implemented in the early stages. UHD, being twice the horizontal and twice the vertical resolution of HD, is usually seen as 4xHD. John points out that this is true for resolution but, as most HD is 1080i, UHD also represents a move to 1080p and therefore to 3Gbps signals. His point is that this puts a strain on infrastructure which was not necessarily tested for 3G in the first place. And because the UHD signal was initially carried on four cables, there is four times the chance of a signal impairment due to cabling.
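
The arithmetic behind that point can be sketched in a few lines. This is only a rough illustration that counts active pixels per second at a nominal 60Hz; the function name is made up for this example and blanking, bit depth and chroma sampling are ignored.

```python
def pixels_per_second(width, height, frames_per_second):
    """Active pixels delivered each second for a raster and frame rate."""
    return width * height * frames_per_second

# 1080i at 60 fields/s delivers 30 complete frames per second.
hd_1080i = pixels_per_second(1920, 1080, 30)
# 1080p60 doubles that, which is why each UHD quadrant needs a 3G link.
hd_1080p = pixels_per_second(1920, 1080, 60)
# UHD at 60 frames/s.
uhd_2160p = pixels_per_second(3840, 2160, 60)

print(hd_1080p // hd_1080i)   # 2: interlaced HD to progressive HD
print(uhd_2160p // hd_1080p)  # 4: the familiar "4xHD"
print(uhd_2160p // hd_1080i)  # 8: the jump most HD plants actually face
```

So a plant built around 1080i is being asked to move eight times the picture data, not four.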

Square Division Multiplexing (SQD) is the ‘most obvious’ way to carry UHD signals with existing HD infrastructure. The picture is simply cut into four quarters and each quarter is sent down one cable. The benefit here is that it’s easy to see the order in which the cables need to be connected to the equipment. The downsides include a frame-buffer delay (half a frame) each time the signal is received, and the difficulty of preventing the quadrants drifting apart if the infrastructure treats them differently (i.e. there is a non-synced hand-off). One important problem is that there is no way to know whether an HD feed is one of a UHD set or just a lone 3G signal.
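
As a minimal sketch of the idea, the split and the reassembly can be written as below. The frame is modelled as a plain list of rows, the function names are hypothetical, and the link order (top-left, top-right, bottom-left, bottom-right) is an assumption made for illustration.

```python
def split_square_division(frame):
    """Cut a frame (a list of rows) into four quadrants, one per link.

    Assumed link order: top-left, top-right, bottom-left, bottom-right.
    """
    half_h = len(frame) // 2
    half_w = len(frame[0]) // 2
    top, bottom = frame[:half_h], frame[half_h:]
    return [
        [row[:half_w] for row in top],
        [row[half_w:] for row in top],
        [row[:half_w] for row in bottom],
        [row[half_w:] for row in bottom],
    ]


def join_square_division(quads):
    """Rebuild the full frame from four quadrants in link order."""
    tl, tr, bl, br = quads
    top = [left + right for left, right in zip(tl, tr)]
    bottom = [left + right for left, right in zip(bl, br)]
    return top + bottom


# A tiny 4x4 "frame" in which every pixel records its own (x, y) position.
frame = [[(x, y) for x in range(4)] for y in range(4)]
quads = split_square_division(frame)
print(quads[0])  # top-left quadrant only

# Correct cabling restores the picture; swapping links 1 and 2 does not.
print(join_square_division(quads) == frame)
swapped = [quads[1], quads[0], quads[2], quads[3]]
print(join_square_division(swapped) == frame)
```

Each quadrant is a recognisable piece of the picture, which is why mis-cabling is easy to spot with this method. It is also why a receiver has to buffer: all four quadrants arrive in parallel, but the bottom two are only needed once the top half of the picture has been output.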

2SI, two-sample interleave, was another method of splitting up the signal, and was standardised by SMPTE. It works by taking a pair of samples and sending them down cable 1, then the next pair down cable 2; the pair of samples directly under the first pair goes down cable 3 and the pair under the second goes down cable 4. This has the happy benefit that each cable holds a complete picture, albeit very crudely downsampled. For monitoring applications this is useful, as you can DA one feed and send it to a monitor. At least, that would have been possible were it not for the requirement that each signal stay within 400ns of the others, which meant DAs often broke the timing budget if they reclocked. 2SI did, however, remove the half-frame latency burden which SQD carries. The main confounding factor in this mechanism is that looking at the video from any one cable on a monitor isn’t enough to tell you which of the four feeds you are looking at. Mis-cabling equipment leads to subtle visual errors which are hard to spot and hard to correct.
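
The sample-to-cable mapping described above can be sketched as follows. This is a simplified model that treats the frame as a list of rows and follows the order given in the text; the function name is hypothetical and the real standard defines the mapping in much more detail.

```python
def two_sample_interleave(frame):
    """Distribute a frame (list of rows) over four links in 2SI fashion.

    Even rows alternate sample pairs between links 1 and 2;
    odd rows alternate sample pairs between links 3 and 4.
    Returns four quarter-size images, index 0 being link 1.
    """
    links = [[], [], [], []]
    for y, row in enumerate(frame):
        first, second = [], []
        for x in range(0, len(row), 2):
            pair = row[x:x + 2]
            # Pairs 0, 2, 4... go to the first link of this row, the rest to the second.
            (first if (x // 2) % 2 == 0 else second).extend(pair)
        base = 2 * (y % 2)
        links[base].append(first)
        links[base + 1].append(second)
    return links


# An 8x4 "frame" in which every pixel records its own (x, y) position.
frame = [[(x, y) for x in range(8)] for y in range(4)]
links = two_sample_interleave(frame)
for number, image in enumerate(links, start=1):
    print(number, image[0])  # first line carried on each link
```

Every link ends up with samples drawn from across the whole raster, so each one looks like a small version of the full picture. That is exactly why a swapped pair of cables is hard to see by eye.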

Enter the VPID, the Video Payload ID. SD SDI didn’t require this and HD often had it, but for UHD it became essential. SMPTE ST 425-5:2019 is the latest document explaining payload ID for UHD. As it’s version five, you should be aware that older equipment may not parse the information correctly, either because of a bug or because it was built to an older revision of the standard. The VPID carries information such as interlaced/progressive, aspect ratio, transfer characteristics (HLG, SDR etc.) and frame rate. John talks through some of the common mismatches in interpretation and implementation of VPID.
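
To give a feel for what parsing a payload ID involves, here is a hedged sketch that decodes just one of its four bytes. The bit positions and code values are an assumption based on the commonly documented SMPTE ST 352-style layout for the second byte and should be checked against the standard itself. The function and table names are made up for this example.

```python
# Assumed code tables for byte 2 of the payload ID (verify against the standard).
PICTURE_RATES = {
    0x2: "24/1.001", 0x3: "24", 0x5: "25", 0x6: "30/1.001",
    0x7: "30", 0x9: "50", 0xA: "60/1.001", 0xB: "60",
}
TRANSFER_CHARACTERISTICS = {0: "SDR", 1: "HLG", 2: "PQ", 3: "unspecified"}


def decode_vpid_byte2(byte2):
    """Decode the scanning, transfer characteristic and rate fields of byte 2."""
    return {
        # Assumed: bit 7 flags progressive transport, bit 6 a progressive picture.
        "transport_progressive": bool(byte2 & 0x80),
        "picture_progressive": bool(byte2 & 0x40),
        # Assumed: bits 5-4 carry the transfer characteristics.
        "transfer_characteristics": TRANSFER_CHARACTERISTICS[(byte2 >> 4) & 0x3],
        # Assumed: bits 3-0 carry the picture rate code.
        "picture_rate": PICTURE_RATES.get(byte2 & 0x0F, "reserved/unknown"),
    }


# Hypothetical example value: progressive, HLG, 50 frames per second.
info = decode_vpid_byte2(0xD9)
print(info)
```

A decoder written before the transfer characteristics bits were assigned would treat them as reserved and silently drop the HLG flag, which is the kind of version mismatch described above.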

12G is the obvious baseband solution to the four-wires problem of UHD. Nowadays a 12G transceiver costs only slightly more than a 3G one, so 12G is a very reasonable solution for many. It does require careful cabling to ensure the cable is in good condition and not too long. For OB trucks and small projects, 12G can work well. For larger installations, optical connections are needed, with one signal per fibre.

The move to IP initially went to SMPTE ST 2022-6, which is a mapping of SDI onto IP. This meant it was still quite restrictive as we were still living within the SDI-described world. 12G was difficult to do. Getting four IP streams correctly aligned, and all switched on time, was also impractical. For UHD, therefore, SMPTE ST 2110 is the natural home. 2110 can support 32K, so UHD fits in well. ST 2110-22 allows use of JPEG XS, so if the 9-11Gbps bitrate of UHDp50/60 is too much it can be squeezed down to 1.5Gbps with almost no latency. Being carried as a single video flow removes any switch timing problems and, as 2110 doesn’t use VPID, there is much more flexibility to fully describe the signal, allowing for future growth. We don’t know what’s to come, but whether it’s different shapes of video raster, new colour spaces or extensions needed for IPMX, these are all possible.
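
In 2110 the signal description travels as text in an SDP file rather than as bits in a VPID. The sketch below parses an illustrative `a=fmtp` line of the kind used for ST 2110-20 video and estimates the active video bitrate from it. The example line and the helper names are assumptions made for this illustration, and the calculation ignores RTP, UDP and IP overhead.

```python
from fractions import Fraction

# Illustrative SDP format line for a UHD HLG flow (not taken from the talk).
EXAMPLE_FMTP = (
    "a=fmtp:96 sampling=YCbCr-4:2:2; width=3840; height=2160; "
    "exactframerate=60000/1001; depth=10; TCS=HLG; colorimetry=BT2020; "
    "PM=2110GPM; SSN=ST2110-20:2017; TP=2110TPN"
)

# Average samples per pixel for the sampling modes handled in this sketch.
SAMPLES_PER_PIXEL = {"YCbCr-4:2:2": 2, "YCbCr-4:4:4": 3, "RGB": 3}


def parse_fmtp(line):
    """Turn 'a=fmtp:<pt> key=value; key=value' into a dictionary of strings."""
    _, _, params = line.partition(" ")
    result = {}
    for item in params.split(";"):
        item = item.strip()
        if item:
            key, _, value = item.partition("=")
            result[key] = value
    return result


def active_video_gbps(params):
    """Estimate the active picture bitrate in Gbps, excluding packet overhead."""
    frame_rate = Fraction(params["exactframerate"])
    bits_per_pixel = SAMPLES_PER_PIXEL[params["sampling"]] * int(params["depth"])
    bits = int(params["width"]) * int(params["height"]) * bits_per_pixel * frame_rate
    return float(bits) / 1e9


params = parse_fmtp(EXAMPLE_FMTP)
gbps = active_video_gbps(params)
print(params["TCS"], params["colorimetry"])
print(round(gbps, 2))        # a little under 10 Gbps before overhead
print(round(gbps / 1.5, 1))  # compression ratio needed to reach 1.5 Gbps
```

Because the description is a list of named parameters, adding a new raster size or transfer characteristic means adding or changing a value, not redefining a fixed bit layout.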

John finishes his conversation with Wes by mentioning two big benefits of moving to IT-based infrastructure. One is the ability to use the free Wireshark or EBU LIST tools to analyse video. Whilst there are still good reasons to buy test equipment, the fact that many checks can be done without expensive equipment like waveform monitors is good news. The second big benefit is that whilst these standards were being made, the available network infrastructure has moved from 25 to 100 to 400Gbps links, with 800Gbps coming in the next year or two. None of these changes has required any change in the standards, unlike with SDI where each improvement in the signal required a new baseband interface. Rather, the industry is able to take advantage of this new infrastructure with no effort on our part to develop it or modify the standards.
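
Part of the reason free tools can do this is that every 2110 flow is carried in ordinary RTP packets, whose fixed 12-byte header is simple to read. The following is a minimal sketch of that header parse using only the standard library. It is not Wireshark’s or EBU LIST’s API, and the sample packet is fabricated for the example.

```python
import struct


def parse_rtp_header(packet):
    """Parse the fixed 12-byte RTP header (RFC 3550) from raw packet bytes."""
    if len(packet) < 12:
        raise ValueError("an RTP header needs at least 12 bytes")
    b0, b1, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": b0 >> 6,
        "padding": bool(b0 & 0x20),
        "extension": bool(b0 & 0x10),
        "csrc_count": b0 & 0x0F,
        "marker": bool(b1 & 0x80),
        "payload_type": b1 & 0x7F,
        "sequence_number": sequence,
        "timestamp": timestamp,
        "ssrc": ssrc,
    }


# Fabricated header: version 2, marker set, dynamic payload type 96.
sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 1234, 90000, 0xDEADBEEF)
header = parse_rtp_header(sample)
print(header["version"], header["payload_type"], header["marker"])
print(header["sequence_number"], header["timestamp"])
```

Checks such as spotting gaps in the sequence number or watching how the timestamp advances from frame to frame build on exactly these fields.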

Watch now!

Speakers

John Mailhot

Systems Architect, IP Convergence, Imagine Communications

Wes Simpson

RIST AG Co-Chair, VSF

President & Founder, LearnIPvideo.com