Advertising has been the mainstay of TV for many years. Like it or loathe it, ad-supported VoD (AVoD) delivers free-to-watch services that, just like ad-supported broadcast TV, open up content to a much wider range of people than would otherwise be possible. Even people who can afford subscriptions have a limit to the number of services they will subscribe to. Having an AVoD offering means you can draw people in and, if you also have SVoD, there's a path to convince them to sign up.

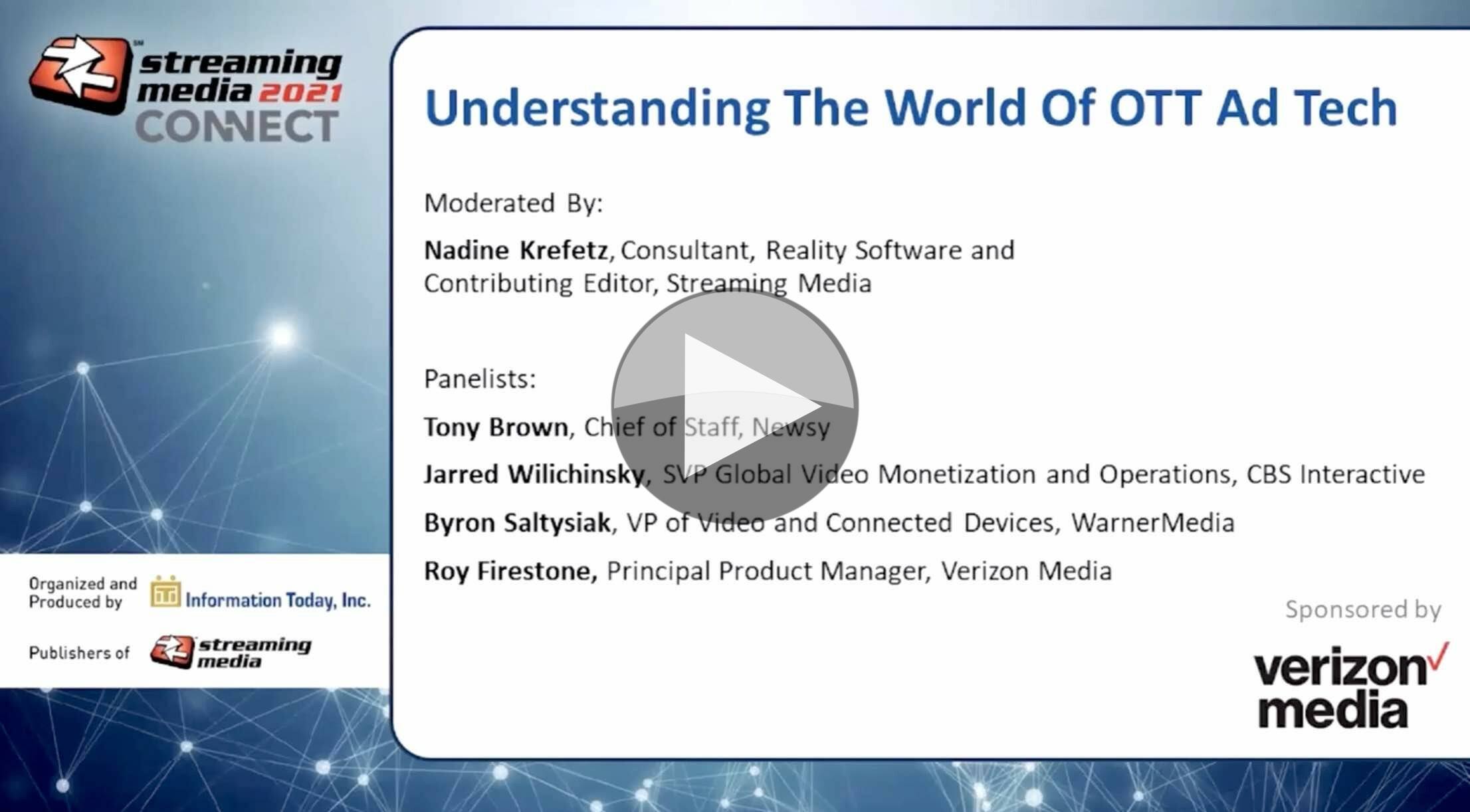

To look at where ad tech is today and what problems still exist, Streaming Media contributing editor Nadine Krefetz has brought together Byron Saltysiak from WarnerMedia, Verizon Media's Roy Firestone, CBS Interactive's Jarred Wilichinsky and Newsy's Tony Brown to share their daily experience of working with OTT ad tech.

Nadine is quick to ask the panel what they feel the weakest link in ad tech is. 'Scaling up,' answered Jarred, who has seen at massive events how quickly parts of the ad ecosystem fail when millions of people need an ad break at the same time. Byron adds that the demise of Flash took away an abstraction layer. Previously, as long as you got Flash right, it would work on all platforms; now each platform has to be targeted directly, which adds a lot of complexity. Lastly, redundancy came up as a weakness. Linked to Jarred's point about the difficulty of scaling, the panel's consensus is that they are far from broadcast's five-nines uptime targets. In some ways this is to be expected: IT is a more fragmented, faster-moving market than consumer TVs, which makes it all the harder to keep up with changing patterns.

Several parts of the conversation centred on ad tech as an ecosystem, which is both a benefit and a drawback. Working in an ecosystem means that, however much a streaming provider invests in bolstering its own service to cope with millions upon millions of requests, it simply can't control what the rest of the ecosystem does. If 2 million people all go to a break at once, it doesn't take much for an ad provider's servers to collapse under the weight. On the other hand, Byron points out, the same ecosystem gives streaming an advantage of scale that broadcasters don't have. Roy's service delivered one hundred thousand matches last year, and Byron asks how many linear channels you'd need to cover that many.

Speed is a problem, given that the ad auction needs to happen in the twenty seconds or so leading up to the ad being shown to the viewer. With so many players involved, things can go wrong, starting simply with slow responses to requests. Ad lengths cause trouble too. Ad breaks are built around 15-second segments, so it's difficult when companies want 6- or 11-second ads, and it's particularly bad when five 6-second ads are scheduled for one break: "no-one wants to see that."
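To make the break-length arithmetic concrete, here is a minimal Python sketch, assuming a 30-second break and a hypothetical helper `best_fill`, that finds the combination of available ad lengths filling the most of the break without overrunning it. It is an illustration only, not how any of the panellists' ad servers actually assemble breaks.

```python
from itertools import combinations


def best_fill(break_seconds, ad_lengths):
    """Hypothetical helper: return (filled_seconds, chosen_ads) for the
    combination of ads that fills the most of the break without overrunning.

    Brute force over every combination, which is fine for the handful of
    creatives competing for a single break.
    """
    best = (0, ())
    for r in range(1, len(ad_lengths) + 1):
        for combo in combinations(ad_lengths, r):
            total = sum(combo)
            # Keep the combination only if it fits and beats the best so far.
            if total <= break_seconds and total > best[0]:
                best = (total, combo)
    return best


if __name__ == "__main__":
    print(best_fill(30, [15, 15]))        # two standard spots
    print(best_fill(30, [11, 11, 6]))     # odd lengths leave a gap
    print(best_fill(30, [6, 6, 6, 6, 6])) # fills, but with five ads
```

Two 15-second spots fill the break exactly; 11-, 11- and 6-second creatives top out at 28 seconds, leaving 2 seconds to cover with slate; and five 6-second ads do fill 30 seconds, but only by creating the kind of break the panel says no-one wants to see.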

Jarred laments that, despite the standards and guidelines available, "it's still the wild west" when it comes to ad quality and loudness, and viewers are the ones bearing the brunt of these mismatched practices.

Nadine asks about privacy regulations, which increasingly limit the access advertisers have to viewer data. Byron points out that they still need some way to identify a user so that they avoid showing them the same ad over and over. Under some regulations, registered or subscribed users can be tracked, so there is a big push to get people to sign up.
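As a rough illustration of why an identifier matters, the sketch below implements a simple per-user frequency cap in Python. The class `FrequencyCapper`, its `max_impressions` limit and the in-memory counter are all hypothetical; production ad servers typically apply caps over time windows and keep the counts in shared storage.

```python
from collections import defaultdict


class FrequencyCapper:
    """Hypothetical per-user frequency cap: stop serving an ad to a user
    once they have seen it max_impressions times. Counts live in memory
    only and never expire, which a real system would not do."""

    def __init__(self, max_impressions):
        self.max_impressions = max_impressions
        # (user_id, ad_id) -> number of impressions served so far
        self._seen = defaultdict(int)

    def can_serve(self, user_id, ad_id):
        return self._seen[(user_id, ad_id)] < self.max_impressions

    def record_impression(self, user_id, ad_id):
        self._seen[(user_id, ad_id)] += 1

    def pick_ad(self, user_id, candidates):
        """Serve the first candidate the user hasn't hit the cap on,
        or None if every candidate is capped."""
        for ad_id in candidates:
            if self.can_serve(user_id, ad_id):
                self.record_impression(user_id, ad_id)
                return ad_id
        return None


if __name__ == "__main__":
    capper = FrequencyCapper(max_impressions=2)
    for _ in range(5):
        print(capper.pick_ad("user-1", ["car-ad", "soda-ad"]))
```

The cap only works because every request carries the same `user_id`. Without a stable identifier, each anonymous session starts with a fresh count and the same ad can be served again and again, which is the repetition problem Byron describes.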

Other questions covered by the panel include QA processes, the need for more automation in QA, how to go about starting your own service, dealing with Roku boxes, and handling downloaded AVoD files, which need to update the ad servers about which ads were watched once the device is back online.

Watch now!

Speakers

| Speaker | Role |
| --- | --- |
| Tony Brown | Chief of Staff, Newsy |
| Jarred Wilichinsky | SVP Global Video Monetization and Operations, CBS Interactive |
| Byron Saltysiak | VP of Video and Connected Devices, WarnerMedia |
| Roy Firestone | Principal Product Manager, Verizon Media |
| Nadine Krefetz | Contributing Editor, Streaming Media |