IPMX is bringing a standards-based, software-deployable connectivity solution to ProAV. It stands in contrast to hardware-based IP technologies such as Zeevee and SDVoE, which both aim to create de facto standards by building large alliances of companies based on their chips. IPMX’s aim is to open up the market to a free-to-access technology that can be implemented in hardware and software alike. This gives vendors more freedom in implementation, with the hope of wider interoperability, depending on IPMX adoption. These are among the business reasons behind IPMX, which are covered in this talk from Matrox’s David Chiappini.

In today’s video, Matrox’s Jean Lapierre looks at the technical side of IPMX to answer some of the questions from those who have been following its progress. AIMS, the Alliance for IP Media Solutions, are upfront about the fact that IPMX is a work in progress with important parts of the project dealing with carriage of HDCP and USB still being worked on. However, much has already been agreed so it makes sense to start thinking about how this would work in real life when deployed. For a primer on the technical details of IPMX, check out this video from Andreas Hildebrand.

Jean starts by outlining the aims of the talk: to answer questions such as whether IPMX requires a new network, expensive switches and PTP. IPMX, he continues, is a collection of standards and specifications which enable transport of HD, 4K or 8K video in either an uncompressed form or a lightly compressed, visually lossless form with a latency of <1ms. Because you can choose to enable compression, IPMX is compatible with 1Gbps networks over CAT5e cabling as well as multi-gigabit infrastructure. Moreover, there’s nothing to stop you mixing compressed and uncompressed signals on the same network. In fact, the technology is apt for carrying many streams, as each media type (known as an ‘essence’, a term which also covers metadata) is sent separately, which can lead to hundreds of separate streams on the network. The benefit of splitting everything up is clear when you consider that, in the past, reading subtitles meant decoding a 3Gbps signal to access a data stream better measured in bytes per second. Receiving just the data you need allows servers or hardware chips to minimise costs.
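To see why compression matters on a 1Gbps link, here is a rough back-of-envelope sketch. It counts only active picture payload and ignores packet overhead, and the 4:2:2 10-bit sampling, the 4:1 compression ratio and the 90% usable-link headroom are illustrative assumptions rather than figures from the talk.

```python
def uncompressed_bitrate_bps(width, height, fps, bits_per_pixel):
    """Active-picture payload in bits per second (no packet overhead)."""
    return width * height * fps * bits_per_pixel


def fits_on_link(bitrate_bps, link_bps, headroom=0.9):
    """True if the stream fits within an assumed usable share of the link."""
    return bitrate_bps <= link_bps * headroom


GIGABIT = 1_000_000_000

# 4:2:2 10-bit sampling averages 20 bits per pixel (assumed format).
hd_60 = uncompressed_bitrate_bps(1920, 1080, 60, 20)   # about 2.49 Gbps
uhd_60 = uncompressed_bitrate_bps(3840, 2160, 60, 20)  # about 9.95 Gbps

# Hypothetical 4:1 light compression applied to the HD stream.
hd_60_compressed = hd_60 / 4                           # about 622 Mbps

print(fits_on_link(hd_60, GIGABIT))             # False: needs multi-gigabit
print(fits_on_link(hd_60_compressed, GIGABIT))  # True: fits on 1Gbps
```

Under these assumptions an uncompressed HD stream already exceeds a 1Gbps link, while a lightly compressed version of it fits with room to spare.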

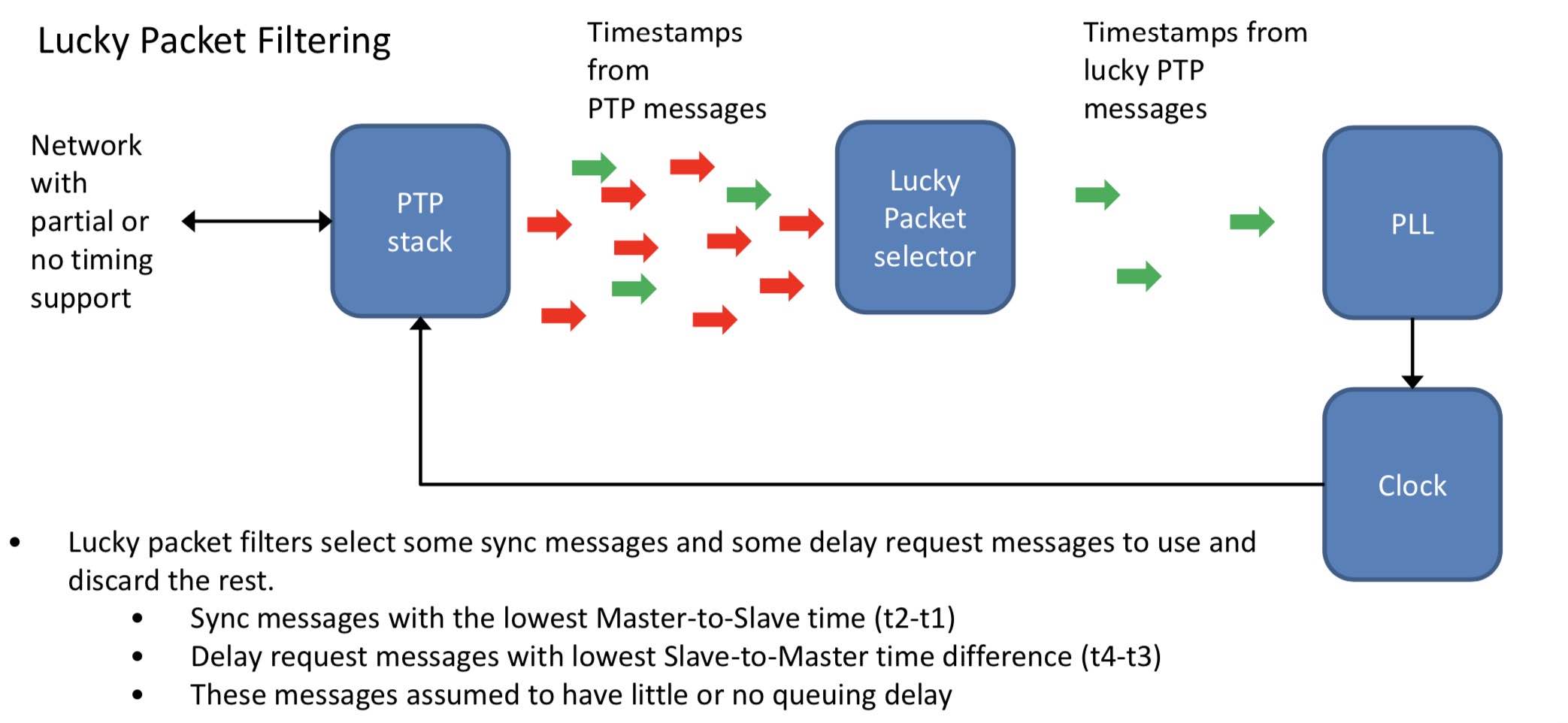

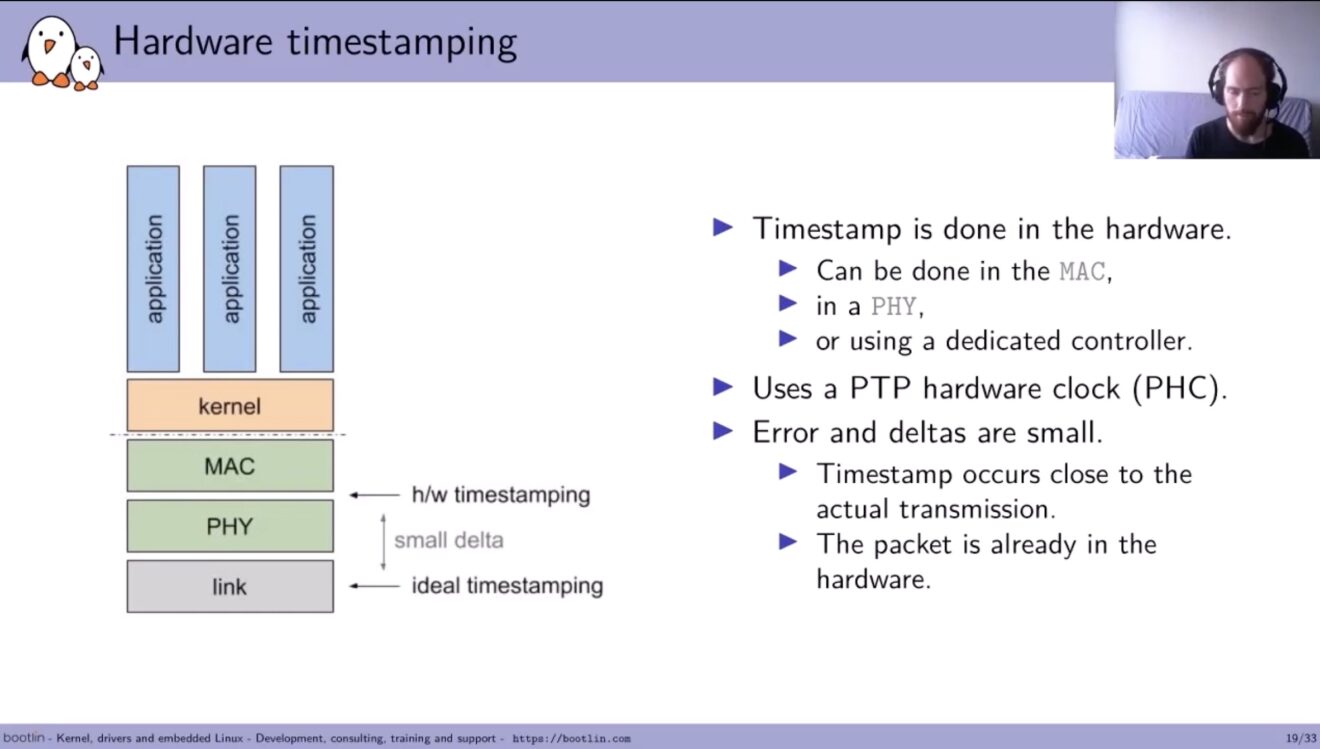

Jean explains how multicast is used to deliver streams to multiple receivers and how receivers can subscribe to multiple streams. A lot of the time, video streams are used separately, such as from a computer to a projector, meaning exact timing isn’t needed. Even signals coming into a vision mixer/board don’t always need to be synchronised because, in many situations, having a frame synchroniser on all inputs works well. There are, however, times when frame-accurate sync is important and for those times, PTP can be used. PTP stands for the Precision Time Protocol and if you’re unfamiliar, you can find out more here.
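As a rough illustration of what "subscribing" to a multicast stream means at the socket level, the sketch below joins an IPv4 multicast group using Python's standard library. It is not taken from the talk or from any IPMX specification; the function names, group addresses and port are hypothetical, and a real receiver would go on to parse the RTP packets it receives.

```python
import ipaddress
import socket
import struct


def build_mreq(group, interface="0.0.0.0"):
    """Pack the group and local interface addresses for IP_ADD_MEMBERSHIP."""
    if not ipaddress.ip_address(group).is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    return struct.pack("4s4s", socket.inet_aton(group),
                       socket.inet_aton(interface))


def open_receiver(group, port, interface="0.0.0.0"):
    """Return a UDP socket that has joined the given multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # Joining makes the host send an IGMP membership report, so the
    # network starts forwarding this group's traffic to the receiver.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    build_mreq(group, interface))
    return sock


# Hypothetical usage: one socket per essence, each on its own group.
# video = open_receiver("239.10.0.1", 5004)
# audio = open_receiver("239.10.0.2", 5004)
# packet, sender = video.recvfrom(2048)
```

Because each essence has its own group, a device that only needs audio or ancillary data joins just that group and never receives the video traffic.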

The upshot of using PTP with IPMX is that you can unlock perfect synchronisation for applications like video walls; any time you need to mix signals really. To reduce the load on grandmaster clocks, IPMX relaxes some of the PTP rules that SMPTE’s ST 2059 employs. PTP is a very accurate timing mechanism but it’s fundamentally different from black and burst because it’s a two-way technology that relies on an ongoing dialogue between the devices and the clock. This is why Jean says that for anything more than a small network, you are likely to need a switch that is PTP aware and can answer the queries which would normally go to the single, central grandmaster clock. In summary then, Jean explains that for many IPMX implementations you don’t need a new network, a PTP grandmaster or PTP-aware switches. But for those wanting to mix signals with perfect sync or those who have a large network, new investment would reap benefits.
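The two-way dialogue mentioned above can be sketched with the standard PTP delay request-response arithmetic. A device records four timestamps and, assuming the network delay is the same in both directions, works out how far its clock is from the master's. The function name and the example timestamps are illustrative and not taken from the talk.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and one-way delay from a PTP exchange.

    t1: master sends Sync               (master clock)
    t2: device receives Sync            (device clock)
    t3: device sends Delay_Req          (device clock)
    t4: master receives Delay_Req       (master clock)
    Assumes a symmetric path, i.e. equal delay in each direction.
    """
    master_to_device = t2 - t1   # delay + offset
    device_to_master = t4 - t3   # delay - offset
    offset = (master_to_device - device_to_master) / 2
    delay = (master_to_device + device_to_master) / 2
    return offset, delay


# Illustrative timestamps in nanoseconds.
offset, delay = ptp_offset_and_delay(t1=1000, t2=1150, t3=1200, t4=1250)
print(offset, delay)  # 50.0 100.0
```

Every device repeats this exchange continuously, which is why the query load grows with the size of the network and why PTP-aware switches help by answering on the grandmaster's behalf.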

Watch now!

Speaker

Jean Lapierre, Senior Director, Advanced Technologies, Matrox