Standards in media go back to the early days of cinema, when the sprocket holes in rolls of film were standardised to make it easier for the US Army to distribute training films. This standardisation work marked the beginning of SMPTE, though the acronym lacked a T at the time since television hadn’t yet been invented. There is a famous XKCD comic that mocks standards, or at least standards that promise to replace everything that went before. This underlines why what a standard doesn’t say can matter more than what it does. Giving the market room to evolve, advance and innovate is a vital aspect of good standards.

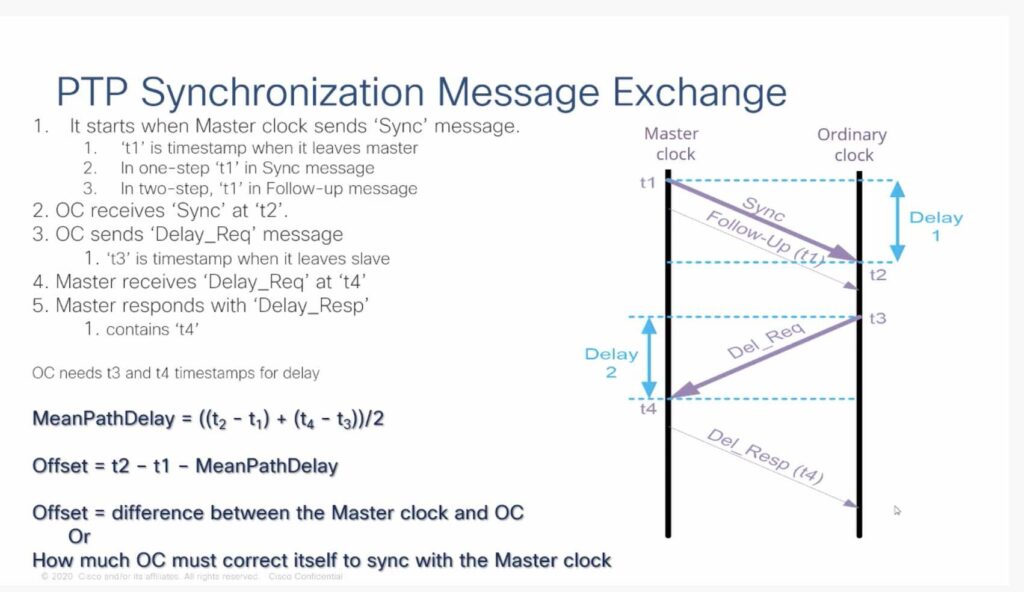

The broadcast industry is emerging from a time of great stability thanks to a number of standards that have been around for ages. SDI is a decades-old technology that is ubiquitous in the industry. Likewise, thanks to its almost universal presence in devices, H.264 has become the default codec unless you have a specific use case for HEVC, AV1, VP9 etc. Black and burst is now being replaced by PTP in IP installations. This is novel despite PTP’s upcoming twentieth birthday: it doesn’t matter how old a standard is, because if its launch in the broadcast sector is recent, support will be low.

This panel from SMPTE Hollywood features two members of SMPTE deeply involved with standardisation within the industry: Bruce Devlin, Standards Vice President, and Thomas Bause Mason, Director of Standards Development. They are joined by IP specialist JiNan Glasgow George and moderator Maureen O’Rourke from Disney.

In a sometimes frank discussion, we hear about standards bodies’ attempts to keep up with the industry-wide shift from hardware to software, the use of patents within standards, how standards bodies are financed and the cost of standards, software versus hardware patents, standardisation of AI models, ensuring standards are realistic and useful through plugfests, and the differences between standards bodies such as ANSI, ISO and SMPTE.

Watch now!

Speakers

| Name | Role |
|---|---|
| Thomas Bause Mason | Director of Standards Development, SMPTE |
| Bruce Devlin | Standards Vice President, SMPTE |
| JiNan Glasgow George | Patent Attorney, Neo IP |
| Maureen O’Rourke | Technical Lead Quality Control Officer, The Walt Disney Company |