If you’ve ever tried to implement your own player, you’ll know there’s a big gap between understanding the HLS/DASH spec and getting an all-round great player. Finding the best, most elegant, ways of dealing with problems like buffer exhaustion takes thought and experience. The same is true for low-latency playback.

Fortunately, Akamai’s Will Law is here to give us the benefit of his experience implementing his own and helping customers monitor the performance of their players. At the end of the day, the player is the ‘kingpin’ of streaming, comments Will. Without it, you have no streaming experience. All other aspects of the stream can be worked around or mitigated, but if the player’s not working, no one watches anything.

Will’s first tip is to implement ‘segment abandonment’. This is when a video player foresees that downloading the current segment is taking too long; if it continues, it will run out of video to play before the segment has arrived. A well-programmed player will sport this and try to continue the download of this segment from another server or CDN. However, Will says that many will simply continue to wait for the download and, in the meantime, the download will fail.

Tip two is about ABR switching in low-latency, chunked transfer streams. The playback buffer needs to be longer than the chunk duration. Without this precaution, there will not be enough time for the player to make the decision to switch down layers. Will shows a diagram of how a 3-second playback buffer can recover as long as it uses 2-second segments.

Will’s next two suggestions are to put your initial chunk in the manifest by base64-encoding it. This makes the manifest larger but removes the round-trip which would otherwise be used to request the chunk. This can significantly improve the startup performance as the RTT could be a quarter of a second which is a big deal for low-latency streams and anyone who wants a short time-to-play. Similarly, advises Will, make those initial requests in parallel. Don’t wait for the init file to be downloaded before requesting the media segment.

Whilst many of points in this talk focus on the player itself, Will says it’s wise for the player to provide metrics back to the CDN, hidden in the request headers or query args. This data can help the CDN serve media smarter. For instance, the player could send over the segment duration to the CDN. Knowing how long the segment is, the CDN can compare this to the download time to understand if it’s serving the data too slow. Perhaps the simplest idea is for the player to pass back a GUID which the CDN can put in the logs. This helps identify which of the millions of lines of logs are relevant to your player so you can run your own analysis on a player-by-player level.

Will’s other points include advice on how to avoid starting playing at the lowest bandwidth and working up. This doesn’t look great and is often unnecessary. The player could run its own speed test or the CDN could advise based on the initial requests. He advises never trusting the system clock; use an external clock instead.

Regarding playback latency, it pays to be wise when starting out. If you blindly start an HLS stream, then your latency will be variable within the duration of a segment. Will advocates HEAD requests to try to see when the next chunk is available and only then starting playback. Another technique is to vary your playback rate o you can ‘catch up’. The benefit of using rate adjustment is that you can ask all your players to be at a certain latency behind realtime so they can be close to synchronous.

Two great tips which are often overlooked: Request multiple GOPs at once. This helps open up the TCP windows giving you a more efficient download. For mobile, it can also help the battery allowing you to more efficiently cycle the radio on and off. Will mentions that when it comes to GOPs, for some applications its important to look at exactly how long your GOP should be. Usually aligning it with an integer number of audio frames is the way to choose your segment duration.

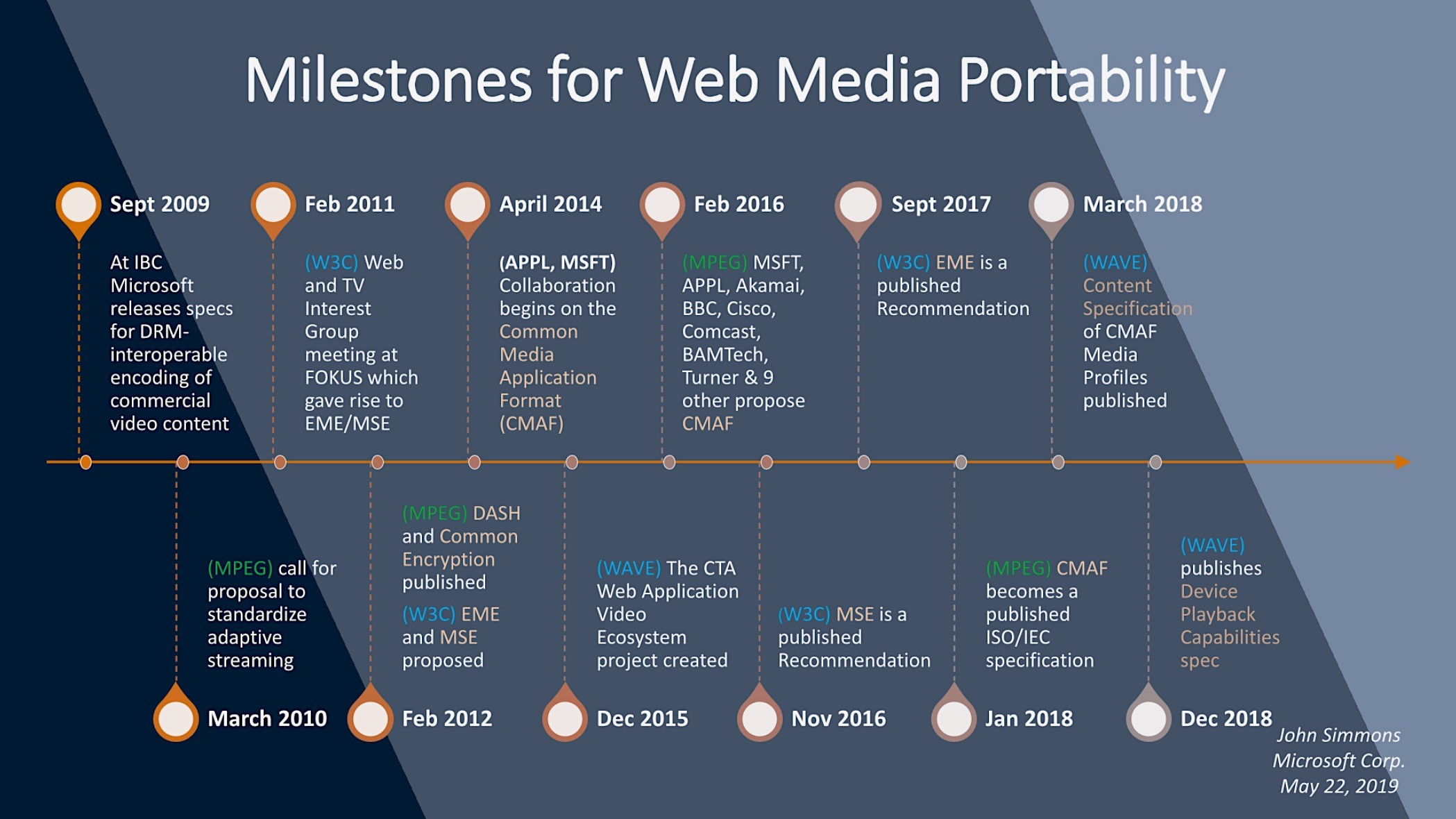

The talk finishes with an appeal to move to using CMAF containers for streaming ask they allow you to deliver HLS and DASH streams from the same media segments and move to a common DRM. Will says that CBCS encrypted content is now becoming nearly all-pervasive. Finally, Will gives some tips on how players are best to analyse which CDN to use in multi-CDN environments.

Watch now!

Speaker

|

Will Law Chief Architect, Akamai |