The history of the internet is a continuing story of proprietary technologies being overtaken by open ones, from the precursors to TCP/IP, to Flash/RTMP video delivery, to HLS. Understanding why these technologies appear, why they are subsumed by open standards and how popularity surges at that transition helps us make decisions now and foresee how the technology landscape may look in five or ten years’ time.

This talk, by John Simmons, is a talk of two halves. He looks first at the history of how our standards coalesced into what we have today, which fills in many blanks and makes the purpose of current technologies like MPEG DASH & CMAF clearer. He then looks at how we can understand what we have today in light of similar situations in the past, answering the question of whether we are at an inflexion point in technology.

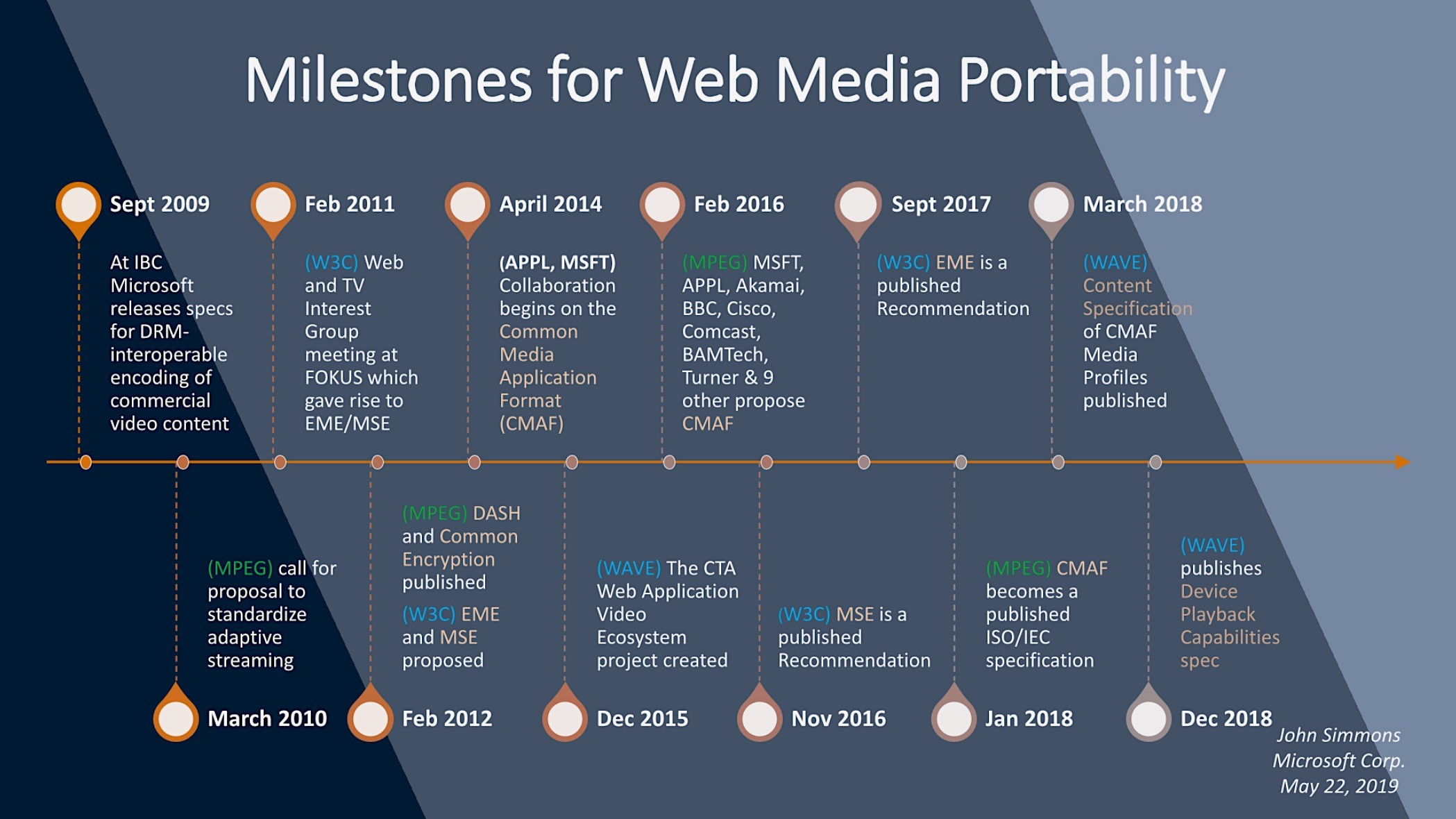

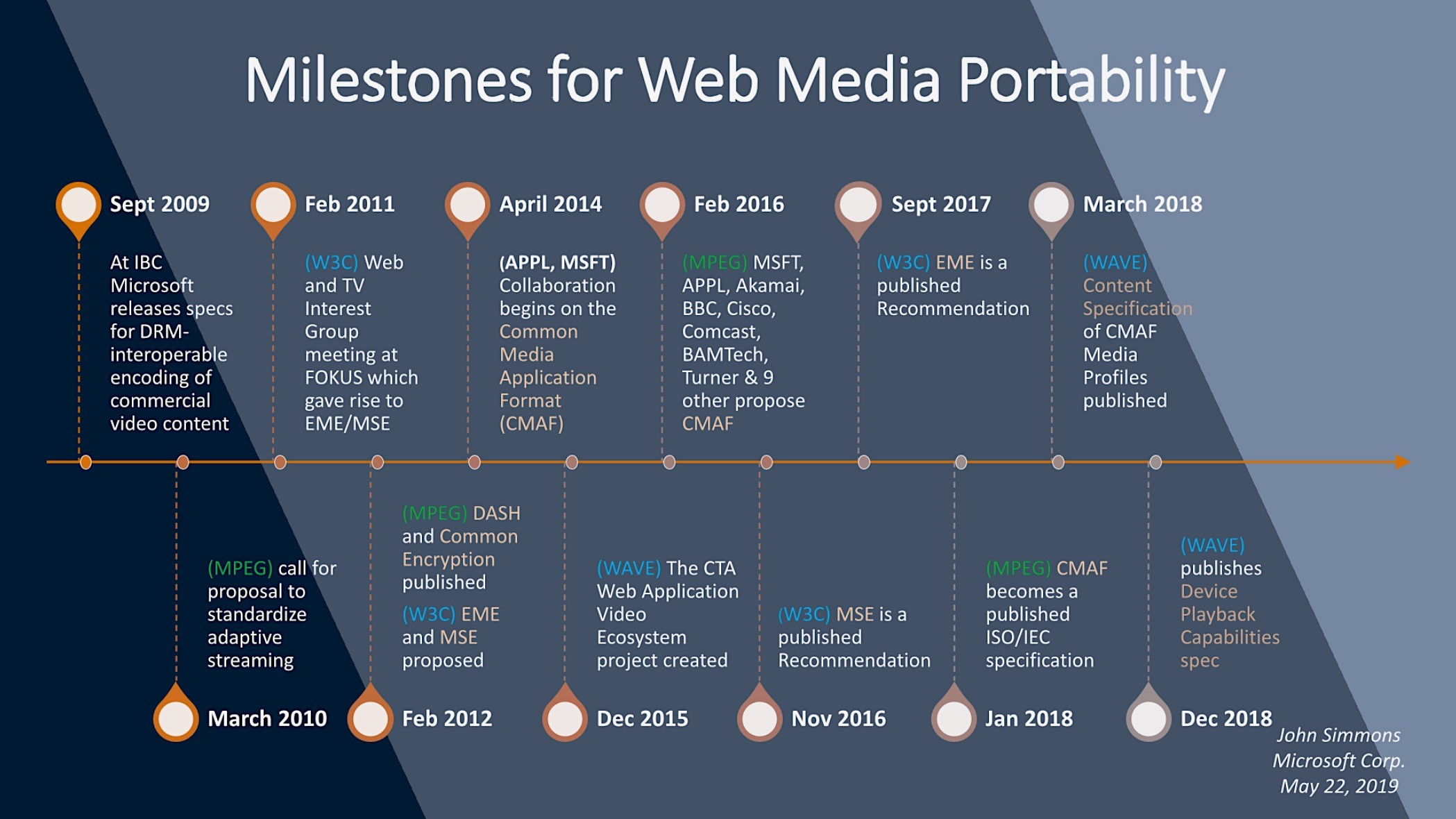

John first looks at the importance of making DRM-protected content as portable as non-protected content, which was easy to move between computers and systems. This was in response to a WIPO analysis which, as many would agree, concluded that this was essential to enable legal video use on the internet. In 2008, Microsoft analysed all the elements needed, beyond simple encryption, to allow such media to be portable: HTML extensions for delivery, DRM signalling, authentication, a standard protocol for adaptive delivery (also known as ABR) and an adaptive container format. We then take a walk through the timeline from 2009 to 2018, seeing the beginnings and published availability of technologies such as Common Encryption, MPEG DASH and CMAF.

John then walks through these key technologies, starting with the importance of Common Encryption (also known as CENC). Previously, all the DRM methods had their own container formats. Harmonisation of DRM is likely never going to happen, so we’ll always have Apple’s own, Google’s own, Microsoft’s and plenty of others. For streaming providers, delivering all the different formats is a major problem and makes for messy, duplicative workflows. Common Encryption allows for one container format which can contain any DRM information, allowing for a single workflow with different inputs. On the player side, the player can now simply accept a single stream of DRM information, authenticate with the appropriate service and decode the video.
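To make the player-side idea concrete, here is a minimal Python sketch; it is not taken from the talk. It assumes the DRM information arrives as a flat run of `pssh` (Protection System Specific Header) boxes, one per DRM system, and picks the first system the device supports. The function names (`iter_pssh_boxes`, `choose_drm`, `make_pssh`) are hypothetical and exist only for illustration; the two system IDs are the publicly registered values for Widevine and PlayReady.

```python
import struct
import uuid

# Publicly registered DRM system IDs; the labels are for illustration only.
KNOWN_SYSTEMS = {
    uuid.UUID("edef8ba9-79d6-4ace-a3c8-27dcd51d21ed"): "Widevine",
    uuid.UUID("9a04f079-9840-4286-ab92-e65be0885f95"): "PlayReady",
}


def iter_pssh_boxes(data: bytes):
    """Yield (system_id, key_ids, payload) for each 'pssh' box in a flat box sequence.

    Assumption: boxes use plain 32-bit sizes and are well formed inside;
    64-bit and 'to end of file' sizes are not handled in this sketch.
    """
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        if size < 8 or offset + size > len(data):
            break  # malformed or truncated box: stop scanning
        if box_type == b"pssh":
            pos = offset + 8
            version = data[pos]
            pos += 4  # skip version (1 byte) and flags (3 bytes)
            system_id = uuid.UUID(bytes=data[pos:pos + 16])
            pos += 16
            key_ids = []
            if version > 0:  # version 1 boxes list the key IDs they cover
                (kid_count,) = struct.unpack_from(">I", data, pos)
                pos += 4
                for _ in range(kid_count):
                    key_ids.append(data[pos:pos + 16].hex())
                    pos += 16
            (data_size,) = struct.unpack_from(">I", data, pos)
            pos += 4
            yield system_id, key_ids, data[pos:pos + data_size]
        offset += size


def choose_drm(init_data: bytes, supported: set):
    """Return the name of the first signalled DRM system the device supports, else None."""
    for system_id, _key_ids, _payload in iter_pssh_boxes(init_data):
        name = KNOWN_SYSTEMS.get(system_id)
        if name in supported:
            return name
    return None


def make_pssh(system_id: uuid.UUID, payload: bytes) -> bytes:
    """Build a version 0 'pssh' box (hypothetical helper, used only for the demo)."""
    body = bytes(4) + system_id.bytes + struct.pack(">I", len(payload)) + payload
    return struct.pack(">I4s", 8 + len(body), b"pssh") + body
```

Given one stream carrying both a Widevine and a PlayReady box, `choose_drm(stream, {"PlayReady"})` selects PlayReady and `choose_drm(stream, {"Widevine"})` selects Widevine from exactly the same bytes, which is the single-workflow benefit described above. A real player would then hand the matching payload to the corresponding licence service.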

CMAF is another key technology called out by John in enabling portability of media. It was co-developed with Apple to enable a common media format for HLS and DASH. We’ve covered this before on The Broadcast Knowledge starting with the ISO BMFF format on which DASH and CMAF are based, Will Law’s famous ‘Chunky Monkey’ talk and many more. We recently covered FuboTV’s talk on how they distribute HLS & DASH multi-codec encoding and packaging.

Also highlighted by John are the JavaScript Media Source Extensions and Encrypted Media Extensions, which allow browsers/JavaScript to interact with both ABR/Adaptive Streaming and DRM. He then talks about CTA WAVE, a project that specifically aims to improve streamed media experiences on consumer devices, CTA being the Consumer Technology Association who are behind the annual CES exhibition in Las Vegas.

What is often less apparent is the work currently under way to develop new standards and specifications. John calls out a number of different projects within W3C and MPEG, such as low latency support for CMAF and MSE, and codec switching in MSE. Work is under way on ad signalling and period boundaries, and SCTE-35 is making its debut in JavaScript, with ongoing work to create the link between ad markers and JS applications. He also calls out VVC and AV1 mappings into CMAF.

In the second part of the presentation, John asks ‘where will we end up?’ and draws upon two examples. One is the number of TCP/IP hosts between 1980 and 1992. He shows that once TCP/IP was publicly available, its adoption increased exponentially, moving on from the proprietary network interfaces available in the years before. The other is the number of websites between 1990 and 1997. Exponential growth happened after 1993, when the standard was set for web clients. This did take a few years to have a marked effect, but the number of websites moved from a flat ‘less than 100’ number to 600, then 10,000 in 1994, increasing to a quarter of a million by 1995 and then over one million in 1996. This shows the difference in power between ‘walled garden’ environments and the open internet.

John sees media technology today as still having a number of ‘traditional’ walled gardens such as DISH and Sky TV. He sees people, typically known as ‘cord cutters’, self-serving from multiple walled gardens to create their own larger pool of media options. He therefore sees two options for the future. One is ever larger walled gardens where large companies aggregate the content of smaller content owners/providers. The other is cloud services that act as a one-stop-shop for your media, but dynamically authenticate against whichever service is needed. This is a much more open environment, without the need to subscribe separately to each and every outlet in the traditional sense.

Watch now!

Speakers


John Simmons

W3C Evangelist, Media & Entertainment

W3C
