VMAF, from Netflix, has become a popular tool for evaluating video quality since its launch as an Open Source project in 2017. Coming out of research from the University of Southern California and The University of Texas at Austin, it’s seen as one of the leading ways to automate video assessment.

Netflix’s Christos Bampis gives us a brief overview of VMAF’s origins and its aims. VMAF came about because other metrics such as MS-SSIM and, in particular, PSNR don’t track perceived quality closely enough. Indeed, Christos shows that animated content (i.e. anime and cartoons) can receive very high subjective scores while its PSNR is the same as that of a live-action clip which humans rate a lot lower. Moreover, even in less extreme examples, Christos explains, PSNR is often 5% or so away from the actual subjective score in either direction.
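To make the PSNR limitation concrete, here is a minimal sketch of the standard PSNR formula for 8-bit samples. The tiny one-dimensional "images" and their values are made up for illustration and do not come from the talk. The same small error is added to a flat region and to a busy, textured region: PSNR cannot tell the two apart, even though a viewer is far more likely to notice the error on the flat one.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length sample lists."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must have the same number of samples")
    # Mean squared error over all samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(peak ** 2 / mse)


# Illustrative data only: a flat patch (think sky) and a textured patch (think foliage).
flat = [128] * 8
textured = [20, 230, 40, 210, 25, 235, 35, 215]

# The same +/-4 error pattern is applied to both patches.
error = [4, -4, 4, -4, 4, -4, 4, -4]
flat_distorted = [p + e for p, e in zip(flat, error)]
textured_distorted = [p + e for p, e in zip(textured, error)]

psnr_flat = psnr(flat, flat_distorted)
psnr_textured = psnr(textured, textured_distorted)

print(f"flat:     {psnr_flat:.2f} dB")
print(f"textured: {psnr_textured:.2f} dB")
```

Both lines print the same value, roughly 36.1 dB, because PSNR depends only on the size of the error and not on the content surrounding it.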

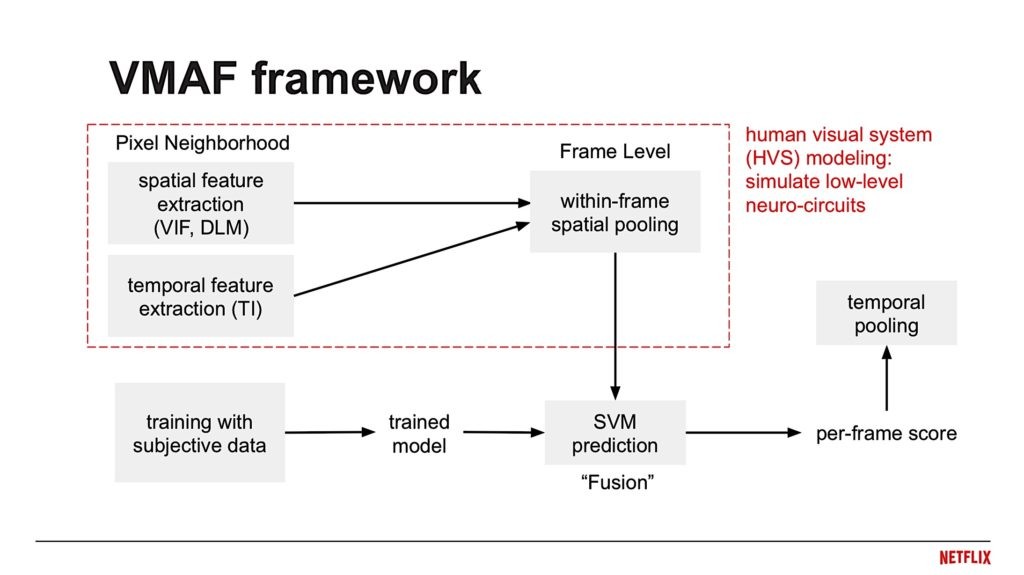

To a simple approximation, VMAF is a method of bringing out the spatial and temporal information from a video frame in a way which emphasises the types of things humans are attuned to, such as contrast masking. Christos shows an example of a picture where artefacts in the trees are much harder to see than similar artefacts on a colour gradient such as a sky or still water. The features extracted this way take account of situations like this and are fed into a model which has been trained to match the scores that humans gave the same material. The idea is that, when trained on many examples, the model can correctly predict a human’s score given a set of data extracted from a picture. Christos shows examples of how well VMAF out-performs PSNR in gauging video quality.
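The two-stage idea described above, perceptually motivated features followed by a model trained on human scores, can be sketched in a few lines. This is a toy illustration under stated assumptions, not VMAF’s actual features or model: `masked_error` is a hypothetical feature that crudely imitates contrast masking, the second feature (labelled "motion") is just a placeholder number, the regressor is plain least squares, and the training rows are synthetic values generated from a known rule so that the fit can be checked.

```python
def masked_error(reference, distorted):
    """Hypothetical spatial feature: absolute error, down-weighted where the
    reference is locally busy (a crude stand-in for contrast masking)."""
    total = 0.0
    last = len(reference) - 1
    for i, (r, d) in enumerate(zip(reference, distorted)):
        left = reference[i - 1] if i > 0 else r
        right = reference[i + 1] if i < last else r
        activity = abs(r - left) + abs(r - right)  # local contrast
        total += abs(r - d) / (1.0 + activity)
    return total / len(reference)


def fit_least_squares(rows, targets):
    """Fit bias + linear weights by solving the normal equations
    (X^T X) w = X^T y with Gauss-Jordan elimination."""
    X = [[1.0] + list(row) for row in rows]  # prepend a bias column
    n = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(n)]
        + [sum(x[i] * y for x, y in zip(X, targets))]
        for i in range(n)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("features are collinear; cannot fit")
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]


def predict(weights, row):
    """Predicted quality score for one feature row."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], row))


# Stage 1: the same +/-4 error is far less "visible" to the feature on texture.
flat = [128] * 8
textured = [20, 230, 40, 210, 25, 235, 35, 215]
error = [4, -4, 4, -4, 4, -4, 4, -4]
feature_flat = masked_error(flat, [p + e for p, e in zip(flat, error)])
feature_textured = masked_error(textured, [p + e for p, e in zip(textured, error)])

# Stage 2: synthetic (masked_error, motion) rows with synthetic "subjective"
# scores generated from score = 100 - 10 * masked_error - 2 * motion.
train_rows = [(0, 0), (1, 0), (0, 1), (2, 3), (4, 1)]
train_scores = [100, 90, 98, 74, 58]
weights = fit_least_squares(train_rows, train_scores)
predicted = predict(weights, (3, 2))

print(feature_flat, feature_textured)
print(predicted)
```

Unlike PSNR, the toy feature reports a much smaller value for the textured patch than for the flat one, and the fitted model then maps feature values onto the score scale it was trained against. A real metric uses far richer features, a more capable regressor and genuine subjective test data.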

Challenges are in focus in the second half of the talk. What are the things which still need working on to improve VMAF? Christos zooms in on two: design dimensionality and noise. By design dimensionality, he means how can VMAF be extended to be more general, delivering a number which has a consistent meaning in different scenarios? As the VMAF model has been trained on AVC, how can we deal with different artefacts which are seen with different codecs? Do we need a new model for HDR content instead of SDR and how should viewing conditions, whether ambient light or resolution and size of the display device, be brought into the metric? The second challenge Christos highlights is noise as he reveals VMAF tends to give lower scores than it should to noisy sources. Codecs like AV1 have film-grain synthesis tools and these need to be evaluated, so behaving correctly in the presence of video noise is important.

The talk finishes with Christos outlining that VMAF’s applicability to the industry is only increasing with new codecs coming out such as LCEVC, VVC, AV1 and more – such diversity in the codec ecosystem wasn’t an obvious prediction in 2014 when the initial research work was started. Christos underlines the fact that VMAF is a continually evolving metric which is Open Source and open to contributions. The Q&A covers failure cases, super-resolution and how to interpret close-call results which are only 1% different.

Watch now!

Download the presentation

Speaker

Christos Bampis, Senior Software Engineer, Netflix