This video looks at the whole streaming stack and asks what is in use now, which trends are coming to the fore, and how things will be done better in the future. Whatever part of the stack you’re optimising, it’s vital to have a way to measure the viewer’s QoE (Quality of Experience). Most workflows also put a lot of effort into redundancy so that the viewer sees no impact when problems happen upstream.

The Streaming Video Alliance’s Jason Thibeault digs deeper with Harmonic’s Thierry Fautier, Brenton Ough from Touchstream, SSIMWAVE’s Hojatollah Yeganeh and Damien Lucas from Ateme.

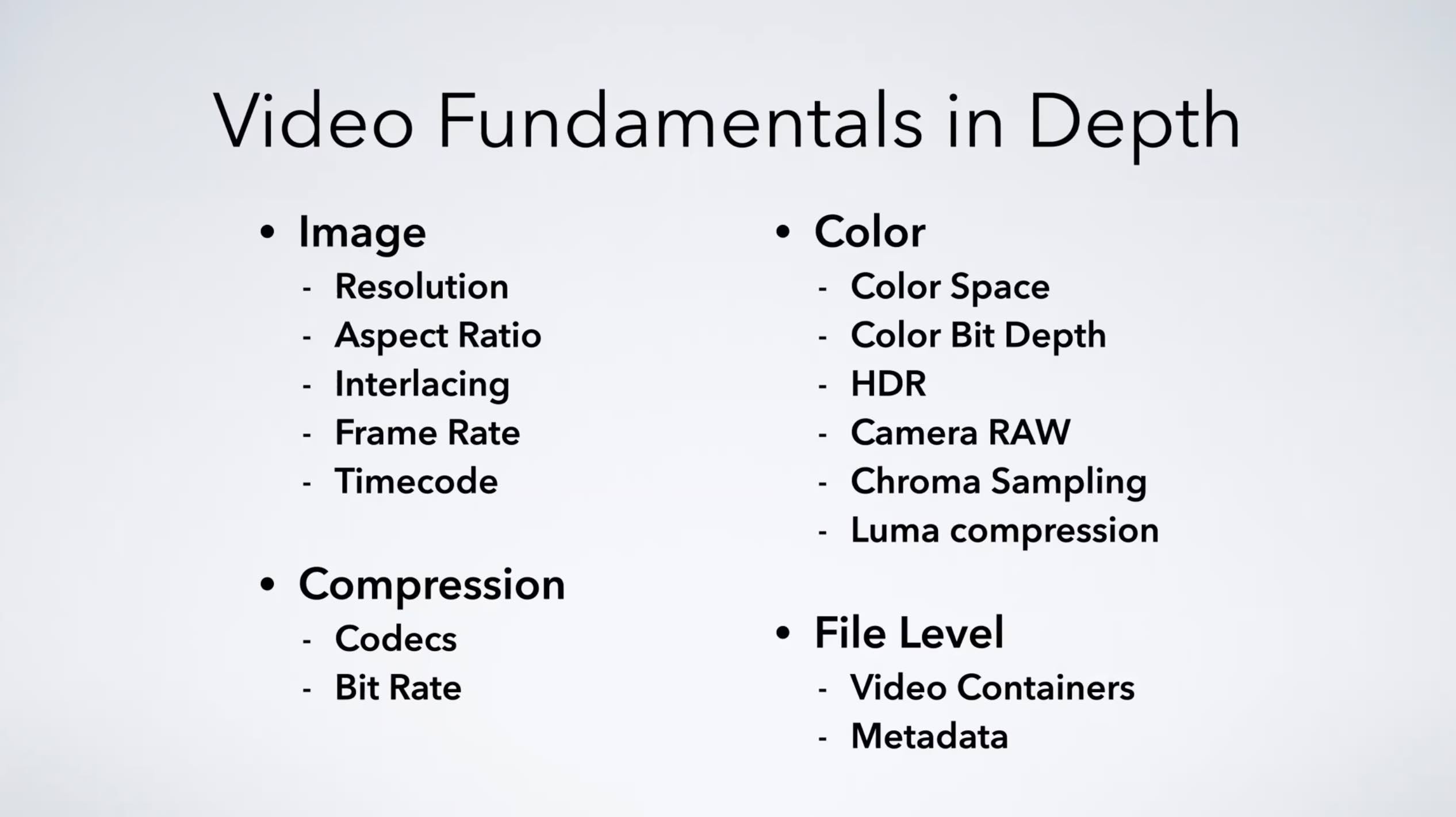

Talking about codecs, Thierry makes the point that only 7% of devices can currently support AV1. With 10 billion devices in the world supporting AVC, he sees a lot of benefit in continuing to optimise AVC rather than waiting for VVC support to become commonplace. When asked to identify trends in the marketplace, the panel picked out the move to the cloud as a big influence, one that drives not only the ability to scale but also the functions themselves. Gone are the days, Brenton says, when vendors would ‘lift and shift’ into the cloud. Instead, products are becoming cloud-native, which is a vital step towards functions and products that take full advantage of the cloud, such as being able to swap the order of functions in a workflow. Just-in-time packaging is cited as one example.

Examining the OTT Technology Stack from Streaming Video Alliance on Vimeo.

Other changes are that server-side ad insertion (SSAI) works a lot better in the cloud, and that sub-partitioning of viewers, where you deliver different ads to different groups of people, is more practical. Real-time access to CDN data, which gives near-immediate feedback into your streaming process, is also a game-changer that is increasingly available.
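As a rough illustration of what sub-partitioning viewers could look like, here is a minimal sketch that assigns each viewer to a stable cohort and picks an ad pod for that cohort. It is not taken from the panel: the cohort count, the `AD_PODS` table and the function names are all hypothetical, and a real SSAI system would use an ad decision server rather than a fixed lookup.

```python
import hashlib

# Hypothetical ad pods per cohort; the IDs are illustrative only.
AD_PODS = {
    0: ["ad-sports-01", "ad-sports-02"],
    1: ["ad-travel-01", "ad-travel-02"],
    2: ["ad-food-01", "ad-food-02"],
}


def cohort_for(viewer_id, n_cohorts=len(AD_PODS)):
    """Map a viewer ID to a stable cohort index using a SHA-256 hash."""
    digest = hashlib.sha256(viewer_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer, then reduce to a cohort index.
    return int.from_bytes(digest[:8], "big") % n_cohorts


def ads_for(viewer_id):
    """Return the (hypothetical) ad pod for the viewer's cohort."""
    return AD_PODS[cohort_for(viewer_id)]


if __name__ == "__main__":
    for viewer in ("viewer-123", "viewer-456"):
        print(viewer, cohort_for(viewer), ads_for(viewer))
```

Hashing the viewer ID keeps the assignment deterministic, so the same viewer lands in the same cohort on every request without the service having to store any per-viewer state.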

Open Caching is discussed on the panel as a vital step forward and one of many areas where standardisation is desperately needed. ISPs are fed up, we hear, with each service bringing its own caching box. It’s time that ISPs took a cloud-based approach to their infrastructure and enabled multi-use servers, potentially containerised, to move away from this ‘bring your own box’ mentality and take back control of their internal infrastructure.

HDR gets a brief mention in light of the Euro soccer championships currently on air and the Olympics in Japan soon to follow. Thierry says 38% of Euro viewership is over OTT and HDR is increasingly common, though SDR is still in the majority. HDR is more complex than just upping the resolution and requires much more care over which screen it’s watched on. This makes HDR more difficult to roll out, which may be one reason that adoption is not yet higher.

Before the closing Q&A, the panel talks about uses for ‘edge’ processing, which it agrees is a really important part of cloud delivery. Processing API requests, doing SSAI and applying content blackouts at the edge are examples of where the lower-latency response of edge compute works really well in the workflow.

Watch now!

Speakers

Thierry Fautier, VP Video Strategy, Harmonic Inc.

Damien Lucas, CTO, Ateme

Hojatollah Yeganeh, Research Team Lead, SSIMWAVE

Brenton Ough, CEO & Co-Founder, Touchstream

Moderator: Jason Thibeault, Executive Director, Streaming Video Alliance