Whether you feel your productivity has gone up or down during the Covid lockdowns, most people seem busier than ever. That makes it all the harder to gain the depth of knowledge needed to move from knowing how to get things done to knowing why they work, and to adapt to new demands. However you work with video, whether in the edit suite, in a transmission MCR or elsewhere, knowing how video is processed, represented and stored is valuable and can speed up both your work and your fault-finding.

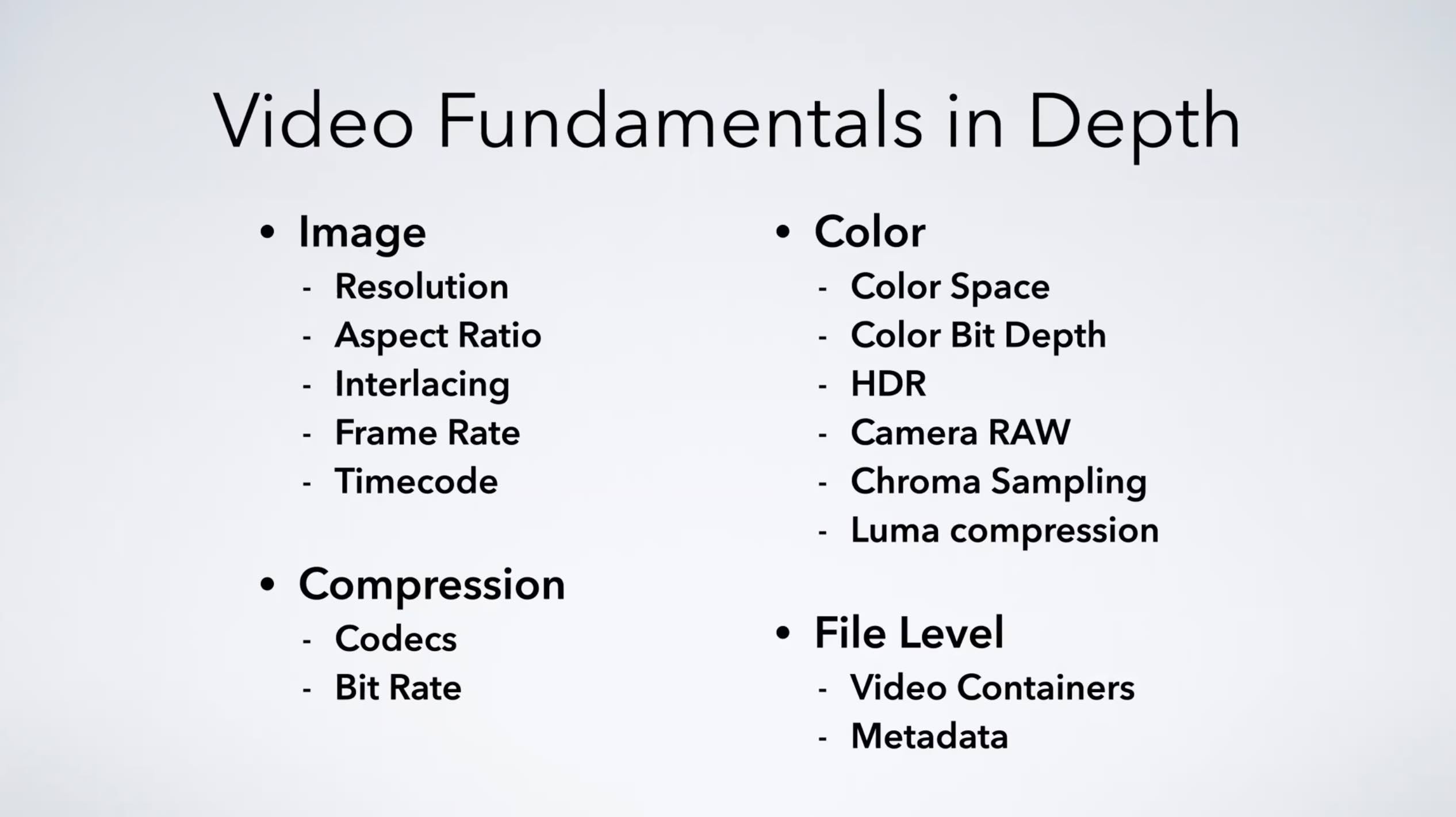

Noah Chamow from the Assistant Editors’ Bootcamp is here with a true video fundamentals course. Across four sections, this three-hour course takes you through how images are structured, compressed and stored, and also looks at colour. The first section covers resolution, aspect ratio (including camera, lens and delivery aspect ratios), interlaced images, frame rates and timecode.

The codecs section explains the basics of compression, then turns to post-production codecs such as ProRes and DNxHD before looking at the bitrates they use. Colour is a big topic, which Noah introduces with standard 2D and 3D colour charts, building up to the idea of colour depth (8 bit, 10 bit and so on). If you’re interested in more detail on colour spaces and fundamentals, this SMPTE deep dive should deliver the goods. Noah then moves on to HDR, covering both the extended luminance (brightness) range and Wide Colour Gamut aspects such as BT.2020, all of which link back to his earlier discussion of image bit depth.
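To put some numbers on colour depth: each extra bit doubles the number of code values a channel can hold. The short Python sketch below is our own illustration, not material from the course. It counts levels per channel and the resulting R'G'B' combinations. These are full-range counts; broadcast video normally uses a narrower "legal" range, so the usable code values are somewhat fewer.

```python
# Illustrative sketch (not from the course): code values per channel and
# total colour combinations for common video bit depths.
# Assumes full-range coding; narrow-range video (e.g. 16-235 for 8-bit luma)
# uses fewer of the available codes.

def levels_per_channel(bits: int) -> int:
    """Number of distinct code values a single channel can represent."""
    return 2 ** bits


def total_colours(bits: int, channels: int = 3) -> int:
    """Distinct combinations across all channels, e.g. R, G and B."""
    return levels_per_channel(bits) ** channels


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits}-bit: {levels_per_channel(bits)} levels per channel, "
              f"{total_colours(bits):,} colours")
```

Going from 8 to 10 bits takes you from 256 to 1,024 steps per channel. When those steps have to cover HDR's much wider brightness range, 8 bits tends to show visible banding. That is one reason HDR formats such as HDR10 use at least 10 bits.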

For a deeper dive into codecs, check out this SMPTE video. For a closer look at HDR, Tektronix’s Steve Holmes provides one in this video.

Also important on the post-production side of the industry is an understanding of camera raw formats such as Blackmagic RAW and ARRIRAW, which Noah discusses. These produce large files that can push computers to their limits and need processing such as debayering. Chroma sampling is another important concept, because virtually all video that reaches viewers has had some of its colour information removed. If you’re interested in editing, you need to understand this so you know which files will give you the best output. If you work in contribution or transmission, it’s important to know the difference between video arriving as 4:2:2 and as 4:2:0. Noah explains what all this means and why we do it in the first place.
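Chroma subsampling is written as J:a:b and describes a reference region J pixels wide and two rows high. Here, a is the number of chroma samples per colour-difference channel in the first row, and b is the number in the second row. The Python sketch below is our own illustration, not taken from the course, and its function names are hypothetical. It uses that rule to work out samples per pixel and the uncompressed size of one frame's active picture. It assumes J = 4 and dimensions that divide into 4x2 blocks, and it ignores blanking as well as the packing or padding that real file and SDI formats add.

```python
# Illustrative sketch (hypothetical helpers, not from the course): how J:a:b
# chroma subsampling affects the amount of data per frame.
# Assumptions: J = 4; width divisible by 4 and height by 2; active picture
# only, no blanking or format-specific packing/padding.

def parse_scheme(scheme: str) -> tuple[int, int]:
    """Split e.g. '4:2:0' into (a, b), checking that J is 4."""
    j, a, b = (int(part) for part in scheme.split(":"))
    if j != 4:
        raise ValueError("this sketch only handles J = 4 notation")
    return a, b


def samples_per_block(scheme: str) -> int:
    """Samples in one 4x2 block: 8 luma plus two chroma channels of a + b each."""
    a, b = parse_scheme(scheme)
    return 8 + 2 * (a + b)


def samples_per_pixel(scheme: str) -> float:
    """Average number of stored samples per pixel (3.0 means no subsampling)."""
    return samples_per_block(scheme) / 8


def frame_bits(width: int, height: int, scheme: str, bit_depth: int) -> int:
    """Uncompressed bits for one frame of active picture."""
    if width % 4 or height % 2:
        raise ValueError("width must be a multiple of 4 and height a multiple of 2")
    blocks = (width // 4) * (height // 2)
    return blocks * samples_per_block(scheme) * bit_depth


if __name__ == "__main__":
    for scheme in ("4:4:4", "4:2:2", "4:2:0"):
        size_bytes = frame_bits(1920, 1080, scheme, 10) // 8
        print(f"{scheme}: {samples_per_pixel(scheme)} samples/pixel, "
              f"{size_bytes:,} bytes per 1080-line 10-bit frame")
```

Relative to 4:4:4, 4:2:2 carries two-thirds of the samples and 4:2:0 carries half. This is why 4:2:0 is common for delivery to viewers, while 4:2:2 or better is preferred wherever footage will be graded, keyed or re-encoded.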

The video concludes by looking at video containers. Noah starts with the MOV container, also known as QuickTime. If you have an ‘off-beat’ sense of humour, you may enjoy this video on containers and codecs from Adult Swim. With a lot more decorum, Noah covers MXF and how to move between container formats in your editing software.

Whether you’ve been asking “Which codec should I use?”, struggling to understand steps in your workflow or just looking for ways to get your work done better or faster, this training session should deepen your understanding of video and give you the context you need.

Watch now!

Speaker

Noah Chamow
Co-Founder, Assistant Editors’ Bootcamp
Assistant Online Editor, Level 3 Post