Let’s face it, there are a lot of streaming protocols out there, both for contribution and for distribution. RTMP for internet ingest is being displaced by RIST and SRT, whilst low-latency contenders such as CMAF and LL-HLS are vying for position as they try to oust HLS and DASH in existing services streaming to the viewer.

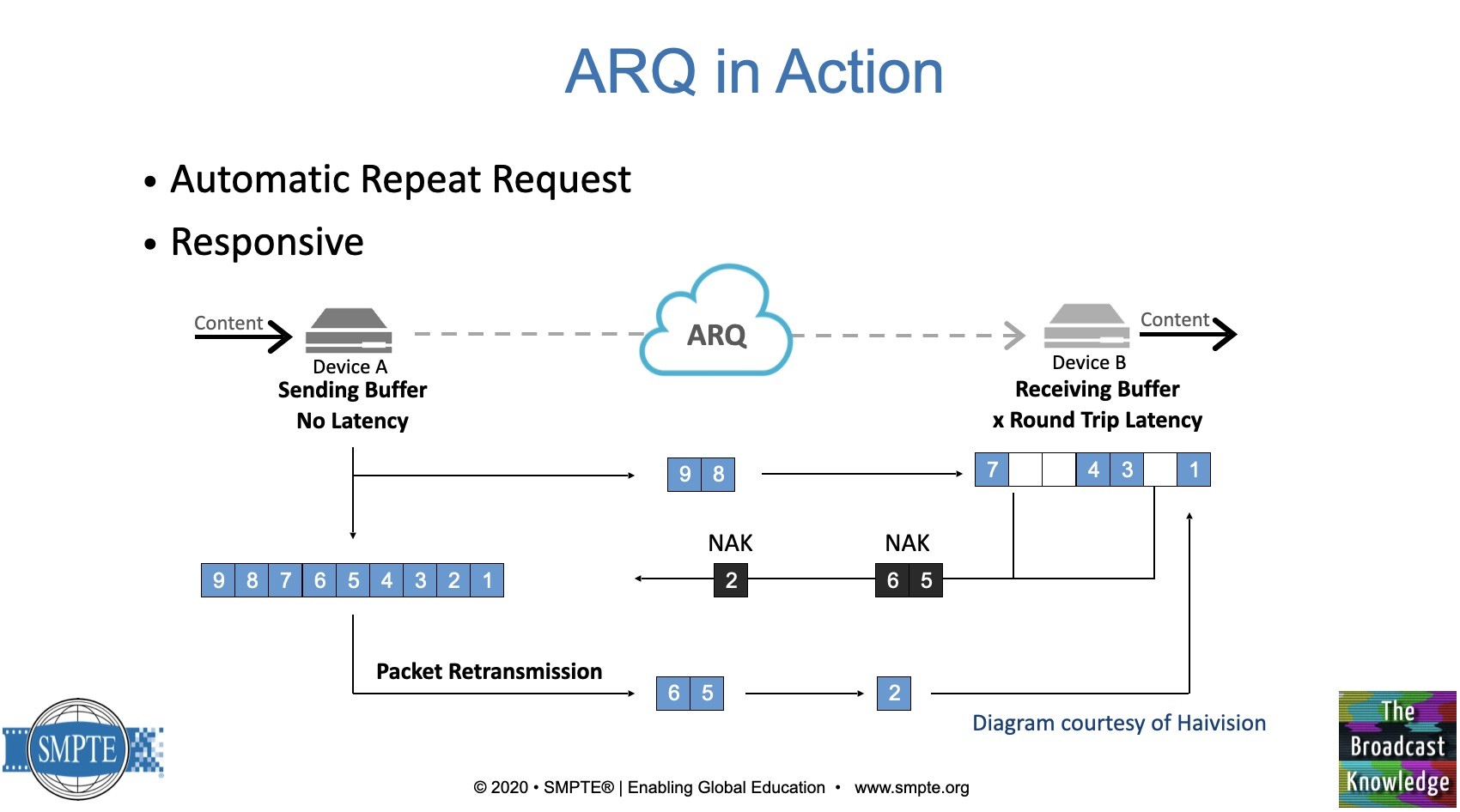

This panel, hosted by Jason Thibeault from the Streaming Video Alliance, talks about all these protocols and attempts to put each in context, both in the broadcast chain and in terms of its features. Two of the main contribution technologies are RIST and SRT. Both are UDP-based protocols which recover lost packets by having the receiver re-request them from the sender. This results in very high resilience to packet loss, which makes them well suited to internet deployments.
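The re-request mechanism described above can be illustrated with a toy simulation. This is a minimal sketch of the general idea only, not the SRT or RIST wire format: the `Sender`, `Receiver` and `simulate` names are hypothetical, retransmissions are assumed always to arrive, and a loss is only noticed once a later packet reveals a gap in the sequence numbers.

```python
from dataclasses import dataclass, field


@dataclass
class Sender:
    """Keeps sent packets so they can be resent on request (hypothetical helper)."""
    history: dict[int, bytes] = field(default_factory=dict)

    def send(self, seq: int, payload: bytes) -> tuple[int, bytes]:
        self.history[seq] = payload
        return seq, payload

    def retransmit(self, seq: int) -> tuple[int, bytes]:
        return seq, self.history[seq]


@dataclass
class Receiver:
    """Stores packets by sequence number and reports gaps (hypothetical helper)."""
    received: dict[int, bytes] = field(default_factory=dict)
    highest_seq: int = -1

    def on_packet(self, seq: int, payload: bytes) -> list[int]:
        """Store a packet and return the sequence numbers still missing."""
        self.received[seq] = payload
        self.highest_seq = max(self.highest_seq, seq)
        return [s for s in range(self.highest_seq + 1) if s not in self.received]


def simulate(payloads: list[bytes], lost: set[int]) -> list[bytes]:
    """Send payloads in order, drop those whose index is in `lost`, then recover them."""
    sender, receiver = Sender(), Receiver()
    for seq, payload in enumerate(payloads):
        packet = sender.send(seq, payload)
        if seq in lost:
            continue  # dropped by the network on the first attempt
        # A gap in sequence numbers triggers a re-request for each missing packet.
        for missing in receiver.on_packet(*packet):
            # Assumption: the retransmission itself is never lost.
            receiver.on_packet(*sender.retransmit(missing))
    return [receiver.received[s] for s in sorted(receiver.received)]


if __name__ == "__main__":
    print(simulate([b"a", b"b", b"c", b"d"], lost={1, 2}))
```

A real implementation would also bound how long it waits for a retransmission, since a live stream has a fixed latency budget, and would need a separate way to detect loss of the final packets, which this sketch does not attempt.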

First, we hear about SRT from Maxim Sharabayko. He lists some of the 350 members of the SRT Alliance, a group of companies who are delivering SRT in their products and collaborating to ensure interoperability. Maxim explains that SRT is based on the UDT protocol and can do live streaming for contribution as well as optimised file transfer. He also explains that it’s free for commercial use and can be found on GitHub. SRT has been featured a number of times on The Broadcast Knowledge. For a deeper dive into SRT, have a look at videos such as this one, or the ones under the SRT tag.

Next, Kieran Kunhya explains that RIST was a response to an industry request for a vendor-neutral protocol for reliable delivery over the internet or other dedicated links. Not only does vendor-neutrality help remove reticence among users and vendors to adopt the technology, but interoperability is also a key benefit. Kieran calls out hitless switching across multiple ISPs and cellular bonding as important features of RIST. For a summary of all of RIST’s features, read this article. For videos with a deeper dive, have a look at the RIST tag here on The Broadcast Knowledge.
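To give a feel for what hitless switching involves, here is a minimal sketch, assuming the same stream is sent over two paths and each packet carries a sequence number. The receiver forwards the first copy of each packet to arrive and discards the duplicate, so the loss of one path causes no interruption. The `hitless_merge` function and the path labels are hypothetical, and this is not the RIST packet format.

```python
from typing import Iterable, Iterator

Packet = tuple[int, bytes]  # (sequence number, payload)


def hitless_merge(arrivals: Iterable[tuple[str, Packet]]) -> Iterator[Packet]:
    """Yield the first copy of each packet, whichever path delivered it."""
    seen: set[int] = set()
    for _path, (seq, payload) in arrivals:
        if seq in seen:
            continue  # duplicate from the other path, drop it
        seen.add(seq)
        yield seq, payload


if __name__ == "__main__":
    # Packet 1 is lost on "isp_a" but still arrives via "isp_b".
    arrivals = [
        ("isp_a", (0, b"a")),
        ("isp_b", (0, b"a")),
        ("isp_b", (1, b"b")),
        ("isp_a", (2, b"c")),
        ("isp_b", (2, b"c")),
    ]
    print(list(hitless_merge(arrivals)))
```

A production receiver would additionally reorder packets and keep only a sliding window of recent sequence numbers rather than an ever-growing set.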

Demystifying Video Delivery Protocols from Streaming Video Alliance on Vimeo.

Barry Owen represents WebRTC in this webinar, though Wowza deal with many protocols in their products. WebRTC’s big advantage is sub-second delivery, which is not possible with either CMAF or LL-HLS. Whilst it’s heavily used for video conferencing, for which it was invented, there are a number of companies in the streaming space using it for delivery to the user because of its almost instantaneous delivery speed. Unlike CMAF and LL-HLS, WebRTC doesn’t guarantee a perfect rendition of the video, but for auctions, gambling and interactive services, latency is always king. For contribution, Barry explains, the flexibility of being able to contribute from a browser can be enough to make this a compelling technology, although it does bring with it quality, profile and codec restrictions.

Josh Pressnell and Ali C. Begen talk about the protocols used for delivery to the user. Josh explains how Smooth Streaming has exited, leaving the field to DASH, CMAF and HLS. They discuss the lack of a true CENC (Common Encryption) mechanism, which leads to duplication of assets. Similarly, the discussion moves to the fact that many streaming services have to keep duplicate assets because of differences in target device support.

Looking ahead, the panel is buoyed by the promise of QUIC. There is concern that QUIC, the Google-invented protocol for HTTP delivery over UDP, is both under standardisation proceedings in the IETF and is also being modified by Google separately and at the same time. But the prospect of a UDP-style mode and the higher efficiency seems to instil hope across all the participants of the panel.

Watch now to hear all the details!

Speakers

| Speaker | Role |
| --- | --- |
| Ali C. Begen | Technical Consultant, Comcast |
| Kieran Kunhya | Founder & CEO, Open Broadcast Systems; Director, RIST Forum |
| Barry Owen | VP, Solutions Engineering, Wowza Media Systems |
| Josh Pressnell | CTO, Penthera Technologies |
| Maxim Sharabayko | Senior Software Developer, Haivision |
| Jason Thibeault (moderator) | Executive Director, Streaming Video Alliance |