SRT’s ability to make lossy networks seem like perfect video circuits is increasingly well known, testified to by the SRT Alliance having just surpassed 400 member companies. But this isn’t your average ‘overview’: it dispenses with the technology introductions and goes straight into the detail, so it is ideal for people who already know the basics and want some deeper knowledge plus a look at the new features to come.

For those wanting an introduction, the article ‘What is SRT?’ is a good starter, which also links to two other intro videos. But today we’re going to join Haivision’s Maxim Sharabayko to look below the surface of SRT.

Maxim starts by introducing the open-source Git repository and the open-source integrations available before heading into the feature matrix. This shows what is and isn’t in SRT. We see that on top of ARQ, it has FEC, encryption, stream multiplexing and, soon, connection bonding. Addressing the major feature areas one by one, we start with connectivity.

SRT has two modes for establishing a connection, which Maxim shows on handshake diagrams. We can see that the handshake need only take two round trips, so connections are quick to establish. This allows Maxim to show how firewall traversal is accomplished, though NAT traversal is not yet implemented.

Next on the list of topics is access control, which ensures that only authorised users can gain access. This is achieved using the Stream ID field within SRT control packets, which can contain up to 512 characters, meaning it can be used to transfer usernames, passwords (in the form of keys) and requests. Maxim then explains the AES PSK encryption function and discusses the potential implementation of TLS and DTLS.
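To make the Stream ID idea concrete, here is a minimal sketch of how a listener might pull a username and request out of the field before deciding whether to accept a connection. It assumes the `#!::key=value,key=value` convention (with `u` for user, `r` for resource and `m` for mode) described in the SRT access control guidelines; the function names, the treatment of plain IDs as a resource name and the allow-list check are hypothetical and not taken from the talk.

```python
MAX_STREAM_ID_LEN = 512  # limit quoted in the summary above


def parse_stream_id(stream_id: str) -> dict:
    """Parse '#!::key=value,key=value' into a dict of fields.

    Assumption: an ID without the '#!::' prefix is treated as a bare
    resource name and returned under the 'r' key.
    """
    if len(stream_id) > MAX_STREAM_ID_LEN:
        raise ValueError("Stream ID exceeds 512 characters")
    prefix = "#!::"
    if not stream_id.startswith(prefix):
        return {"r": stream_id}
    fields = {}
    for item in stream_id[len(prefix):].split(","):
        if not item:
            continue  # tolerate a trailing comma
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"malformed field: {item!r}")
        fields[key] = value
    return fields


def is_authorised(stream_id: str, allowed_users: set) -> bool:
    """Hypothetical policy: accept only if the 'u' field is on the allow-list."""
    return parse_stream_id(stream_id).get("u") in allowed_users
```

A listener callback could then call `is_authorised("#!::u=alice,r=cam1,m=request", {"alice"})` and reject the handshake when it returns `False`. A real deployment would check a key or token as well as the username.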

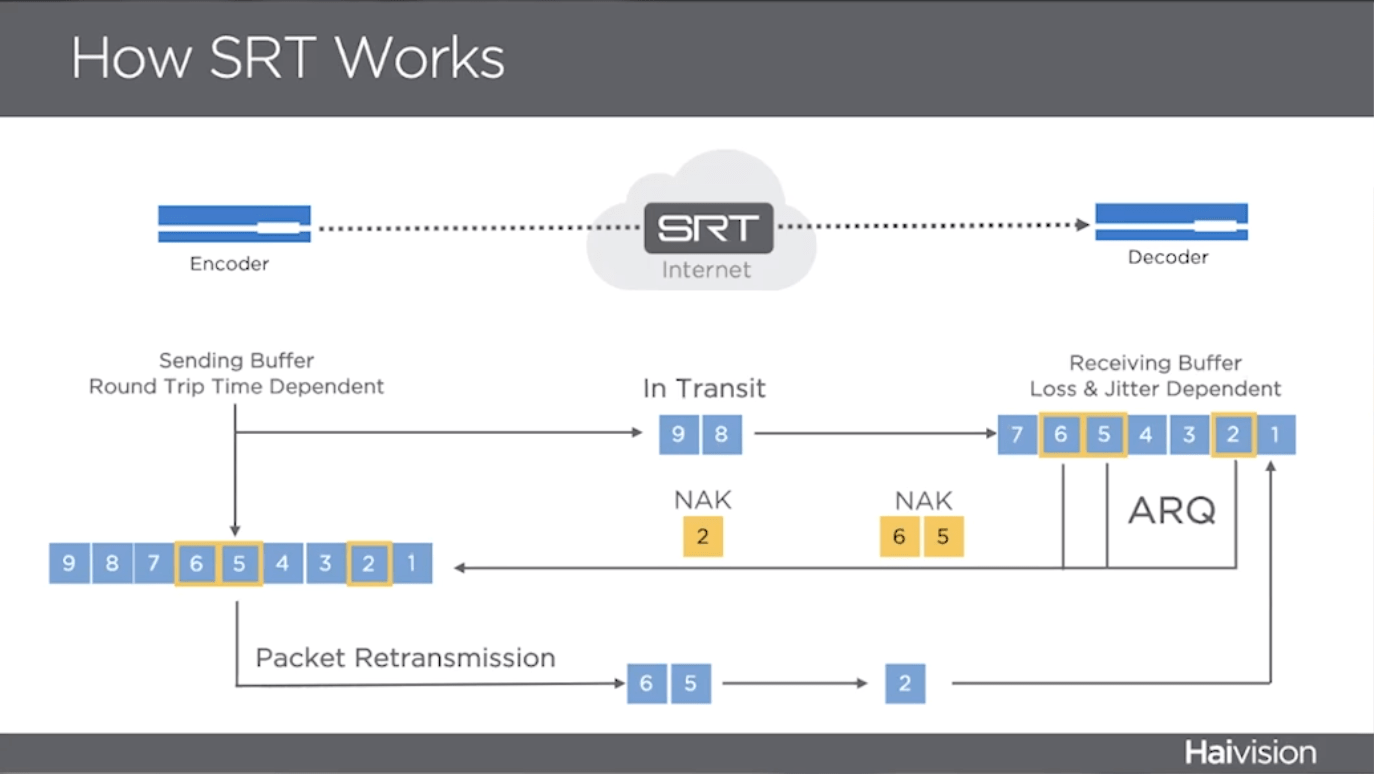

Content delivery is next under the magnifying glass, starting with the structure of SRT packets and the difference between the two types, Data and Control, the former being restricted to only containing payload or FEC data. Maxim covers the positive acknowledgement contained within SRT: the range of received packets is acknowledged every 10ms and, where 64 packets arrive in less than 10ms, a low-overhead acknowledgement is sent for each group of 64 data packets. But of course, it’s the NAK packets which are the most important part of the protocol. Maxim explains that they can report a single lost sequence number or a range of lost packets, and talks about when they are sent. We see how this then fits into the Timestamp Based Packet Delivery (TSBPD) mechanism, a feature of SRT which delivers packets to the receiving application with the same timing as they arrived at the sender. The last thing we look at in the section is a worked example of Too-Late Packet Drop which explains when and why packets are dropped.
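The mechanisms in the paragraph above can be sketched in a few lines. This is an illustrative model only, not SRT's implementation: it collapses lost sequence numbers into the single-or-range form a NAK can carry, computes a TSBPD-style release time as a time base plus the packet timestamp plus the configured latency, and treats a packet as too late once that release time has passed. All function and parameter names are hypothetical, and sequence-number wraparound and clock drift are deliberately ignored.

```python
def loss_ranges(lost):
    """Collapse lost sequence numbers into inclusive (first, last) ranges.

    A single loss becomes (n, n); consecutive losses are merged.
    Assumption: no sequence-number wraparound.
    """
    ranges = []
    for seq in sorted(set(lost)):
        if ranges and seq == ranges[-1][1] + 1:
            ranges[-1] = (ranges[-1][0], seq)  # extend the current range
        else:
            ranges.append((seq, seq))  # start a new range
    return ranges


def delivery_time_us(base_time_us, pkt_timestamp_us, latency_us):
    """Time at which the receiver should hand the packet to the application."""
    return base_time_us + pkt_timestamp_us + latency_us


def is_too_late(now_us, base_time_us, pkt_timestamp_us, latency_us):
    """True once the packet's release time has passed, so waiting for a
    retransmission can no longer help and the packet is given up on."""
    return now_us > delivery_time_us(base_time_us, pkt_timestamp_us, latency_us)
```

For example, with a time base of 1,000,000 µs, a packet timestamp of 40,000 µs and 120 ms of latency, the packet is due at 1,160,000 µs. If it is still missing after that point, it would be skipped rather than holding up the packets behind it.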

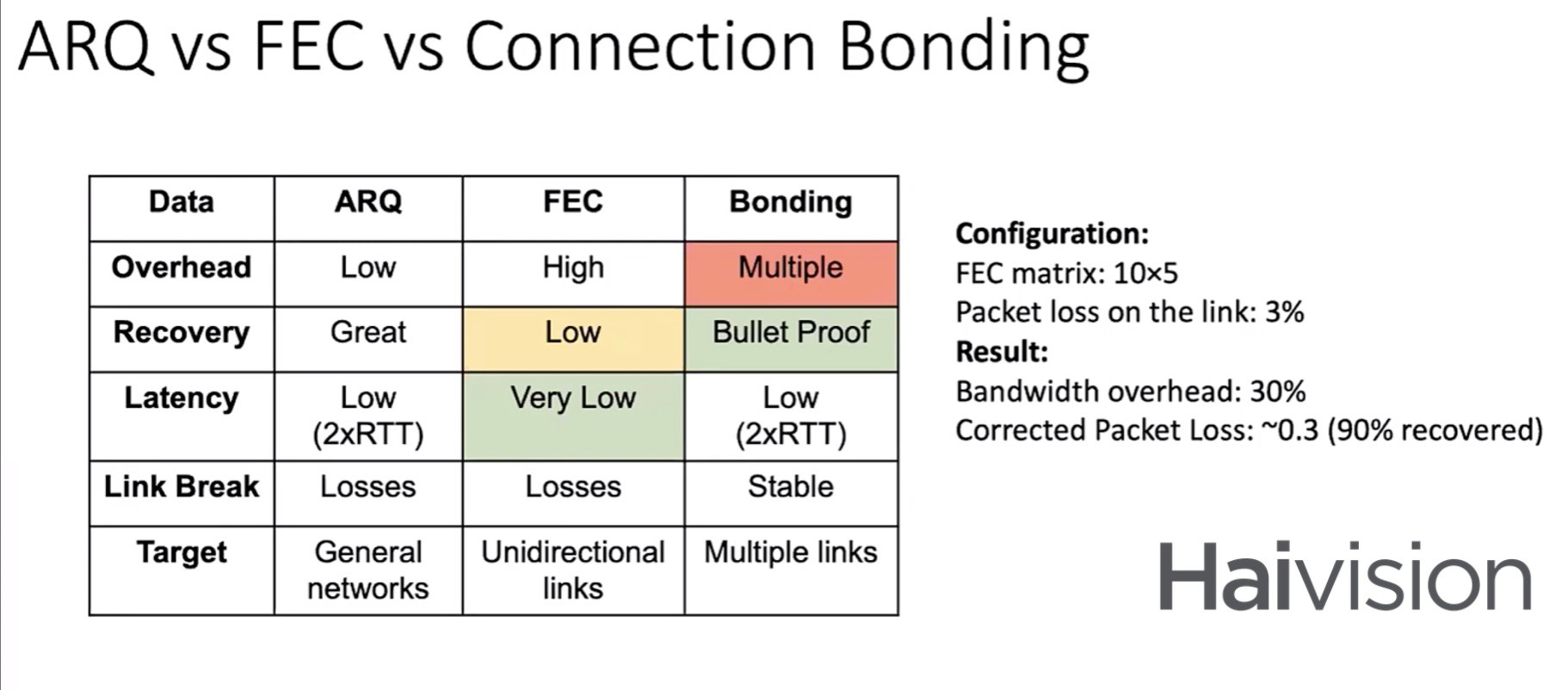

ARQ isn’t the only recovery mechanism in SRT; it also provides FEC and, soon, channel bonding. FEC can be useful but does have downsides which should be understood. There is a permanent bandwidth overhead, even when the circuit is working well, and further latency is needed in order to generate the necessary recovery packets. Bonding allows you to send the same stream over more than one circuit and use data from circuit B to fill in any gaps in circuit A; this technique is used in SMPTE ST 2022-7. Connection bonding, though, can also use multiple connections at once with dynamic balancing across them. Maxim sums up the pros and cons of the different techniques in the table below.
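As a rough illustration of the gap-filling idea behind sending the same stream over two circuits, the sketch below merges packets from two links by sequence number, keeping the first copy seen and using the other link to fill holes. It is a toy model under stated assumptions: packets are `(sequence, payload)` tuples, everything is already buffered, and there is no timing or wraparound handling. The `merge_links` name is hypothetical and this is not how SRT or ST 2022-7 receivers are actually implemented.

```python
def merge_links(link_a, link_b):
    """Merge two iterables of (seq, payload) into one ordered, de-duplicated list.

    The first copy of each sequence number wins; the second link only
    contributes packets that the first link lost.
    """
    merged = {}
    for seq, payload in list(link_a) + list(link_b):
        merged.setdefault(seq, payload)  # keep the first copy seen
    return [(seq, merged[seq]) for seq in sorted(merged)]


# Circuit A lost packet 3, circuit B lost packet 2.
circuit_a = [(1, b"a"), (2, b"b"), (4, b"d")]
circuit_b = [(1, b"a"), (3, b"c"), (4, b"d")]
recovered = merge_links(circuit_a, circuit_b)
```

A packet is only unrecoverable here if both circuits lose the same sequence number, which is why the approach works best when the two paths fail independently.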

The talk finishes with a look at stream multiplexing, congestion control and ways in which you can use the SRT statistics which are constantly updated to manage your connectivity.

Watch now!

Speakers

Maxim Sharabayko, Senior Software Developer, Haivision