Multicast ABR (mABR) is a way of delivering standard HTTP-based streams such as HLS and DASH over multicast. An ISP can use its managed network to multicast to thousands of homes, and only within the home itself does the stream get converted into unicast HTTP. This allows devices in the home to access streaming services in exactly the same way as they would Netflix or iPlayer, while avoiding strain on the core network. Streaming is a point-to-point service, so each device takes its own stream. If you have 3 devices in the home watching a service, you’ll be sending 3 streams out to them. With mABR, the core network only ever sees one stream to the home and the fan-out to individual devices is done inside the home.
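To make the scaling argument concrete, here is a minimal sketch of the stream count for a single home. The function name and the service labels are purely illustrative, and the model assumes the gateway joins each service once and fans it out locally.

```python
def streams_into_home(device_services, use_mabr):
    """Count the streams the ISP network carries into one home.

    device_services: one entry per device, naming the service it is watching.
    """
    if use_mabr:
        # Assumption: the home gateway receives each service once over
        # multicast and serves every local device from that single copy.
        return len(set(device_services))
    # Plain unicast: every device pulls its own copy across the network.
    return len(device_services)


devices = ["news", "news", "news"]  # three devices watching the same service
print(streams_into_home(devices, use_mabr=False))  # 3
print(streams_into_home(devices, use_mabr=True))   # 1
```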

Guillaume Bichot from Broadpeak explains how this would work with a multicast server that picks up the streaming files from a CDN or the internet and converts them into multicast. This then needs a gateway at the other end to convert the multicast back into unicast. The gateway can run on a set-top-box in the home, as long as multicast can be carried over the last mile to the box. Alternatively, it can be upstream at a local headend or similar.

At the beginning of the talk, we hear from BBC R&D’s Richard Bradbury who explains the current state of the work. Published as DVB Bluebook A176, this is currently written to account for live streaming, but will be extended in the future to deal with video on demand. The gateway is able to respond with a standard HTTP redirect if it becomes overloaded which seamlessly pushes the player’s request directly to the relevant CDN endpoint.
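The redirect behaviour can be sketched roughly as follows. This is not taken from the DVB specification: the CDN address, the overload threshold, the choice of a 307 status code and the function name are all assumptions made for illustration.

```python
from urllib.parse import urljoin

CDN_BASE = "https://cdn.example.com/"  # hypothetical CDN endpoint
MAX_ACTIVE = 8                         # hypothetical overload threshold


def handle_request(path, segment_cache, active_requests):
    """Return (status, headers, body) for a player's segment request."""
    if active_requests >= MAX_ACTIVE:
        # Overloaded: hand the player straight to the CDN with a redirect.
        # 307 is an assumed choice; the source only says "standard HTTP redirect".
        location = urljoin(CDN_BASE, path.lstrip("/"))
        return 307, {"Location": location}, b""

    body = segment_cache.get(path)
    if body is None:
        # Segments the gateway does not hold are outside this sketch.
        return 404, {}, b""

    # Normal case: serve the segment received over multicast as unicast HTTP.
    return 200, {"Content-Type": "application/octet-stream"}, body


cache = {"/live/seg1.m4s": b"segment-bytes"}
print(handle_request("/live/seg1.m4s", cache, active_requests=2)[0])
print(handle_request("/live/seg1.m4s", cache, active_requests=8)[1])
```

Because the redirect is ordinary HTTP, a standard player follows it without knowing a gateway was involved, which is what keeps the fallback seamless.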

DVB also outlines how players can contact the CDN for missing data or for video streams that are not currently provided via the gateway. Guillaume outlines which parts of the ecosystem are specified and which are not. For instance, the function of the server is explained but not how it achieves this. He then shows where all this fits into the network stack and highlights that this is protocol-agnostic as far as media delivery is concerned. Whilst they have used DVB-DASH as their assumed target, this could as easily work with HLS or other formats.

Guillaume finishes by showing deployment examples. We see that this can work with uni-directional satellite feeds with a return channel over the internet. It can also work with multiple gateways accessible to a single consumer.

The webinar ends with questions, although Richard Bradbury was also answering questions in the chat throughout. DVB has provided a transcript of these questions.

Watch now!

Download the slides from this presentation

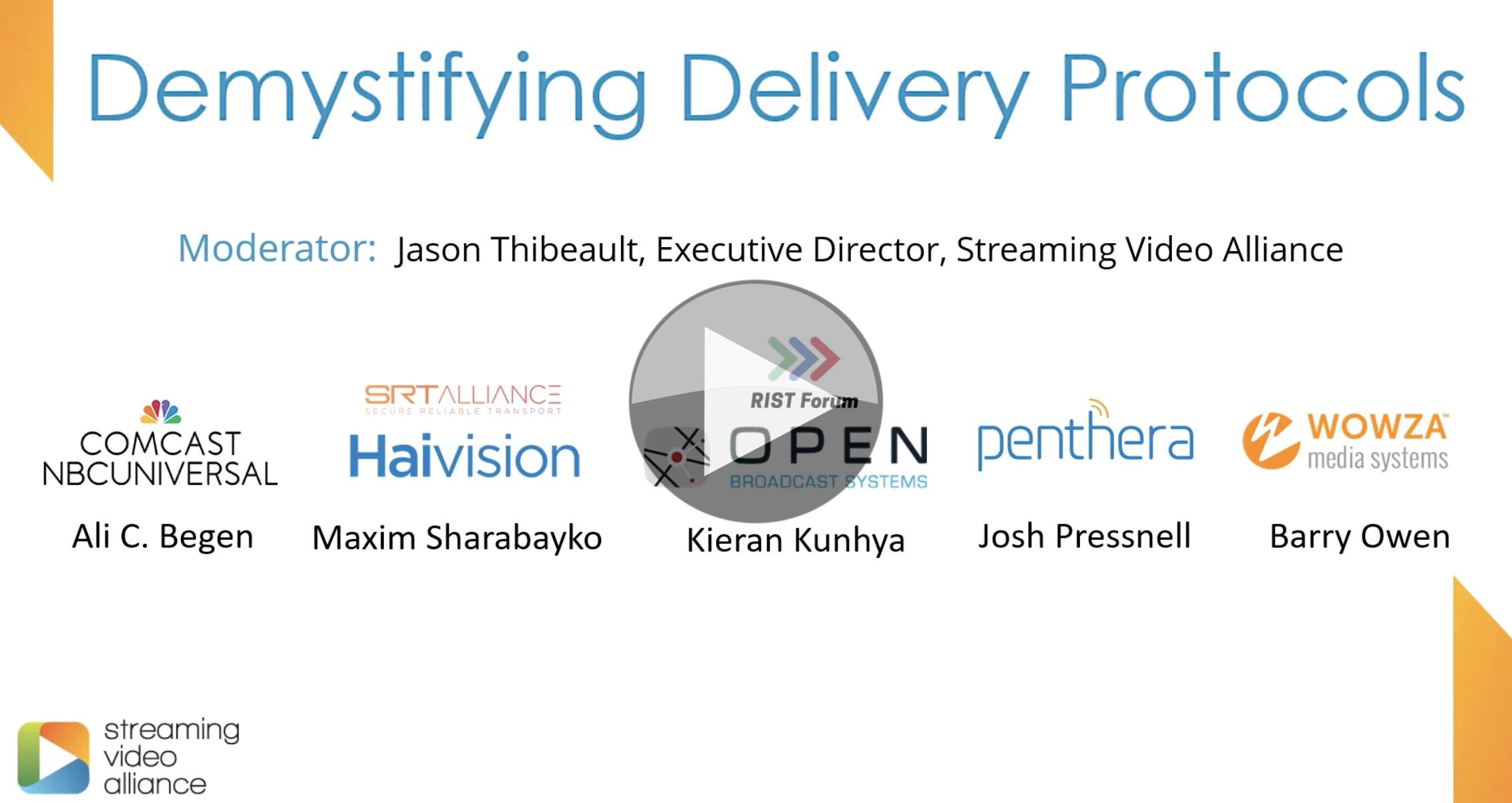

Speakers

Richard Bradbury, Lead Research Engineer, BBC R&D

Guillaume Bichot, Principal Engineer, Head of Exploration, Broadpeak