Is it possible to improve on CMAF’s offer of an ultra-low-latency, scalable protocol with good viewer experience? This is what HESP, the High-Efficiency Streaming Protocol, promises. With almost instant channel change times and sub-second latency, it’s worth taking a look at this protocol, created by THEOPlayer, to understand where it might fit in your workflows.

In this talk, presented by Pieter-Jan Speelmans and Johan Vounckx from THEO, we hear more detail surrounding HESP’s inception. Quality, latency and bitrate are often described as a triangle: if you improve one or even two, the remaining factor gets worse to compensate. HESP plays in a similar triangle connecting ‘viewer experience’, ‘low latency’ and ‘scalability’. If you compare WebRTC with CMAF, you see that WebRTC prioritises low-latency streaming but suffers in terms of scalability. CMAF, with 2-5 seconds more latency, scales much better, but its channel zapping times are high, which affects viewer experience as well as overall latency. HESP, contends Pieter-Jan, actually improves all three. It’s able to do this because it’s not extending existing protocols which weren’t designed to meet all these requirements. Rather, it brings in new techniques which shift the whole equation.

THEOPlayer has created the HESP Alliance, which is devoted to standardising the HESP technology through the IETF or another avenue, promoting adoption through marketing and the creation of tools, certification, and the management of intellectual property. The talk outlines the decoder royalties, which can be payable per subscriber, per subscriber per hour, or per device.

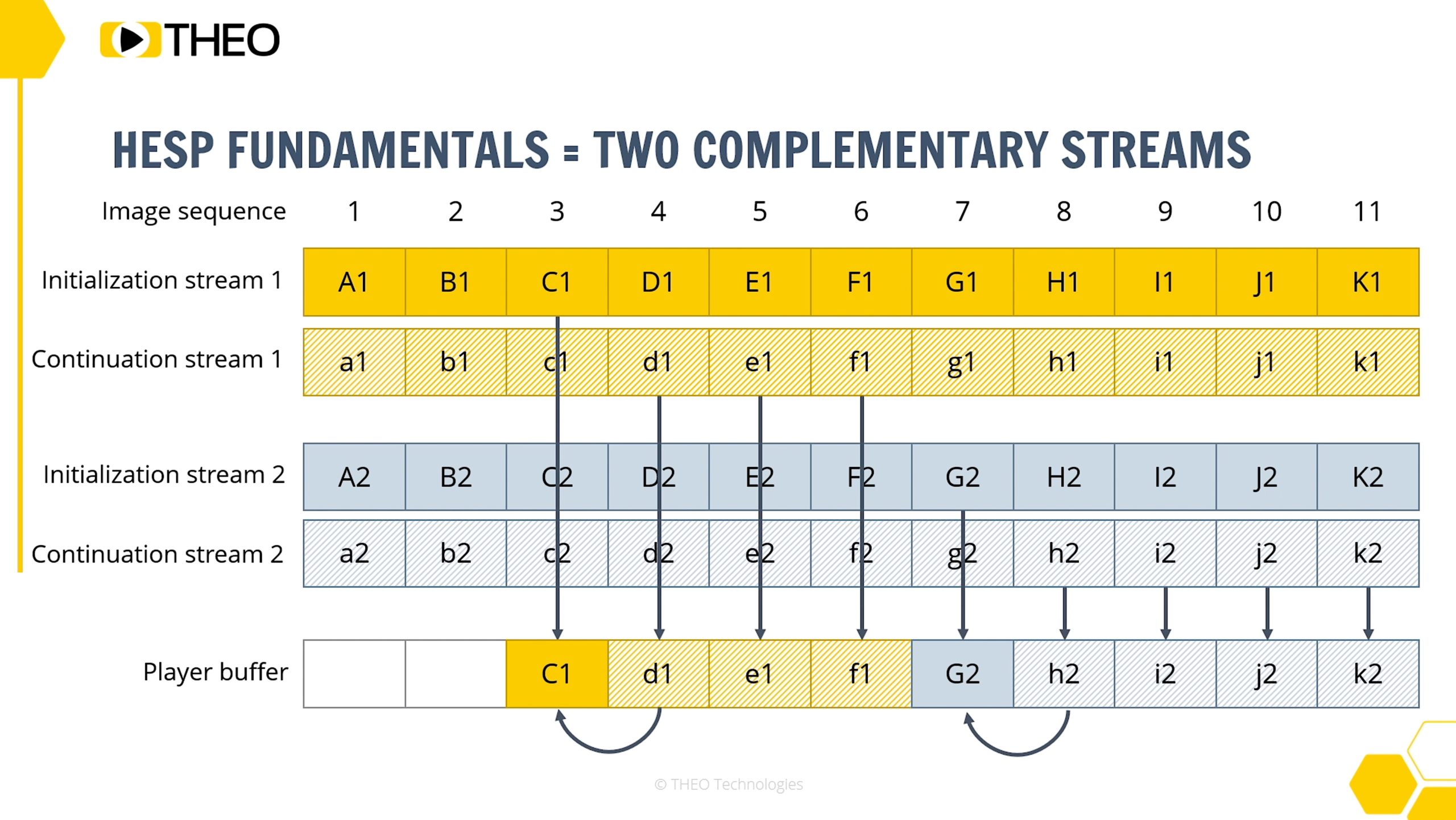

Looking at the technical details, we find out that you can actually start playing an HESP stream without downloading the manifest. While HESP does have manifest files, they change very infrequently. If the manifest does change at short notice, the server can ask players to download the new one by embedding a message in the stream. The channel zapping speed is achieved using two streams, an initialisation stream and a continuation stream. The initialisation stream contains just I and P frames, allowing you to start playing immediately. The continuation stream is intended to be the low-bitrate stream used once playback has been established.
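To make the zapping benefit concrete, here is a minimal Python sketch comparing how long a player waits for its first decodable frame when it joins mid-stream. It is a toy model, not the HESP specification: the function names, the 48-frame GOP and the assumption that the initialisation stream offers an entry point at every frame position are all illustrative.

```python
def frames_until_keyframe(join_frame: int, gop_length: int) -> int:
    """Frames a player must wait for the next keyframe in a single-stream setup.

    Keyframes are assumed to sit at frame numbers 0, gop_length, 2*gop_length, ...
    """
    return (-join_frame) % gop_length


def startup_plan(join_frame: int, gop_length: int, two_stream: bool) -> dict:
    """Describe where playback starts and which stream each frame comes from."""
    if two_stream:
        # Assumption: the initialisation stream has a decodable entry point
        # at every frame, so the player can start at the join position.
        wait = 0
        first = ("initialisation", join_frame)
    else:
        # Single stream: wait until the next keyframe boundary.
        wait = frames_until_keyframe(join_frame, gop_length)
        first = ("continuation", join_frame + wait)
    # After the first frame, playback always carries on from the continuation stream.
    return {
        "wait_frames": wait,
        "first_frame": first,
        "then": ("continuation", first[1] + 1),
    }


if __name__ == "__main__":
    for mode in (False, True):
        print("two_stream" if mode else "single_stream",
              startup_plan(join_frame=10, gop_length=48, two_stream=mode))
```

In this model, a viewer joining at frame 10 of a 48-frame GOP waits 38 frames in the single-stream case and none in the two-stream case. At an assumed 25 fps, that is roughly 1.5 seconds saved on the channel change.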

HESP uses two modes: Maximal Gain and Maximal Compatibility. Maximal Gain aims to have the lowest latency, lowest bandwidth and lowest zapping times. It has long segments with one-frame chunks, each containing a single I or P frame. The Maximal Compatibility mode, however, allows you to reuse Low-Latency DASH and LL-HLS streams and uses 6-second segments with 200 ms chunks, including B frames.
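The difference in chunk granularity between the two modes can be worked through with a little arithmetic. The sketch below uses the 6-second segment and 200 ms chunk figures quoted above. The 25 fps frame rate and the helper names are assumptions for illustration only.

```python
def chunk_duration_ms(frames_per_chunk: int, fps: float) -> float:
    """Duration of one chunk in milliseconds for a given frame rate."""
    return 1000 * frames_per_chunk / fps


def chunks_per_segment(segment_seconds: float, chunk_ms: float) -> int:
    """Number of chunks that fit in one segment."""
    return round(segment_seconds * 1000 / chunk_ms)


FPS = 25  # assumed frame rate, not stated in the talk summary

# Maximal Gain: one frame per chunk.
gain_chunk_ms = chunk_duration_ms(frames_per_chunk=1, fps=FPS)

# Maximal Compatibility: 200 ms chunks inside 6-second segments.
compat_chunk_ms = 200
compat_frames_per_chunk = round(compat_chunk_ms * FPS / 1000)
compat_chunks = chunks_per_segment(segment_seconds=6, chunk_ms=compat_chunk_ms)

if __name__ == "__main__":
    print(f"Maximal Gain chunk: {gain_chunk_ms} ms")
    print(f"Maximal Compatibility chunk: {compat_chunk_ms} ms "
          f"({compat_frames_per_chunk} frames), {compat_chunks} chunks per segment")
```

Under the 25 fps assumption, a one-frame chunk lasts 40 ms, whereas a 200 ms chunk carries five frames, with 30 such chunks per 6-second segment. A smaller chunk means less time spent waiting for a chunk to complete before it can be sent, which is one way to see why Maximal Gain targets the lowest latency.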

THEOPlayer claims 7x less delivery delay, 20x lower zapping times and a 20% bandwidth saving over CMAF, with broad compatibility across many TVs, Android, iOS, web and streaming devices.

Watch now!

Speakers

Pieter-Jan Speelmans, CTO & Founder, THEOPlayer

Johan Vounckx, Vice President, Innovation, THEOPlayer