With his usual entertaining vigour, Will Law explains the differences to the three approaches to low-latency streaming: DASH, LHLS and LL-HLS from Apple. Likening them partly to religions that all get you to the same end, we see how they differ and some of the reasons for that.

Please note: Since this video was recorded, Apple has released a new draft of LL-HLS. As described in this great article from Mux, the update’s changes are

- “Delivering shorter sub-segments of the video stream (Apple call these parts) more frequently (every 0.3 – 0.5s)

- Using HTTP/2 PUSH to deliver these smaller parts, pushed in response to a blocking playlist request

- Blocking playlist requests, eliminating the current speculative manifest request polling behaviour in HLS

- Smaller, delta rendition playlists, which reduces playlist size, which is important since playlists are requested more frequently

- Faster rendition switching, enabled by rendition reports, which allows clients to see what is happening in another playlist without requesting it in its entirety”[0]

Read the full article for the details and implications, some of which address some points made in the talk.

Furthermore, THEOplayer have released this talk explaining the changes and discussing implementation.

Anyone who saw last year’s Chunky Monkey video, will recognise Will’s near-Oscar-winning animation style as he sets the scene explaining the contenders to the low-latency streaming crown.

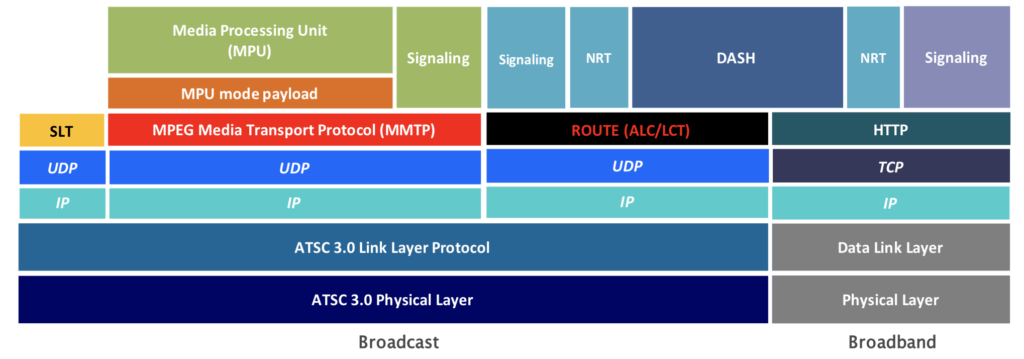

We then look at a bullet list of features across each of the three low latency technologies (note Apple’s recent update) which leads on to a discussion on chunked transfer delivery and the challenges of line-rate delivery. A simple view of the universe would say that the ideal way to have a live stream, encoded at a constant bitrate, would be to stream it constantly at that bitrate to the receiver. Whilst this is, indeed, the best way to go, when we stream we’re also keeping one eye on whether we need to change the bitrate. If we get more bandwidth available it might be best to upgrade to a better quality and if we suddenly have contested, slow wifi, it might be time for an emergency drop down to the lowest bitrate stream.

When you are delivered a stream as individual files, you can measure how long they take to download to estimate your available bandwidth. If a file can be downloaded at 1Gbps, then it should always arrive at 1Gbps. Therefore if it arrives at less than 1Gbps we know that there is a bandwidth restriction and can make adjustments. Will explains that for streams delivered with chunked transfer or in real time such as in LL-HLS, this estimation no longer works as the files simply are never available at 1Gbps. He then explains some of the work that has been undertaken to develop more nuanced ways of estimating available bandwidth. It’s well worth noting that the smaller the files you transfer, the less accurate the bandwidth estimation as TCP takes time to speed up to line rate so small 320ms-length video segments are not ideal for maximising throughput.

Continuing to look at the differences, we next look at request rates with DASH at 20 requests per second compared to LL-HLS at 720. This leads naturally to an analysis of the benefits of HTTP/2 PUSH technology used in LL-HLS and the savings that can offer. Will explores the implications, and some of the problems, with last year’s version of the LL-HLS spec, some of which have been mitigated since.

The talk concludes with some work Akamai has done to try and establish a single, common workflow with examples and a GitHub repository. Will shows how this works and the limitations of the approach and finishes with a look at the commonalities in approaches.

Watch now!

Speakers

|

Will Law Chief Architect, Akamai |