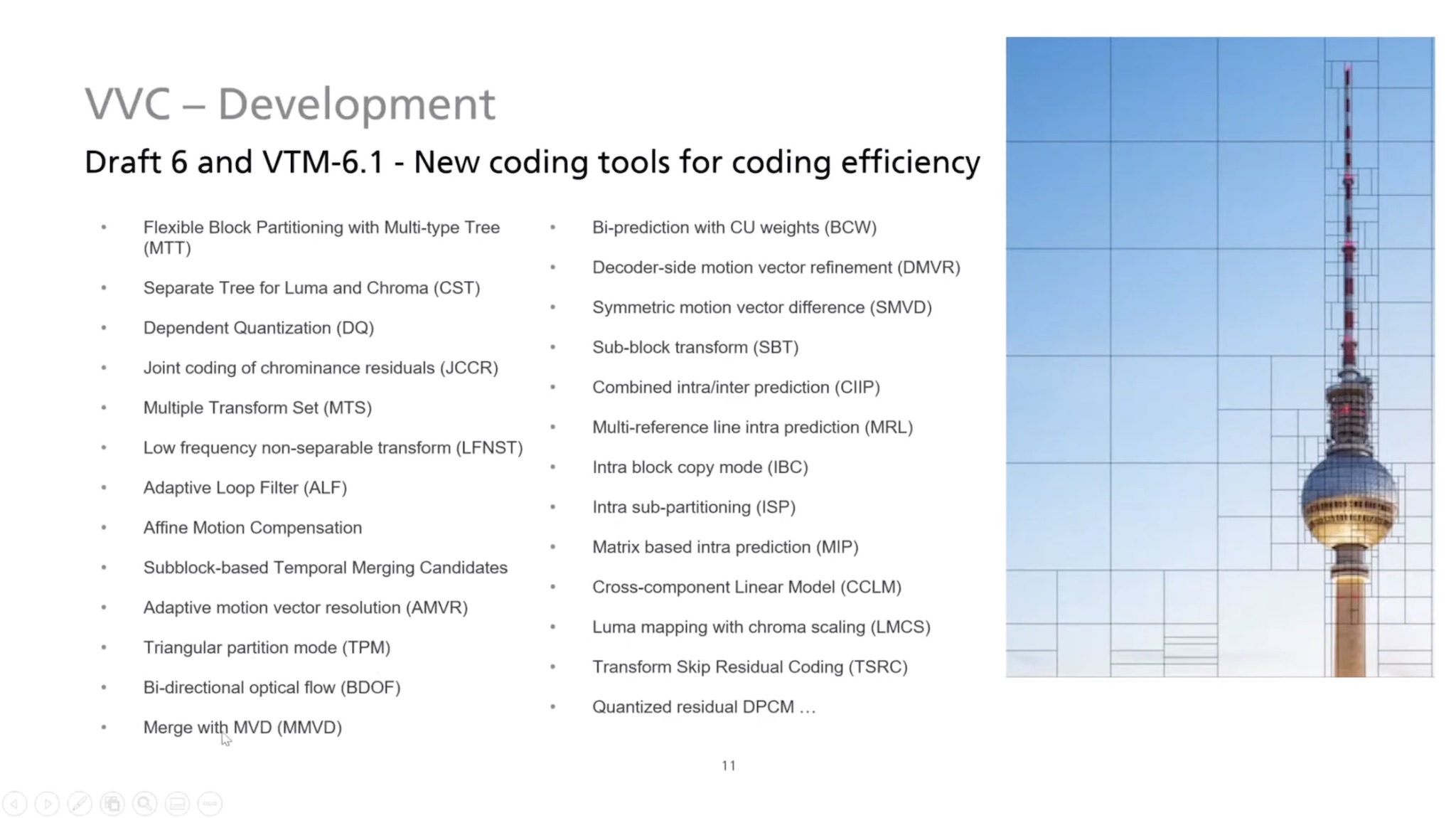

As we saw in this week’s AV1 panel, AV1 encoding times have dropped into a practical range and the codec is starting to gain traction. One of its key differentiators, shared only with VVC, is the inclusion by default of tools aimed at encoding screens and computer graphics rather than natural video.

Zoe Liu, CEO of Visionular, talks at RTE2020 about these special abilities of AV1 to encode screen content. The video starts with a refresher on AV1 in general: its arrival on the scene from the Alliance for Open Media and the en/decoder ecosystem around it, such as SVT-AV1, which we talked about two days ago, dav1d, rav1e etc., as well as a look at the hardware encoders being readied from the likes of Samsung.

Turning her focus to screen content, Zoe explains that it differs from natural video for a number of reasons. For content like this presentation, much of the video stays static most of the time, then there is a peak as the slide changes. This gives rise to the idea of allowing variable frame rates, but also of optimising for the depth of the colour palette. Motion on screens can be smoother and also shows more distinct, repeated patterns, such as identical letters. This seems to paint a very specific picture of what screen content is, when we all know that it’s highly variable and usually has mixed uses. However, having tools to capture these situations as they arise is critical for the times when it matters, and it’s these coding tools that Zoe highlights next.
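To make the variable frame rate idea concrete, here is a minimal sketch that drops captured frames which are identical to the previous one and keeps a timestamp for each survivor. The `Frame` type, the `drop_static_frames` name and the exact-equality test are assumptions for illustration only; they are not taken from the talk or from any AV1 encoder.

```python
from typing import List, Tuple

# Hypothetical frame type: one luma plane as rows of pixel values.
Frame = List[List[int]]


def drop_static_frames(frames: List[Frame], fps: float) -> List[Tuple[float, Frame]]:
    """Keep only frames that differ from the last kept frame.

    Returns (timestamp_in_seconds, frame) pairs, so long static stretches
    collapse to a single entry and the output has a variable frame rate.
    """
    kept: List[Tuple[float, Frame]] = []
    for index, frame in enumerate(frames):
        if not kept or frame != kept[-1][1]:
            kept.append((index / fps, frame))
    return kept


# Two "slides": the first is shown for four captured frames, the second for two.
slide_a = [[0, 0], [255, 255]]
slide_b = [[255, 0], [0, 255]]
capture = [slide_a] * 4 + [slide_b] * 2  # six frames captured at 2 fps

timeline = drop_static_frames(capture, fps=2.0)
print([timestamp for timestamp, _ in timeline])  # [0.0, 2.0]
```

A real capture pipeline would likely compare frames with a tolerance rather than exact equality, since cursor blinks or dithering can make otherwise static frames differ slightly.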

One common technique is to partition the screen into variable-sized blocks, and AV1 brings more partition shapes than HEVC. Motion compensation has been the mainstay of MPEG encoding for a long time. AV1 also uses motion compensation and, for the first time, brings in motion models which allow for rotation and zooming. Zoe explains the different modes available, including compound motion modes, of which there are 128.
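The difference between a plain motion vector and a model that can express rotation and zoom can be sketched with a generic six-parameter affine mapping. This is only an illustration: the `Affine` tuple and the helper names are hypothetical, and the floating-point maths below is not AV1's actual warped-motion syntax or arithmetic.

```python
import math
from typing import Tuple

# Hypothetical 6-parameter affine model (a, b, c, d, e, f):
# (x, y) maps to (a*x + b*y + c, d*x + e*y + f).
Affine = Tuple[float, float, float, float, float, float]


def translation(dx: float, dy: float) -> Affine:
    """Classic motion vector: every pixel moves by the same offset."""
    return (1.0, 0.0, dx, 0.0, 1.0, dy)


def zoom(scale: float) -> Affine:
    """Scale about the origin: displacement grows with distance from it."""
    return (scale, 0.0, 0.0, 0.0, scale, 0.0)


def rotation(degrees: float) -> Affine:
    """Rotate about the origin by the given angle."""
    r = math.radians(degrees)
    return (math.cos(r), -math.sin(r), 0.0, math.sin(r), math.cos(r), 0.0)


def warp(model: Affine, x: float, y: float) -> Tuple[float, float]:
    """Apply the affine model to a single pixel position."""
    a, b, c, d, e, f = model
    return (a * x + b * y + c, d * x + e * y + f)


print(warp(translation(3, -1), 2, 2))  # (5.0, 1.0)
print(warp(zoom(2.0), 4, 4))           # (8.0, 8.0)
```

With `translation`, every pixel in a block shares one offset, whereas with `zoom` or `rotation` the offset depends on the pixel's position, which is what a single translational vector per block cannot describe.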

Capitalising on the repetitive nature screen content can have, Intra Block Copy (IntraBC) is a technique which copies part of a frame to other parts of the same frame. Similar to motion vectors, which point to other frames, this helps replication within the frame. The copy is used as part of the prediction, so it can still be modified before the decode is finished, allowing for small variations. Palette Mode codes a block using a small set of colours, and CfL (Chroma from Luma) is a predictor for colour based on the luma signal and some signalling from the encoder.
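The idea behind IntraBC can be sketched as a block search inside the current frame rather than in a reference frame. The code below is a simplified illustration under stated assumptions: the function names are hypothetical, the search is exhaustive, and only candidates lying entirely above the current block are considered, which is far looser than the restrictions a real AV1 encoder has to respect.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # hypothetical: one plane as rows of pixel values


def block_at(frame: Frame, top: int, left: int, size: int) -> Frame:
    """Extract a size x size block whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in frame[top:top + size]]


def sad(a: Frame, b: Frame) -> int:
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


def find_intra_copy(
    frame: Frame, top: int, left: int, size: int
) -> Optional[Tuple[Tuple[int, int], int]]:
    """Find the best match for the block at (top, left) within the same frame.

    Simplification: only candidates entirely above the current block are
    searched, standing in for "already reconstructed" pixels.
    Returns ((dy, dx), sad) for the best candidate, or None if none exists.
    """
    target = block_at(frame, top, left, size)
    width = len(frame[0])
    best: Optional[Tuple[Tuple[int, int], int]] = None
    for cand_top in range(0, top - size + 1):
        for cand_left in range(0, width - size + 1):
            cost = sad(target, block_at(frame, cand_top, cand_left, size))
            if best is None or cost < best[1]:
                best = ((cand_top - top, cand_left - left), cost)
    return best


# The same 2x2 "glyph" appears at the top right and again at the bottom left.
screen = [
    [0, 0, 9, 1, 0, 0],
    [0, 0, 1, 9, 0, 0],
    [9, 1, 0, 0, 0, 0],
    [1, 9, 0, 0, 0, 0],
]
print(find_intra_copy(screen, top=2, left=0, size=2))  # ((-2, 2), 0)
```

A cost of 0 means the earlier glyph predicts the block exactly. When the match is close but not exact, the remaining difference would be coded as a residual, which is how small variations on a copied pattern can still be represented.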

Zoe highlights two areas where screen content reacts badly to encoding tools that are normally beneficial. One is temporal filtering, which is usually associated with 8% gains in efficiency at the encoder, but which can make motion vectors much more complicated in screen content and hurt compression efficiency. Similarly, when partitioning, smaller sizes often work well for natural video, but the opposite is true for screen content.

The talk finishes with Zoe explaining how Visionular’s own AV1 implementation performed on standardised 4K against other implementations, their implementation of scalable video coding for RTC and the overall compression improvements.

Zoe Liu also contributed to this more detailed overview

Watch now!

Speaker

Zoe Liu, CEO, Visionular