Workflows changed when we moved from tapes to files, so it makes sense that they will change again as they increasingly move to the cloud. What if they changed to work in the cloud but also benefited on-prem workflows at the same time? The work at MovieLabs aims to do just that. In the cloud, infrastructure can be dynamic and needs to work between companies, so data must be able to flow between them easily and in an automated way. If this can be achieved, the amount of human interaction can be reduced and focused on the creative parts of content making.

This panel from a SMPTE Hollywood Section meeting, moderated by Greg Ciaccio of ETC, discusses the work underway. First off, Jim Helman from MovieLabs gives an overview of the 2030 Vision. It was kicked off at IBC 2019 with the publication of ‘Evolution of Media Creation’, which laid out 10 principles for building the future of production, covering topics like realtime engines, remote working, cloud deployments, security and access, and software-defined workflows (SDWs). This was followed by a white paper, ‘The Evolution of Production Security’, which laid out the need for a new, zero-trust approach to security. Then, in 2020, the jointly run industry lab released ‘The Evolution of Production Workflows’, which talked about SDWs.

“A software-defined workflow (SDW) uses a highly configurable set of tools and processes to support creative tasks by connecting them through software-mediated collaboration and automation.”

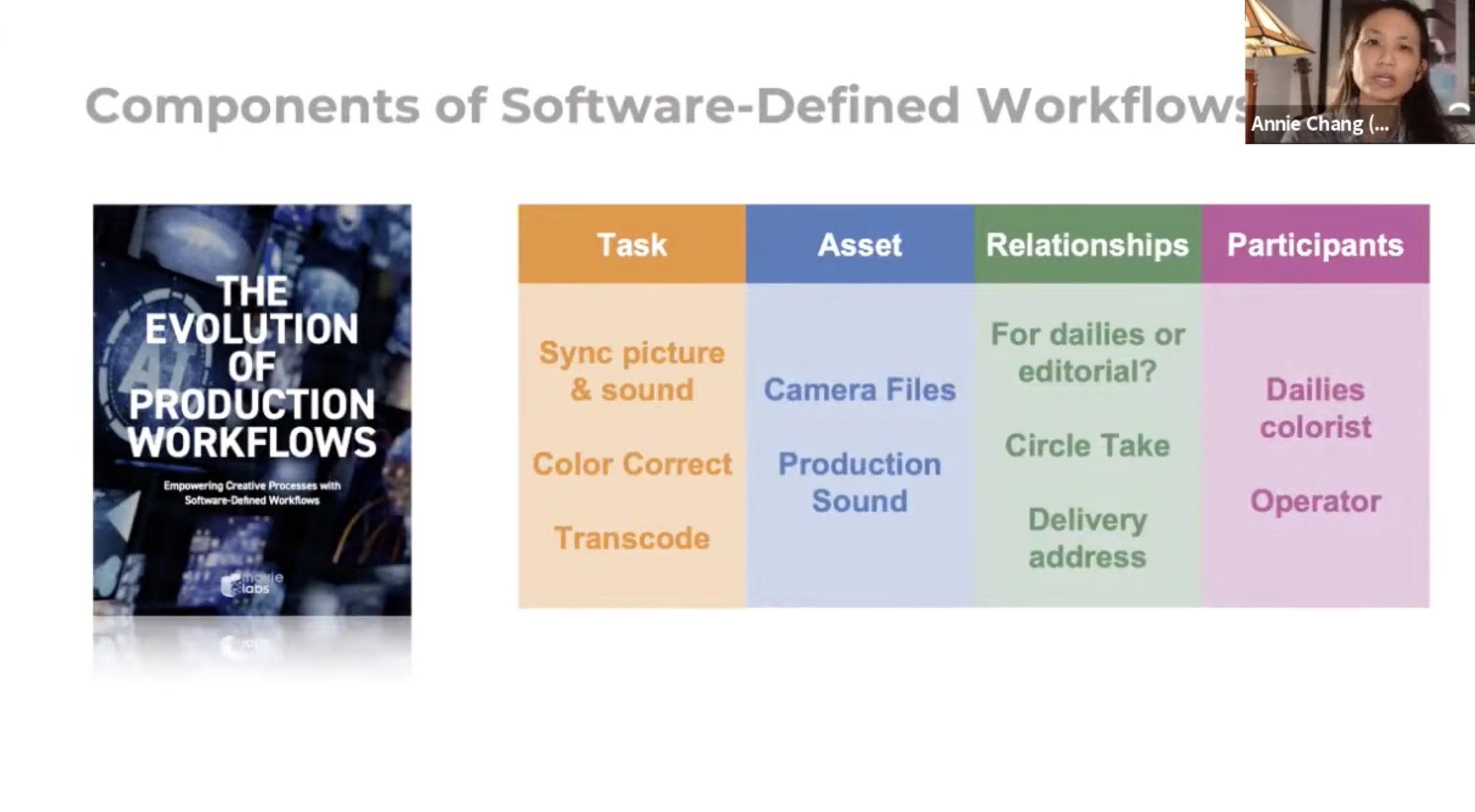

Source: Annie Chang, Universal Pictures

This SDW thread of the MovieLabs 2030 Vision aims to standardise workflows at the data-model level and, in the future, at the API level, so that data can be easily exchanged and understood. Annie Chang from Universal Pictures explains that the ‘The Evolution of Production Workflows’ publication deals with Tasks, Assets, Relationships and Participants. If you can define your work in terms of these four concepts, you have enough information for computers to understand the workflows and external data.

This leads to the idea of building each scene as an object model that shows the relationships between its elements. The data would describe key props and stage elements (for instance, if they were important for VFX), actors and their metadata, technical information such as colour correction and camera files, and production staff.
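To make the idea concrete, here is a minimal sketch of a scene held as a small graph of entities and named relationships. It is not the MovieLabs data model: the class names, the `kind` values, the relationship labels and the choice to treat the scene itself as a node are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Entity:
    """A node in the production graph."""
    entity_id: str
    kind: str   # "task", "asset", "participant" or "scene" (illustrative values)
    name: str


@dataclass
class Production:
    entities: dict = field(default_factory=dict)
    relationships: list = field(default_factory=list)  # (source, label, target)

    def add(self, entity_id, kind, name):
        self.entities[entity_id] = Entity(entity_id, kind, name)

    def relate(self, source, label, target):
        # Refuse links to unknown entities so the graph stays consistent.
        for entity_id in (source, target):
            if entity_id not in self.entities:
                raise KeyError(f"unknown entity: {entity_id}")
        self.relationships.append((source, label, target))

    def linked_to(self, target, label=None):
        """Entities that point at `target`, optionally filtered by label."""
        return [
            self.entities[source]
            for (source, rel_label, rel_target) in self.relationships
            if rel_target == target and (label is None or rel_label == label)
        ]


# Hypothetical scene: every identifier and label below is made up.
prod = Production()
prod.add("scene-12", "scene", "Scene 12")
prod.add("prop-sword", "asset", "Hero sword")
prod.add("cam-a-0042", "asset", "Camera A, clip 42")
prod.add("actor-1", "participant", "Lead actor")
prod.add("grade-12", "task", "Colour-correct scene 12")

prod.relate("prop-sword", "appears_in", "scene-12")
prod.relate("cam-a-0042", "captures", "scene-12")
prod.relate("actor-1", "performs_in", "scene-12")
prod.relate("grade-12", "applies_to", "scene-12")

for entity in prod.linked_to("scene-12"):
    print(entity.kind, entity.name)
```

Because the relationships are data rather than folder conventions, a question such as "which camera files belong to this scene?" becomes a query, here `prod.linked_to("scene-12", "captures")`.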

Once modelled, a production can be viewed in many ways. For instance, the head of a studio may just be interested in high-level stories, the people involved and distribution routes, whereas a VFX producer would have a different perspective, needing more detail about the scene. A VFX asset curator, on the other hand, would just need to know about the shots, down to the filenames and storage locations. This work promises to allow all of these views of the same, dynamic data. So it not only improves the portability of workflows between vendor systems but is also a way of better organising any workflow, irrespective of automation. DreamWorks is currently working this way, with the aim of trying it out on live-action projects soon.
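The three perspectives described above can be sketched as filters over one shared set of records. This is only an illustration: the record fields, the role names, the storage location and the choice of which kinds each role sees are assumptions, not something taken from the MovieLabs publications.

```python
# Hypothetical records; in practice these would come from the shared production data.
RECORDS = [
    {"kind": "story", "name": "Act 1: the heist"},
    {"kind": "participant", "name": "Director"},
    {"kind": "distribution", "name": "Theatrical release"},
    {"kind": "scene", "name": "Scene 12"},
    {"kind": "shot", "name": "Shot 12-030"},
    {"kind": "file", "name": "sc12_sh030_v004.exr", "location": "bucket-a/vfx/"},
]

# Which kinds of record each role cares about (an assumed mapping).
VIEWS = {
    "studio_head": {"story", "participant", "distribution"},
    "vfx_producer": {"scene", "shot", "participant"},
    "vfx_asset_curator": {"shot", "file"},
}


def view(records, role):
    """Return the subset of the shared records that is relevant to `role`."""
    wanted = VIEWS[role]
    return [record for record in records if record["kind"] in wanted]


for role in VIEWS:
    print(role, [record["name"] for record in view(RECORDS, role)])
```

Every role reads from the same `RECORDS` list, so a change made once is reflected in all of the views.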

Annie finishes by explaining that there are efficiencies to be had in better organising assets. It will help reduce duplicates, both by uncovering duplicate files and by stopping duplicate assets from being produced. AI and similar technology will be able to sift through the information to create clips, uncover trivia and, with other types of data mining, create better outputs for inclusion in viewer content.
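One common way to uncover duplicate files, offered here as an assumption rather than anything the panel described, is to group files by a hash of their content. The sketch below works on an in-memory mapping of made-up paths to bytes so that it runs on its own. It only finds byte-identical copies, not re-encoded or re-rendered versions of the same asset.

```python
import hashlib
from collections import defaultdict


def find_duplicates(files):
    """Group paths whose content is byte-for-byte identical.

    `files` maps a path to that file's bytes. Returns a sorted list of
    sorted path groups, one group per set of identical files.
    """
    by_digest = defaultdict(list)
    for path, data in files.items():
        by_digest[hashlib.sha256(data).hexdigest()].append(path)
    return sorted(sorted(paths) for paths in by_digest.values() if len(paths) > 1)


# Hypothetical paths and contents.
example_files = {
    "vendor-a/sc12_sh030.exr": b"pixels-v4",
    "vendor-b/incoming/sc12_sh030.exr": b"pixels-v4",
    "vendor-a/sc12_sh031.exr": b"other-pixels",
}

print(find_duplicates(example_files))
```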

Sony Pictures’ Daniel De La Rosa then talks about the Avid platform in the cloud that they built in response to the Covid crisis, and how the cloud infrastructure was built in order of need and, often, based on existing solutions which were scaled up. Daniel makes the point that working in the cloud is different because it’s “bringing the workflows to the data”, as opposed to the ‘old way’ where the data was brought to the machine. In fact, cloud or otherwise, with the globalisation of production there isn’t any way of doing things the ‘old way’ any more.

This reliance on the cloud (and, to be clear, Daniel talks of multi-cloud working within the same production) prompts a change in the security model employed. Previously, a security perimeter would be set up around a location, say a building or department, to keep the assets held within it safe. They could then be securely transferred to another party who had their own perimeter. Now, when assets are in the cloud, they may be accessed by multiple parties. This may not always happen simultaneously, but over the life of the asset it will be true. Security perimeters can be made to work in the cloud, but they don’t offer the fine-grained control that’s needed: on a file-by-file basis, you need to be able to restrict the type of access as well as who has access. Moreover, as workflows are flexible, these security controls need to be modified throughout the project and, often, by the software-defined workflows themselves without human intervention. There is plenty of work to do to make this vision a reality.
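As a rough sketch of what file-by-file control might look like, the example below keeps a deny-by-default table of which party may perform which action on which asset, and lets workflow steps grant and revoke access as tasks start and finish. The class, the function names, the party and file names and the `"read"`/`"write"` actions are all hypothetical. This is not a description of the MovieLabs security architecture or of any product.

```python
from collections import defaultdict


class AccessPolicy:
    """Deny-by-default permissions keyed by (party, asset)."""

    def __init__(self):
        self._grants = defaultdict(set)

    def grant(self, party, asset, action):
        self._grants[(party, asset)].add(action)

    def revoke(self, party, asset, action):
        self._grants[(party, asset)].discard(action)

    def is_allowed(self, party, asset, action):
        # Anything not explicitly granted is refused.
        return action in self._grants.get((party, asset), set())


def start_task(policy, party, assets):
    """Workflow step: give the assigned party read access to just these assets."""
    for asset in assets:
        policy.grant(party, asset, "read")


def finish_task(policy, party, assets):
    """Workflow step: withdraw that access once the task is complete."""
    for asset in assets:
        policy.revoke(party, asset, "read")


policy = AccessPolicy()
shots = ["sc12_sh030.exr"]

start_task(policy, "vfx-vendor", shots)
print(policy.is_allowed("vfx-vendor", "sc12_sh030.exr", "read"))
print(policy.is_allowed("vfx-vendor", "sc12_sh030.exr", "write"))
```

The point of the sketch is that the permissions change as a side effect of the workflow moving forward, with no person editing an access list by hand.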

The Q&A section covers feedback from the industry on these proposals and specifications, the fact that they will be openly accessible, and a question on the costs of moving into the cloud. On the latter topic, Daniel said that although costs do increase, they are offset when you drop on-premise costs such as rent and utilities. Tiered storage costs in the cloud will be managed by the workflows themselves, just as MAMs currently manage asset distribution between online, near-line and LTO storage.
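A workflow-driven tiering rule could be as simple as the sketch below, which picks a storage tier from how long an asset has been idle and whether an open task still needs it. The thresholds, the tier names and the `needed_by_open_task` flag are assumptions for illustration. Real policies would also weigh retrieval cost and delay.

```python
from datetime import date

# Hypothetical thresholds, in days since the asset was last used.
NEARLINE_AFTER_DAYS = 30
ARCHIVE_AFTER_DAYS = 180


def choose_tier(last_used, today, needed_by_open_task=False):
    """Pick a storage tier for an asset.

    An asset that an open task still needs stays online no matter how
    long it has been idle. Otherwise the idle time decides.
    """
    if needed_by_open_task:
        return "online"
    idle_days = (today - last_used).days
    if idle_days >= ARCHIVE_AFTER_DAYS:
        return "archive"
    if idle_days >= NEARLINE_AFTER_DAYS:
        return "near-line"
    return "online"


today = date(2021, 1, 10)
print(choose_tier(date(2021, 1, 1), today))
print(choose_tier(date(2020, 11, 1), today))
print(choose_tier(date(2020, 1, 1), today))
```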

The session finishes with a discussion of how SDWs will help with automation and with spotting problems, current gaps in cloud workflow tech (12-bit colour grading and review, to name but one) and VFX workflows.

Watch now!

Speakers

Jim Helman, MovieLabs CTO

Daniel De La Rosa, Sony Pictures’ Vice President of Post Production, Technology Development

Annie Chang, Universal Pictures Vice President of Creative Technologies

Moderator: Greg Ciaccio, ASC Motion Imaging Technology Council Workflow Chair; EP and Head of Production Technology & Post, ETC at University of Southern California