Even before the pandemic, post-production was moving into the cloud. With the mantra of bringing your apps to the media, remote working was coming to offices, and now it’s also coming to homes. As with any major shift in an industry, it will suit some people earlier than others. While we’re in this transition, it’s worth taking some time to ask why people are doing this, why some are not, and what problems are still left unsolved. For a wider context on the move to remote editing, watch this video from IET Media.

This video sees Key Code Media CTO, Jeff Sengpiehl, talking to BeBop Technology’s Michael Kammes, Ian McPherson from AWS and Ian Main from Teradici. After laying out the context for the discussion, Jeff asks the panel why consumer cloud solutions aren’t suitable for professionals. Michael answers first, pointing to consumer screen-sharing solutions, which are optimised for getting a task done and don’t offer the fidelity and consistency you need for media workflows. When it comes to storage at the consumer level, cost usually prevents investment in the hardware that would provide the low-latency, high-capacity storage needed for many professional video formats. Ian then adds that security plays a much bigger role in professional workflows: the moment you bring some assets down to your PC, you’re extending the security boundary into your consumer software and into your house.

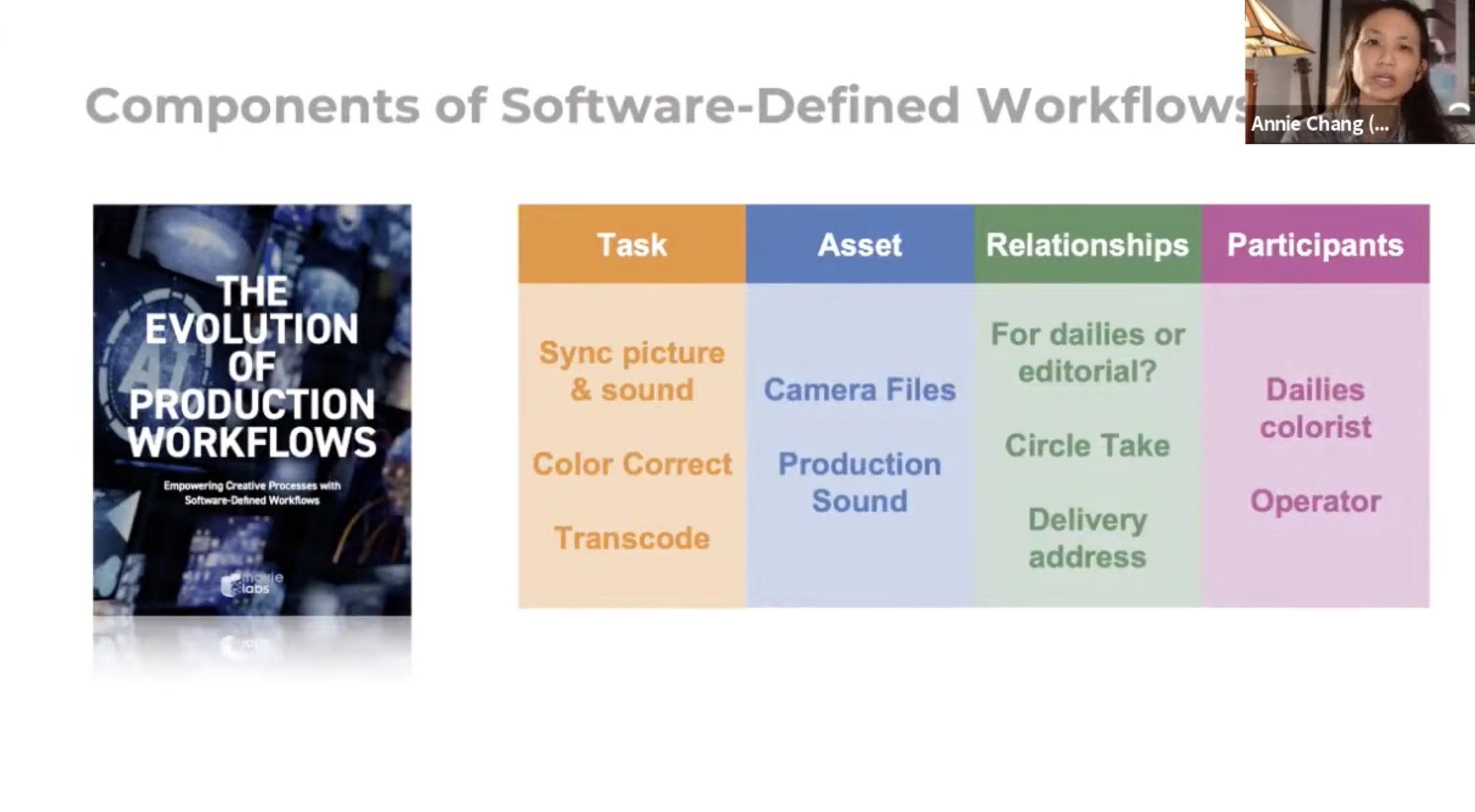

The security topic features heavily in this conversation. Michael talks about the Trusted Partner Network, which is working on a security specification that, it is hoped, will become a ‘standard’ everyone can work to in order to show that a product or solution is secure. The aim is to stop every company having its own thick document detailing its security needs. Today, that means each vendor has to certify itself time and time again against demands which are similar, but which are all articulated differently and so have to be answered differently. Ian explains that cloud providers like AWS provide better physical security than most companies could manage and offer security tools for customers to secure their solutions. Many are hoping to form their workflows around the MovieLabs 2030 vision, which recommends ways to move content through the supply chain with security and auditing in mind.

“What’s stopping people from adopting the cloud for post-production?” asks Jeff. Cost is one reason people are adopting the cloud and one reason others aren’t. Not dissimilar to the ‘IP’ question in other parts of the supply chain, at this point in the technology’s maturity the cost savings are most tangible to bigger companies or those with particularly high demands for flexibility and scalability. For a smaller operation, there may simply not be enough benefit to justify the move, particularly as it would mean adopting tools that take time to learn and so, even if only temporarily, slow down an editor’s ability to deliver a project in the time they’re used to. On top of that, there’s the issue of cost uncertainty. It’s easy to say how much storage will cost in the cloud, but when you’re using dynamic amounts of computation and moving data in and out of the cloud, estimating your costs becomes difficult. In a conservative industry, this uncertainty can be part of what blocks adoption.
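To make the cost-uncertainty point concrete, here is a minimal sketch of a monthly estimate in which storage is fixed but compute hours and data egress are only known as a range. The `Rates` fields, the function names and every number used are hypothetical placeholders for illustration, not real pricing from any cloud provider.

```python
from dataclasses import dataclass


@dataclass
class Rates:
    """Hypothetical unit prices (assumed for illustration, not real pricing)."""
    storage_per_tb_month: float  # cost to keep 1 TB stored for a month
    compute_per_hour: float      # cost of one workstation/render hour
    egress_per_tb: float         # cost to move 1 TB out of the cloud


def monthly_cost(rates, stored_tb, compute_hours, egress_tb):
    """Sum the three cost components for one month."""
    return (rates.storage_per_tb_month * stored_tb
            + rates.compute_per_hour * compute_hours
            + rates.egress_per_tb * egress_tb)


def cost_range(rates, stored_tb, compute_hours_range, egress_tb_range):
    """Return (low, high) monthly cost when compute and egress are uncertain."""
    low = monthly_cost(rates, stored_tb,
                       compute_hours_range[0], egress_tb_range[0])
    high = monthly_cost(rates, stored_tb,
                        compute_hours_range[1], egress_tb_range[1])
    return low, high


if __name__ == "__main__":
    rates = Rates(storage_per_tb_month=20.0,
                  compute_per_hour=2.0,
                  egress_per_tb=50.0)
    low, high = cost_range(rates, stored_tb=10,
                           compute_hours_range=(100, 400),
                           egress_tb_range=(1, 8))
    print(f"Estimated monthly cost: {low:.2f} to {high:.2f}")
```

With these made-up inputs the storage line is the same in both cases, and the whole spread between the low and high figures comes from the compute and egress terms, which is the uncertainty the panel describes.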

Taking questions from the audience, Ian outlines some of the ways to get terabytes of media into the cloud quickly, whilst Michael explains his approach of editing with proxies, either just to get you started or for the whole process. Conforming to local, hi-res media may still make sense outside the cloud, or you may have time to upload the hi-res files whilst the project is underway. There’s a brief discussion on the growing availability of Macs for cloud workflows, and another about how difficult, but possible, it is to have a high-quality monitoring feed on a good monitor even if your workstation is totally remote.
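As a rough illustration of why proxies help you get started sooner, the sketch below estimates how long an upload takes over a given link. The function name, the 80% link-efficiency figure and the example sizes are assumptions made for this example and do not come from the panel.

```python
def upload_hours(size_tb, link_mbps, efficiency=0.8):
    """Estimate upload time in hours.

    size_tb    -- amount of media in terabytes (decimal, 1 TB = 1e12 bytes)
    link_mbps  -- nominal uplink speed in megabits per second
    efficiency -- assumed fraction of the link actually achieved (hypothetical)
    """
    bits = size_tb * 1e12 * 8
    effective_bits_per_second = link_mbps * 1e6 * efficiency
    return bits / effective_bits_per_second / 3600


if __name__ == "__main__":
    # Hypothetical project: 5 TB of hi-res media, 0.25 TB of proxies.
    for label, size_tb in (("hi-res", 5.0), ("proxies", 0.25)):
        hours = upload_hours(size_tb, link_mbps=1000)
        print(f"{label}: about {hours:.1f} hours on a 1 Gb/s link")
```

Under these assumptions the proxies arrive in a fraction of the time the full-resolution media needs, so editing can begin while the larger upload continues in the background.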

Watch now!

Speakers

Ian Main, Technical Marketing Principal, Teradici Corporation

Ian McPherson, Head of Global Business Development, Media Supply Chain

Michael Kammes, VP Marketing & Business Development, BeBop Technology

Jeff Sengpiehl, CTO, Key Code Media