WebRTC is now a W3C standard providing sub-second peer-to-peer video and audio streaming with NAT traversal. Widely used for video conferencing, its sub-second latency has also been the focus of video streaming companies such as Millicast and Limelight (to name but two) who aim to deliver this otherwise peer-to-peer technology to thousands or millions of people in under a second enabling interactive video, gamefied streams, auctions and ultra-low-latency sports.

Addressing directly people using other streaming protocols, Pion creator Sean DuBois spoke at SF Video Tech about what WebRTC brings over and above protocols like RTMP, SRT and RIST. At the heart of it, WebRTC, like SRT and RIST, creates a connection over which it can send a variety of data. Whilst we expect media to be sent, actually, file transfer can be easily achieved – let’s not forget the whole of SRT is build upon UDT which is specifically a file delivery utility. Where file transfer can be achieved, so can real-time data & metadata transfer.

Sean quickly summarises WebRTC as a Protocol between (typically) browsers, an peer-to-peer secure connection over which multiple audio & video streams can flow. In common with RIST and other recent protocols, it’s based on many pre-existing

technologies such as SRTP, DTLS, ICE and SDP to deliver signalling, connection management, encryption and communication.

The list of improvements over RTMP is very long. They’re spelt out concisely in the video so we will highlight just a few here. Importantly, low-latency is key. RTMP was low-latency for its time, but not by today’s standards. Google’s Stadia can boast 125ms video latency for a keypress, explains Sean. DTLS and SRTP are essential for security but are well understood, trusted methods of securing your data. DTLS is pretty much exactly the same as the TLS which secures your bank transfers, just moved into UDP instead of TCP. However, WebRTC can work by exchanging ‘fingerprints’ (DTLS-SRTP) instead of the full trusted certificate infrastructure that underpins TLS on the web. Removing the requirement for certs is a big boost for flexibility and agility as long as you are confident you can exchange fingerprints securely ahead of time.

NAT traversal is also a big boon where, even with both endpoints behind a firewall, endpoints can always find a way to communicate although this does mean that ICE servers are needed to facilitate connectivity. Within broadcasting, however, it’s more likely that you’ll have control of one end so this is less needed. Sean highlights the ability to send multiple quality levels within the same stream using the ‘simulcast’ ability of WebRTC.

Sean then looks at SRT and RIST. Both of these are low-latency streaming protocols which can, both, also provide sub-second streaming for good connections with a relatively low RTT. Sean highlights the lack of SRT and RIST to negotiate the codec in use and their optional security. Being focused more on delivering contribution feeds, they tend to have a more static configuration often created after a programme of testing to ensure the quality will be acceptable to the broadcaster/streaming provider.

To finish, Sean highlights a whole series of interesting, innovative uses of WebRTC from informal group streaming to drones to shared online games to file transfers and more.

Watch now!

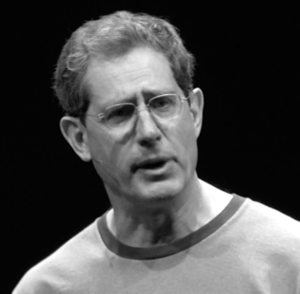

Speaker

|

Sean DuBois Developer, Apple Creator of Pion WebRTC |