Many of today’s bottlenecks in video processing relate to bandwidth, but most codecs that solve this problem need a lot of compute power, add a lot of latency, or both. For those who work with high-quality video, such as inside cameras and in TV studios, what’s really needed is a ‘zero’ latency codec that keeps video visually lossless while dropping the data rate from gigabits to megabits. This is what JPEG XS does, and Jean-Baptiste Lorent joined the NVIDIA GTC21 conference to explain why this is so powerful.

JPEG XS was created by intoPIX, a company active not only in compression intellectual property but also in standards bodies such as JPEG, MPEG, ISO, SMPTE and others, and it is one of the latest technologies the company has brought to market. Lorent explains that it is designed for two jobs. The first is to live inside equipment, compressing video as it moves between parts of a device such as a phone, where it enables higher resolutions and minimises energy use. The second is to drive down bandwidth between equipment in media workflows. We’ve featured case studies of JPEG XS in broadcast workflows previously.

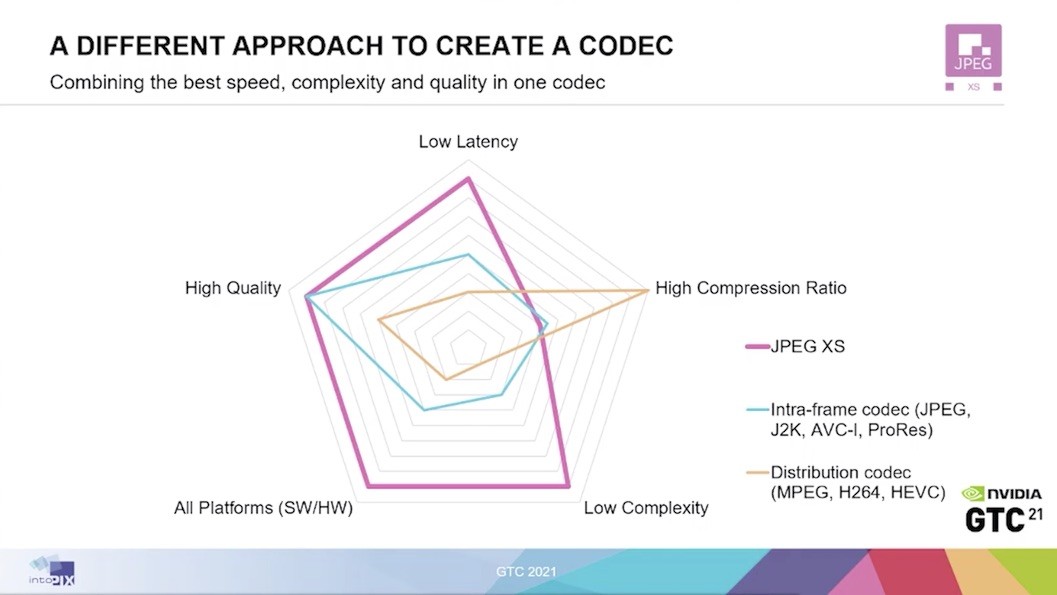

The XS in JPEG XS stands for Xtra Small, Xtra Speed, which underlines how the technology approaches compression differently from MPEG, AV1 and similar codecs. As discussed in this interview, the codec market is maturing and codecs are now exploiting benefits other than pure bitrate. Nowadays, we need codecs that make it easy for AI/ML algorithms to access video quickly, and low-complexity codecs for embedded devices, from old set-top boxes to new devices like body cams. We also need ultra-low-delay codecs, with an encode delay measured in microseconds rather than milliseconds, so that even multiple encodes seem instantaneous. JPEG XS is unique in delivering the last of these.
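To put ‘microseconds, not milliseconds’ in perspective, low-latency codecs such as JPEG XS are usually described as working on a few lines of video at a time rather than on whole frames. The sketch below converts a buffering delay measured in lines into time. It assumes a 1080p60 raster with 1125 total lines per frame (active lines plus blanking) and a hypothetical 10-line buffer; the number of lines JPEG XS actually needs depends on its configuration, so the figures are illustrative only, and the function names are made up for this example.

```python
# Illustrative sketch: convert a line-based buffering delay into microseconds.
# Assumption: 1080p60 with 1125 total lines per frame (1080 active + blanking).
# The 10-line buffer is a hypothetical figure, not a value from the JPEG XS standard.

def line_period_us(total_lines_per_frame: int, frames_per_second: float) -> float:
    """Duration of one video line in microseconds, blanking included."""
    return 1_000_000 / (total_lines_per_frame * frames_per_second)


def buffering_delay_us(lines_buffered: int,
                       total_lines_per_frame: int,
                       frames_per_second: float) -> float:
    """Delay added by holding `lines_buffered` lines before they can be output."""
    return lines_buffered * line_period_us(total_lines_per_frame, frames_per_second)


if __name__ == "__main__":
    TOTAL_LINES = 1125  # 1080p raster including vertical blanking
    FPS = 60.0

    line_based = buffering_delay_us(10, TOTAL_LINES, FPS)            # hypothetical 10-line buffer
    frame_based = buffering_delay_us(TOTAL_LINES, TOTAL_LINES, FPS)  # one whole frame

    print(f"10-line buffer: {line_based:.1f} us")   # 10-line buffer: 148.1 us
    print(f"1-frame buffer: {frame_based:.1f} us")  # 1-frame buffer: 16666.7 us
```

Even with this assumed 10-line buffer the delay stays in the low hundreds of microseconds, whereas buffering a single frame at 60 fps already costs about 16.7 ms.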

JPEG XS delivers visually lossless results at compression ratios of up to 20:1, although most users are expected to run it at around 10:1. At these ratios, uncompressed HD 1080i comes down from 1.5Gbps to around 200Mbps, and a 76Gbps signal can be brought down to 5Gbps or less. Lorent explains that the maths in the algorithm has low complexity and is highly parallelisable, a key benefit on modern CPUs with many cores. Just as important for GPU and FPGA implementations, it doesn’t need external memory and uses little logic.
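As a back-of-the-envelope check, the bitrates in the paragraph above can be turned back into compression ratios. This sketch uses only those quoted figures; the function names are illustrative. At exactly 10:1, 1.5Gbps becomes 150Mbps, so ‘around 200Mbps’ corresponds to roughly 7.5:1, while 76Gbps down to 5Gbps is about 15:1. Both sit inside the up-to-20:1 visually lossless range.

```python
# Back-of-the-envelope check of the bitrates quoted in the text.
# Function names are illustrative; the inputs are the figures from the article.

def compressed_bps(uncompressed_bps: float, ratio: float) -> float:
    """Output bitrate when compressing at `ratio`:1."""
    return uncompressed_bps / ratio


def implied_ratio(uncompressed_bps: float, compressed: float) -> float:
    """Compression ratio needed to go from one bitrate to another."""
    return uncompressed_bps / compressed


if __name__ == "__main__":
    hd = 1.5e9   # uncompressed HD 1080i, ~1.5 Gbps
    big = 76e9   # the 76 Gbps example from the article

    print(f"HD at 10:1: {compressed_bps(hd, 10) / 1e6:.0f} Mbps")   # HD at 10:1: 150 Mbps
    print(f"HD to 200 Mbps: {implied_ratio(hd, 200e6):.1f}:1")      # HD to 200 Mbps: 7.5:1
    print(f"76 Gbps to 5 Gbps: {implied_ratio(big, 5e9):.1f}:1")    # 76 Gbps to 5 Gbps: 15.2:1
```

The same two functions work for any other format: multiply out the uncompressed rate for the raster of interest, then divide by the chosen ratio.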

The talk finishes with Lorent highlighting that JPEG XS was designed to be agnostic to colour space, chroma subsampling, bit depth, resolution and more. The codec itself is standardised as ISO/IEC 21122, and its carriage is standardised in SMPTE ST 2110-22, over RTP, in MPEG TS and, in the file domain, in MXF, HEIF, JXS and MP4 (ISO BMFF).


Speakers

Jean-Baptiste Lorent, Director Marketing & Sales, intoPIX