The streaming industry is on an ever-evolving quest to reduce latency, both to match or beat linear broadcast and to allow business models such as gaming to flourish. When streaming started, latency of a minute or more was not uncommon. Whilst there are some simple ways to improve on that, getting down to the latency of digital TV, approximately 5 seconds, is not without challenges. A 5-second target works for many use cases, but it's still not low enough for auctions, gambling or 'gamification', which need sub-second latency.

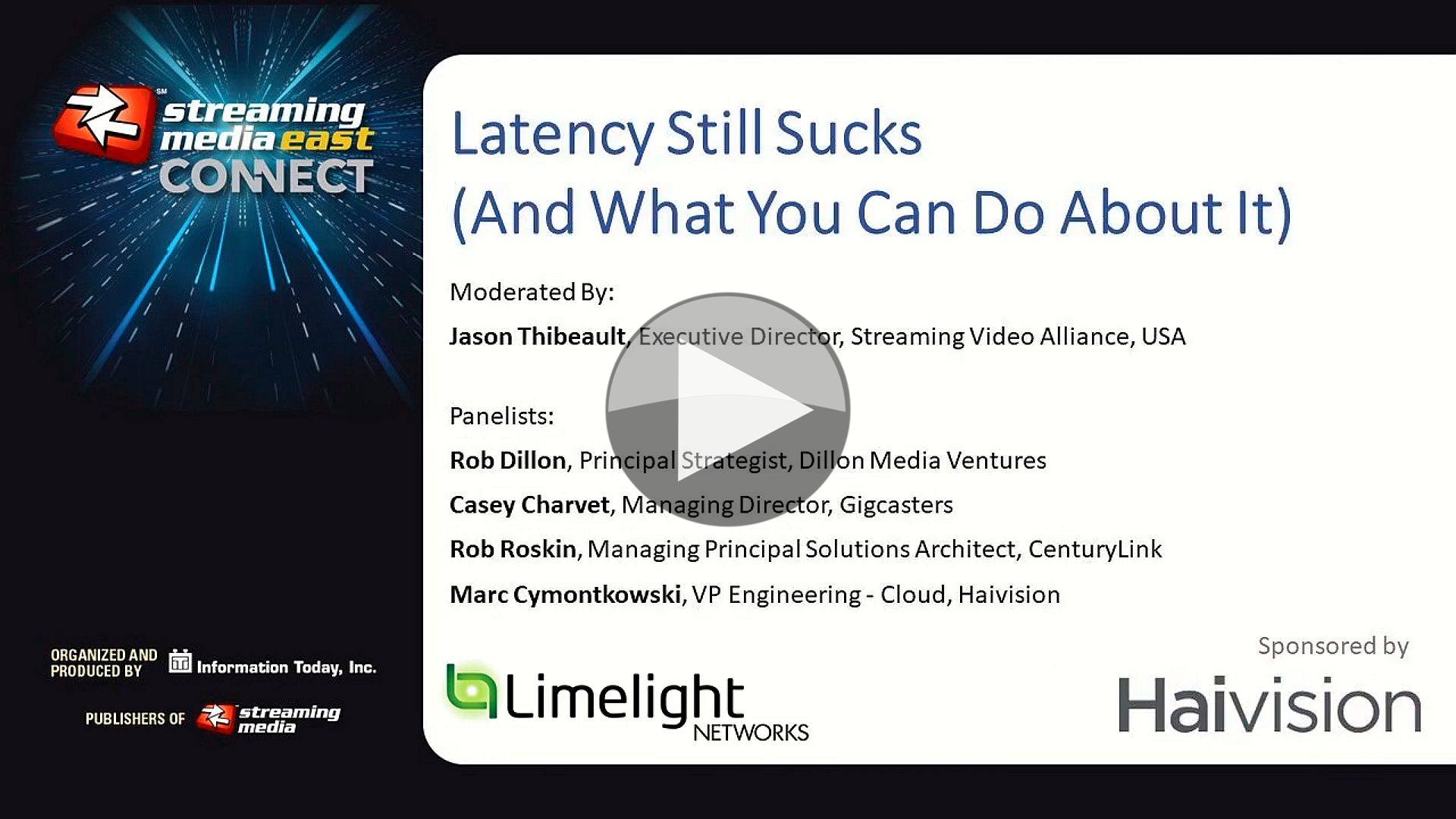

In this panel, Jason Thibeault explores how to reduce latency with Casey Charvet from Gigcasters, Rob Roskin from CenturyLink and Haivision's VP Engineering, Marc Cymontkowski. This wide-ranging discussion covers CDN caching, QUIC and HTTP/3, encoder settings, segmented vs. non-segmented streaming, and ingest and distribution protocols.

Key to the discussion is differentiating the ingest protocol from the distribution protocol. Often, just getting the content into the cloud quickly is enough to bring the latency within the customer's budget. Marc from Haivision explains how SRT achieves low-latency contribution. SRT allows unreliable networks like the internet to be used for reliable, encrypted video contribution. Created by Haivision and now an open source technology with an IETF draft spec, SRT has an alliance of users that continues to grow as the technology develops and adds features.

SRT is a 're-request' technology, meaning it achieves its reliability by re-requesting from the encoder any data it missed. This is in contrast to TCP, where the receiver acknowledges the data it gets and the sender retransmits anything that goes unacknowledged. Doing it the SRT way makes the protocol much more efficient and better able to cope with real-time media. SRT can also encrypt all traffic which, when sending over the internet, is extremely important even if you're not sending live sports.

In this video, Marc makes the point that SRT also recovers the timing of the stream so that the data comes out of the SRT pipe in the same 'shape' as it went in. Particularly with VBR encoding, your decoder needs to receive the same peaks and troughs as the encoder created so that it doesn't have to buffer the input as much. All this included, SRT manages to deliver a transport latency of around 2.5 times the round trip time.
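To make the 're-request' idea concrete, here is a minimal Python sketch of a receiver that only asks for the packets it notices are missing, plus a one-line estimate based on the 2.5 × round trip time figure quoted above. This is an illustration under stated assumptions, not SRT's actual implementation or API: the `NakReceiver` class, its fields and `transport_latency_ms` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class NakReceiver:
    """Toy receiver that re-requests only the packets it notices are missing.

    Hypothetical illustration of the re-request idea; not the SRT wire protocol.
    """
    next_expected: int = 0                        # next new sequence number we expect
    missing: set = field(default_factory=set)     # gaps we have already asked about
    buffer: dict = field(default_factory=dict)    # seq -> payload received so far

    def on_packet(self, seq: int, payload: bytes) -> list:
        """Store a packet and return the sequence numbers to re-request."""
        self.buffer[seq] = payload
        if seq in self.missing:
            # A retransmission filled a known gap: nothing new to request.
            self.missing.discard(seq)
            return []
        if seq < self.next_expected:
            # Duplicate of something we already have.
            return []
        # Every sequence number skipped before this packet is treated as lost.
        newly_missing = list(range(self.next_expected, seq))
        self.missing.update(newly_missing)
        self.next_expected = seq + 1
        return newly_missing


def transport_latency_ms(rtt_ms: float, multiplier: float = 2.5) -> float:
    """Rough transport latency using the ~2.5 x RTT figure from the talk."""
    return rtt_ms * multiplier


rx = NakReceiver()
rx.on_packet(0, b"a")
rx.on_packet(1, b"b")
to_request = rx.on_packet(4, b"e")   # packets 2 and 3 never arrived
print(to_request)
print(transport_latency_ms(40))      # estimate for a 40 ms round trip
```

Nothing flows back to the sender while packets arrive in order; only the gap triggers a request. The real protocol does considerably more, including the timing recovery and encryption described above.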

Haivision are also members of RIST, which is a similar technology to SRT. Marc explains that RIST approaches the problem from a standards perspective, taking IETF RFCs and applying them to RTP. SRT took a more pragmatic route by creating a binary which implemented the features and making it open source for interoperability.

The video finishes with a Q&A covering HTTP header compression, the recommended size of HLS chunks, peer-to-peer streaming and latency requirements for VoD.

Watch now!

Speakers

Rob Roskin, Principal Solutions Architect, Level3 Communications

Marc Cymontkowski, VP Engineering – Cloud, Haivision

Casey Charvet, Managing Director, Gigcasters

Jason Thibeault, Executive Director, Streaming Video Alliance