The pandemic has obviously hurt live broadcasters, sports in particular, but as the world starts its slow fight back to normality, we’re seeing sports back on the menu. How has streaming suffered and benefited? This video looks at how technology has changed in response, how content piracy has changed and how close we are to business as usual.

Jason Thibeault from the Streaming Video Alliance brings together Andrew Pope from Friend MTS, Brandon Farley from Streaming Global, SSIMWAVE’s Carlos Bacquet, Synamedia’s Nick Fielibert and Will Penson with Conviva to get an overview of the industry’s response to the pandemic over the last year and its plans for the future.

The streaming industry has a range of companies including generalist publishers, like many broadcasters, and specialists such as DAZN and NFL Game Pass. During the pandemic, the generalist publishers were able to rely more on their other genres and back catalogues, or even on news, which saw a big increase in interest. This is not to say that the pandemic made life easy for anyone. Sports broadcasters were undoubtedly hit, though companies such as DAZN, who show a massive range of sports, were able to dig deep into less mainstream sports from around the world, in contrast with services such as NFL Game Pass which can’t show any new games if the season is postponed. We’ve heard previously how esports benefited from the pandemic.

The panel discusses the changes seen over the last year. Views on security were mixed, with one company seeing little increase in security requests and another seeing a boost in requests for auditing and similar, so that people could be ready for when sports streaming was ‘back’. There was a renewed interest in how to make sports streaming better, where better means better scaling for some and lower latency for others, whereas many others are looking to bake in consistency and quality; “you can’t get away with ‘ok’ anymore.”

SSIMWAVE pointed out that some customers were having problems keeping the channel quality high and were even changing encoder settings to deal with the re-runs of their older footage, which was of lower quality than today’s sharp 1080p coverage. “Broadcast has set the quality mark” and streaming is trying to achieve parity. Netflix has shown that good quality goes on good devices. They’re not alone in being a streaming service which sees 50 per cent of its content watched on TVs rather than streaming devices. When your content lands on a TV, there’s no room for compromise on quality.

Crucially, the panel agrees that the pandemic has not been a driver for change. Rather, it’s been an accelerant of the intended change already desired and even planned for. If you take the age-old problem of bandwidth in a house with a number of people active with streaming, video calls and other internet usage, any bitrate you can cut out is helpful to everyone.

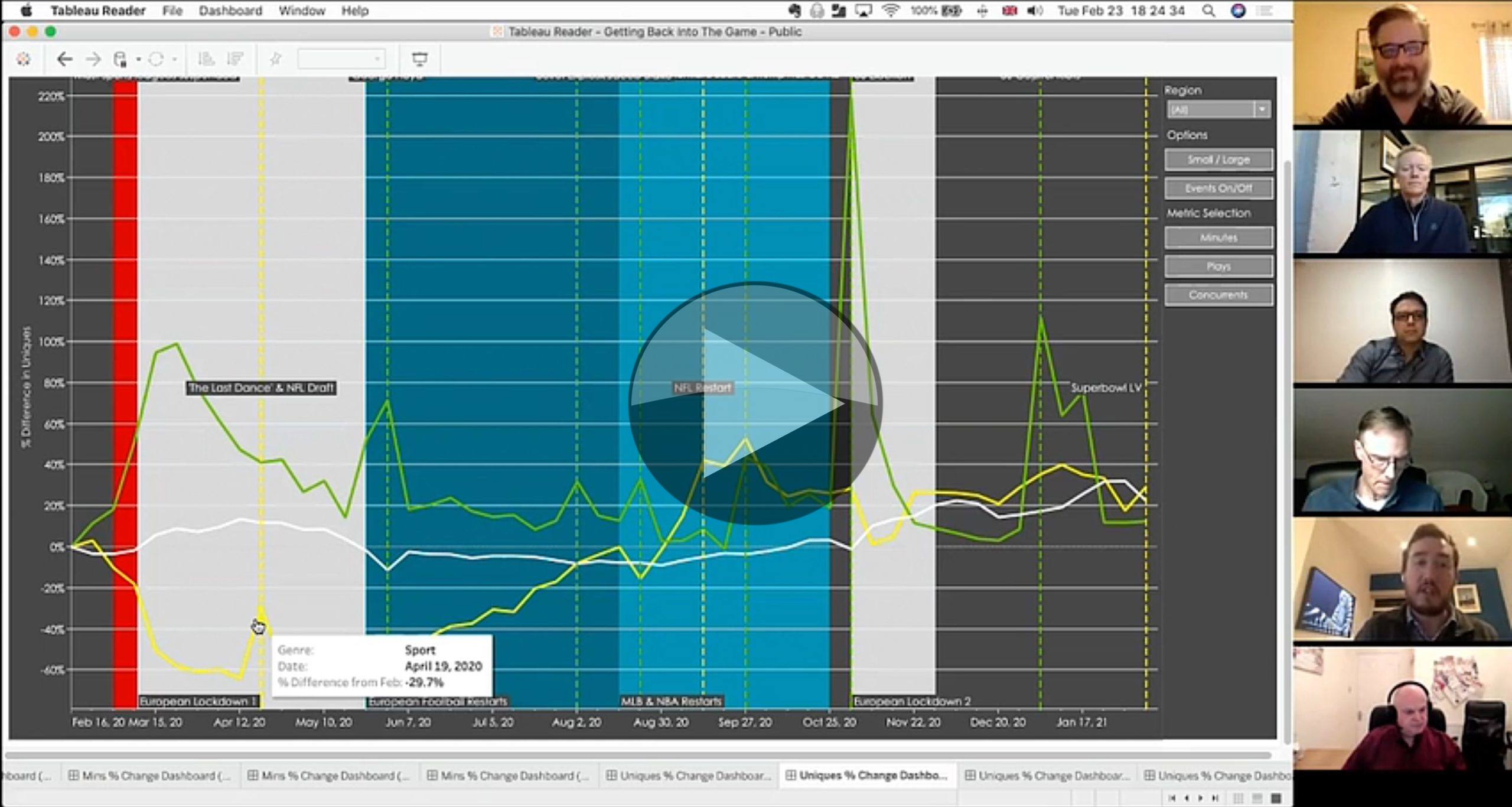

Next, Will from Conviva takes us through graphs for the US market showing how sports streaming dropped 60% at the beginning of the lockdowns, only to rebound after spectator-free sporting events started up. It is now running at around 50% higher than before March 2020. News has shown a massive uptick and currently retains a similar increase to sports, the main difference being that it continues to be very volatile. The difficulties of maintaining news output throughout the pandemic are discussed in this video from the RTS.
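To put those two percentages side by side, here is a rough back-of-the-envelope sketch. It assumes both figures are measured against the same pre-March 2020 baseline, which is set to an arbitrary index of 100; the variable names are purely illustrative and are not taken from Conviva’s data.

```python
# Illustrative index only: the baseline of 100 is an assumption, not Conviva data.
baseline = 100

# A 60% drop at the start of the lockdowns.
trough = baseline * (100 - 60) / 100

# Now running around 50% above the pre-March 2020 level.
current = baseline * (100 + 50) / 100

# How many times larger viewing is now compared with the low point.
growth_from_trough = current / trough

print(f"trough index:  {trough}")
print(f"current index: {current}")
print(f"growth from trough: {growth_from_trough}x")
```

Under that assumption, viewing fell to 40% of its earlier level and has since grown to 3.75 times the low point.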

Before hearing the panel’s predictions, we hear their thoughts on the challenges in improving. One issue highlighted is that sports is much more complex to encode than other genres, for instance, news. In fact, tests show that some sports content scores 25% lower than news for quality, according to SSIMWAVE, who acknowledge that snooker is less challenging than sailing. Delivering top-quality sports content remains a challenge, particularly as the drive for low latency requires smaller and smaller segment sizes, which restrict your options for GOP length and bandwidth.
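As a rough illustration of why shorter segments narrow the GOP choices, the sketch below lists the GOP lengths that fit evenly into a segment. It assumes a constant frame rate, closed GOPs and a keyframe at the start of every segment; the function name `valid_gop_lengths` and the 25 fps figure are hypothetical and not taken from the panel.

```python
def valid_gop_lengths(segment_seconds: float, fps: int) -> list[int]:
    """Return the GOP lengths (in frames) that divide a segment evenly.

    Assumes every segment must start on a keyframe, so a whole number
    of GOPs has to fit into each segment.
    """
    frames_per_segment = round(segment_seconds * fps)
    return [
        gop
        for gop in range(1, frames_per_segment + 1)
        if frames_per_segment % gop == 0
    ]


# Compare a traditional 6-second segment with shorter low-latency ones.
for seconds in (6, 2, 1):
    options = valid_gop_lengths(seconds, fps=25)
    print(f"{seconds}s segments: {len(options)} options, longest GOP {options[-1]} frames")
```

With these assumed numbers, a 1-second segment leaves only three choices (1, 5 or 25 frames), so keyframes, which are expensive to encode, have to appear at least once a second and take a larger share of the bitrate.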

To keep things looking good, the panel suggests content-aware encoding, where machine learning analyses the video and provides feedback to the encoder settings. Region of interest coding is another prospect for sports, where close-ups tend to want more detail in the centre as you look at the player but wide shots need to capture all detail. WebRTC has been talked about a lot, but not many implementations have been seen. The panel makes the point that advances in scalability have been noticeable for CDNs specialising in WebRTC, but its scalability lags behind other tech by, perhaps, 3 times. An alternative, Synamedia points out, is HESP. Created by THEOplayer, HESP delivers low latency, chunked streaming and very low ‘channel change’ times.

Watch now!

Speakers

Andrew Pope, Senior Solutions Architect, Friend MTS

Brandon Farley, SVP & Chief Revenue Officer, Streaming Global

Carlos Bacquet, Manager, Sales Engineers, SSIMWAVE

Nick Fielibert, CTO, Video Network, Synamedia

Will Penson, Vice President, GTM Strategy & Operations, Conviva

Jason Thibeault, Executive Director, Streaming Video Alliance