Will streaming really be any better with 5G? What problems won’t 5G solve? These are just a couple of the questions tackled in this panel from the Streaming Video Alliance. 5G brings so many improvements that it can be hard to articulate which ones matter most for a given use case. In this webinar, the use case is clear: streaming to the consumer.

Moderating the session, Dom Robinson kicks off the conversation by asking the panellists to dig below the hype and talk about what 5G means for streaming right now. Brian Stevenson is first up, explaining that the low-band 5G option is really useful as it allows operators to roll out 5G offerings with the spectrum they already have and, given its low frequency, get a good propagation distance. Even in the low frequencies, 5G can still give a 20% improvement in bandwidth. Whilst this is a good start, he continues, it’s in the mid-band, where bandwidth is 6x, that we can really start enabling the applications discussed in the rest of the talk.

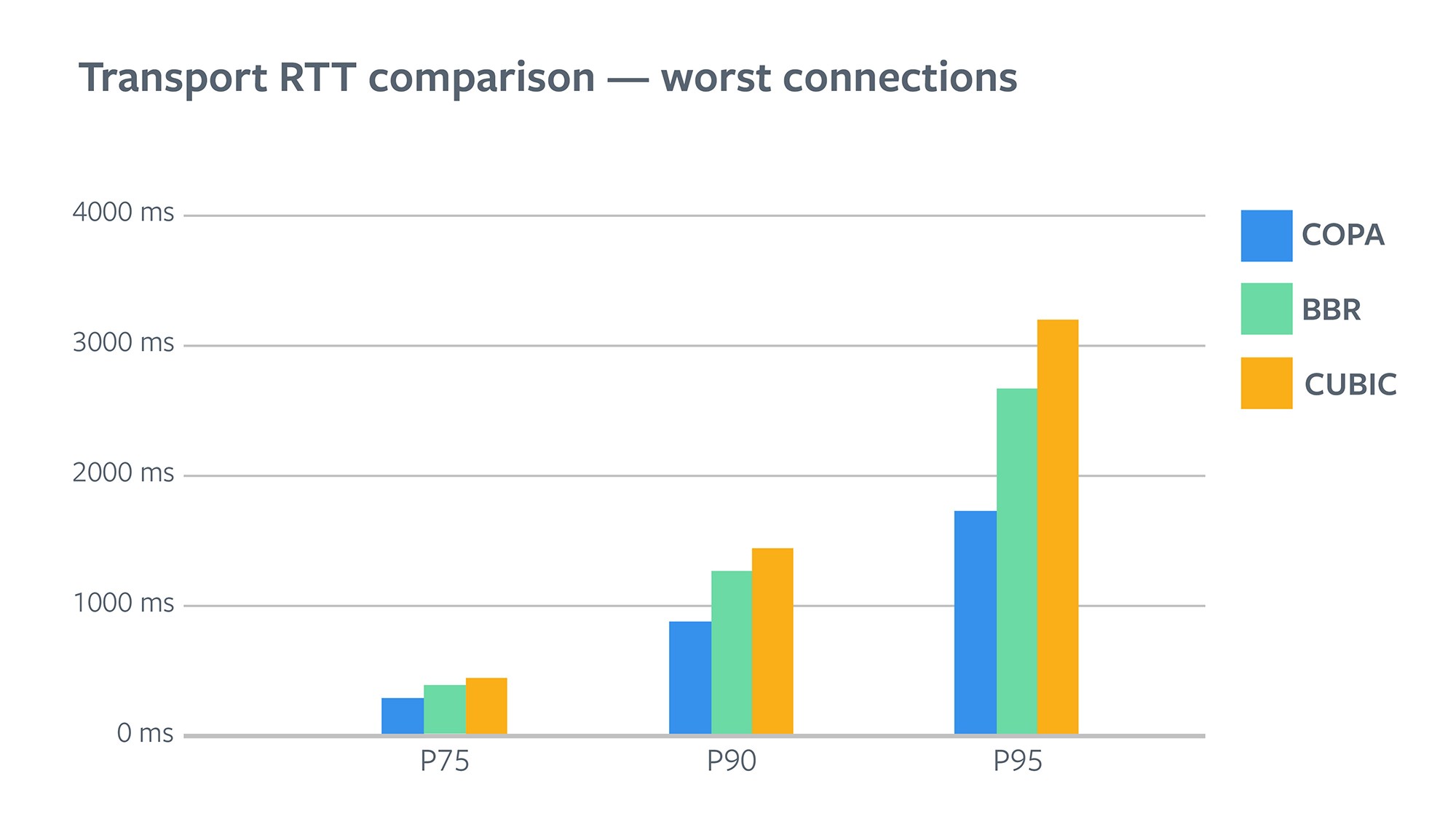

Humberto La Roche from Cisco says that, in his opinion, the focus needs to be on low latency. Latency at the network level is reduced when working in the millimetre wavelengths, dropping by around 10x. This is important even for video on demand. He points out, though, that delay arises within the IP network fabric as well as in the 5G protocol itself and the wavelength it’s working on. Adding buffers into the network drives down the cost of that infrastructure, so it’s important to look at ways of delivering the overall latency needed at a reasonable cost. We also hear from Sanjay Mishra, who explains that some telcos are already deploying millimetre wavelengths and focussing on advancing edge compute in high-density areas as their differentiator.

The panel discusses the current technical challenges for operators. Thierry Fautier draws from his experience of watching sports in the US on his mobile devices. The US has a zero-rating policy, he explains, where a mobile operator waives all data charges when you use a certain service, but only delivers the video at SD resolution at 1.5 Mbps. Whilst the benefits of this are obvious, people who buy new, often larger phones with better screens expect to see the difference. At SD, Thierry says, you can’t see the ball in tennis, so here 5G will offer the over-the-air network bandwidth needed to allow the telcos to offer HD as part of these deals.
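As a rough illustration of how the bandwidth multipliers quoted earlier (20% in the low band, 6x in the mid-band) interact with the 1.5 Mbps SD figure, the sketch below counts how many streams would fit in a shared cell. Only the SD bitrate and the two multipliers come from the panel; the 100 Mbps baseline capacity, the 5 Mbps HD bitrate and all names in the code are assumptions made purely for illustration, and the model ignores protocol overhead and other traffic.

```python
SD_KBPS = 1_500          # SD bitrate quoted by the panel (1.5 Mbps)
HD_KBPS = 5_000          # assumption: a plausible mobile HD bitrate
BASELINE_KBPS = 100_000  # assumption: hypothetical shared LTE cell capacity

# (label, numerator, denominator) keeps the scaling in integer arithmetic
SCENARIOS = (
    ("LTE baseline", 1, 1),
    ("5G low-band (+20%)", 6, 5),
    ("5G mid-band (6x)", 6, 1),
)


def concurrent_streams(capacity_kbps: int, stream_kbps: int) -> int:
    """Whole number of streams that fit, ignoring overhead and other traffic."""
    return capacity_kbps // stream_kbps


def stream_table() -> dict[str, tuple[int, int]]:
    """Map each scenario label to (SD streams, HD streams)."""
    table = {}
    for label, num, den in SCENARIOS:
        capacity = BASELINE_KBPS * num // den
        table[label] = (
            concurrent_streams(capacity, SD_KBPS),
            concurrent_streams(capacity, HD_KBPS),
        )
    return table


if __name__ == "__main__":
    for label, (sd, hd) in stream_table().items():
        print(f"{label}: {sd} SD streams or {hd} HD streams")
```

Under these assumed inputs, the mid-band cell fits more HD streams than the baseline cell fits SD streams, which is the kind of headroom that would make HD in zero-rated deals plausible.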

Preparing for 5G Video Streaming from Streaming Video Alliance on Vimeo.

The panel discusses the problems seen so far in delivering MBMS, multicast for mobile networks. MBMS has been deployed sporadically around the world in current LTE networks (using eMBMS) but has faced a typical chicken-and-egg problem. Both cell towers and mobile devices need to support the technology, and because eMBMS is not yet supported by many chipsets, including Apple’s, it hasn’t been worth the upgrade cost for the telcos. Thierry says there is hope for a 5G version of MBMS since Apple is now part of the 3GPP.

CMAF had a similar chicken-and-egg situation when it was finalised: there was hesitance in using it because Apple didn’t support it. Now, with iOS 14 supporting HLS in CMAF, there is much more interest in deploying such services. This is just as well, cautions Thierry, as all the talk of reduced latency in 5G or in the network itself won’t solve the main problem with streaming latency, which exists at the application layer. If services don’t abandon HLS/DASH and move to LL-HLS and LL-DASH/CMAF, then the improvements in latency lower down the stack will bring only minimal benefit to the viewer.
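A rough latency budget shows why the application layer dominates. The sketch below is illustrative only: the `LatencyBudget` class, its field names and every number in it (1 s for encoding and packaging, 6 s segments versus 0.5 s chunks, three units buffered by the player, and a 50 ms network delay falling to 5 ms to mirror the roughly 10x reduction mentioned earlier) are assumptions chosen for illustration, not figures from the panel.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Very simplified glass-to-glass latency model (all values in seconds)."""
    encode_s: float       # assumed encoder + packager delay
    network_s: float      # assumed one-way network delay, including the radio link
    unit_s: float         # duration of the smallest unit the player fetches
    buffered_units: int   # units the player holds before it starts playback

    def total(self) -> float:
        # Player buffering scales with unit duration; the network term does not.
        return self.encode_s + self.network_s + self.unit_s * self.buffered_units


def network_saving(unit_s: float, buffered_units: int,
                   net_before_s: float = 0.050, net_after_s: float = 0.005,
                   encode_s: float = 1.0) -> tuple[float, float]:
    """Return (total latency before, total latency after) a faster network."""
    before = LatencyBudget(encode_s, net_before_s, unit_s, buffered_units).total()
    after = LatencyBudget(encode_s, net_after_s, unit_s, buffered_units).total()
    return before, after


if __name__ == "__main__":
    for label, unit_s in (("6 s segments", 6.0), ("0.5 s chunks", 0.5)):
        before, after = network_saving(unit_s, buffered_units=3)
        print(f"{label}: {before:.3f} s -> {after:.3f} s with a 10x faster network")
```

With these assumed numbers the faster network trims the same 45 ms in both cases. That is negligible against a budget of about 19 s with long segments, and still small against about 2.5 s with short chunks; the large gain comes from shrinking the unit the player buffers, which is the application-layer change Thierry describes.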

Sanjay discusses coverage and penetration, which will forever be a problem. “All cell towers are not created equal.” The challenge will remain how far and wide coverage actually extends.

The panel finishes looking at what’s to come and suggests more ‘federations’ of companies working together, both commercially and technically, to deliver video to users in better ways. Thierry sums up the near future as providing higher quality experiences, making in-stadia experiences great and enabling immersive video.

Watch now!

Speakers

Brian Stevenson
SME, Streaming Video Alliance

Humberto La Roche
Principal Engineer, Cisco

Sanjay Mishra
Associate Fellow, Verizon

Thierry Fautier
President-Chair at Ultra HD Forum
VP Video Strategy at Harmonic

Moderator: Dom Robinson
Co-Founder, Director, and Creative Firestarter, id3as