Where is UHD? Whilst the move to HD for US primetime slots happened very quickly, HD had actually taken many years to gain a hold on the market. Now, though SD services are still numerous, top-tier channels all target HD and, in terms of production, SD doesn’t really exist. Is UHD successfully building the momentum needed to dominate the market in the way that HD does, or are there blockers? Is there the will but not the bandwidth? Can we show that UHD makes financial sense for a business? This video from the DVB Project and the Ultra HD Forum answers these questions.

Ian Nock takes the mic first and explains the Ultra HD Forum’s role in the industry before introducing Dolby’s Jason Power. Ian explains that the Ultra HD Forum is an open organisation focused on all aspects of Ultra High Definition, including HDR, Wide Colour Gamut (WCG), Next Generation Audio (NGA) and High Frame Rate (HFR). Jason Power is the chair of the DVB Commercial Module AVC Working Group. He starts by outlining the UHD-1 Phase 1 and Phase 2 specifications: Phase 1 defines the higher resolution and wider colour gamut, whereas Phase 2 delivers higher frame rates, better audio and HDR. DVB produces standards that define how these features can be used, and the majority of available UHD services are DVB-compliant.

On the topic of available services, Ben Schwarz takes the stand next to introduce the Ultra HD Forum’s ‘Service Tracker’, which tracks the UHD services available to the public around the world. Ben highlights that the number of available services tripled between 2018 and 2020. The tracker lets you sort by country, view services by resolution (from 2K to 8K) and more. Ben gives a demo and explains the future plans.

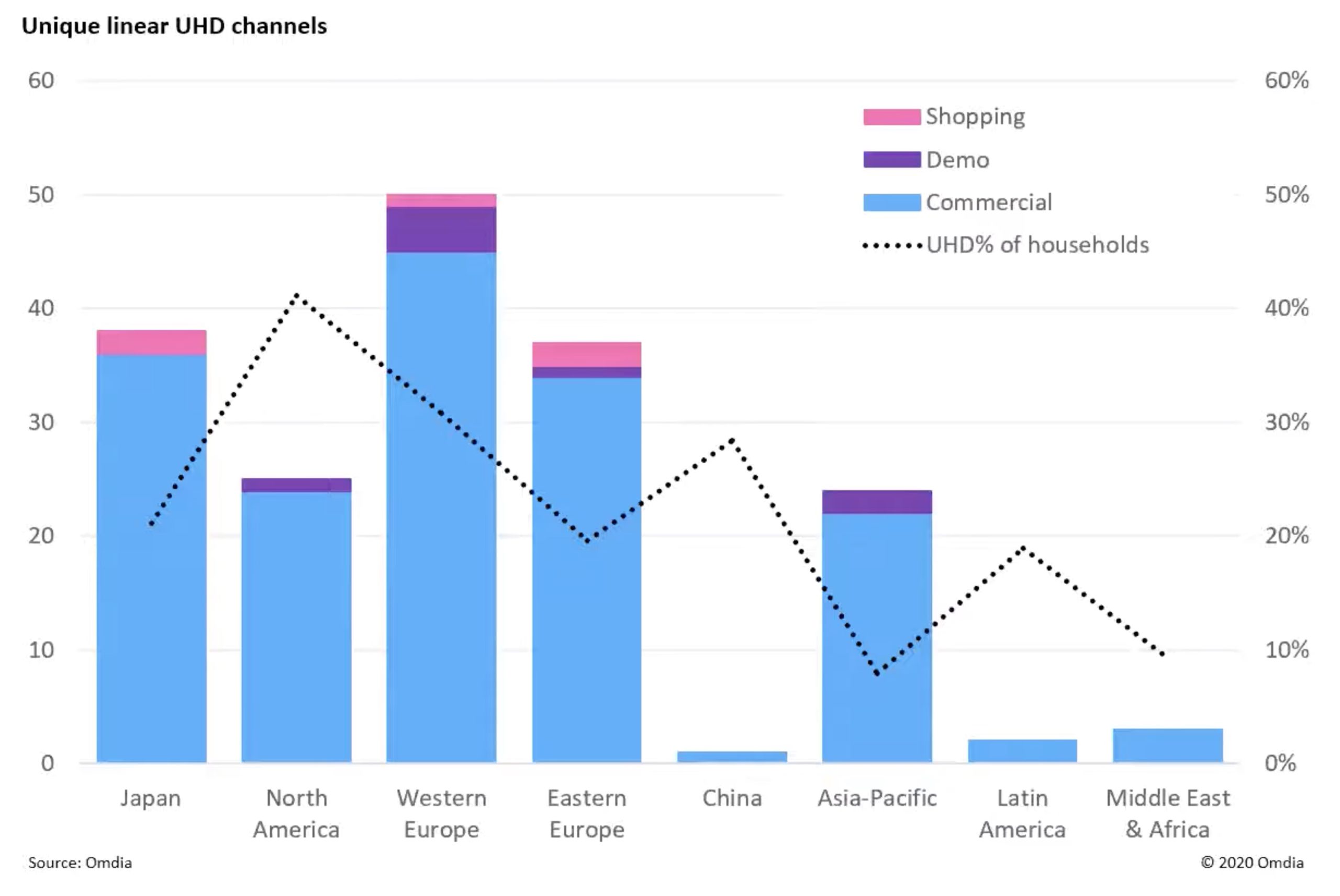

Paul Gray focuses on the global television set business. He starts by looking at how the US and Europe have caught up with China in terms of shipments, and notes that the trend of buying a TV set that is, on average, an inch larger than the year before shows little sign of abating. A positive for the industry, in light of Covid-19, is that the market is not predicted to shrink; rather, the expected growth will be stunted. The US replaces TVs more often than other countries, so the share of UHD TVs there is higher than anywhere else. Europe still has a large proportion of people who are happy with 32″ TVs, a size at which HD is perfectly adequate. Paul shows a graph plotting the UHD penetration of each market against the number of UHD services available. We see that Europe is notably in the lead and that China barely has any UHD services at all, though it should be noted that Omdia are counting linear services only.

Graph showing UHD penetration per geographical market vs. number of linear UHD services.

Graph and information © Omdia

The next part of the video is a 40-minute Q&A, which includes Virginie Drugeon explaining her work on defining the dynamic metadata sent to the receiver so that it can correctly adapt the picture, particularly for HDR, to the display itself. The Q&A covers the impact of Covid-19, recording formats for delivery to broadcasters, bitrates on satellite, the Ultra HD Forum’s foundational guidelines, new codecs within DVB, high frame rate content and many other topics.

Watch now!

Download the presentations

Speakers

Jason Power: Chair of the DVB Commercial Module AVC Working Group; Commercial Partnerships and Standards, Dolby Laboratories

Ben Schwarz: Chair of the Ultra HD Forum Communication Working Group

Paul Gray: Research Director, Omdia

Virginie Drugeon: Senior Engineer, Digital Standardisation, Panasonic

Moderator: Ian Nock, Chair of the Interoperability Working Group of the Ultra HD Forum; Principal Consultant & Founder, Fairmile West