PTP is evolving as is our ability to use it over WANs. This video explains what’s new in PTP’s second revision and the evolving techniques of using PTP over a wide area network such as the internet. As PTP was built assuming the use of LANs, the longer and more unpredictable latency of WANs throws off the timing calculations, so what can be done to compensate?

In this video from RAVENNA, Andreas Hildebrand from ALC Networx takes us through PTP 2.1, the 2019 revision of PTP following on from PTP 2.0 in 2008 and from the original 1.0 in 2002. Famously, 2.0 and 1.0 were not compatible with each other which has caused problems with some hardware implementations of DANTE which were first written when 1.0 was the only edition. Importantly, Andreas highlights, version 2.1 is backwards compatible with version 2.0. If you’re looking for a PTP primer before digging in, have a look at this intro video from Daniel Boldt, Meinberg

Andreas explains the use of PTP profiles within both AES67 and SMTPTE 2110 which standardise some of the parameters for using PTP such as message frequency. Whilst they do have different defaults, there is an overlap between the two allowing for use of AES67 streams withing both an AES67 ecosystem and with a 2110-30 ecosystem. These overlaps are detailed in the joint AES and SMPTE document, AES-R16-2016.

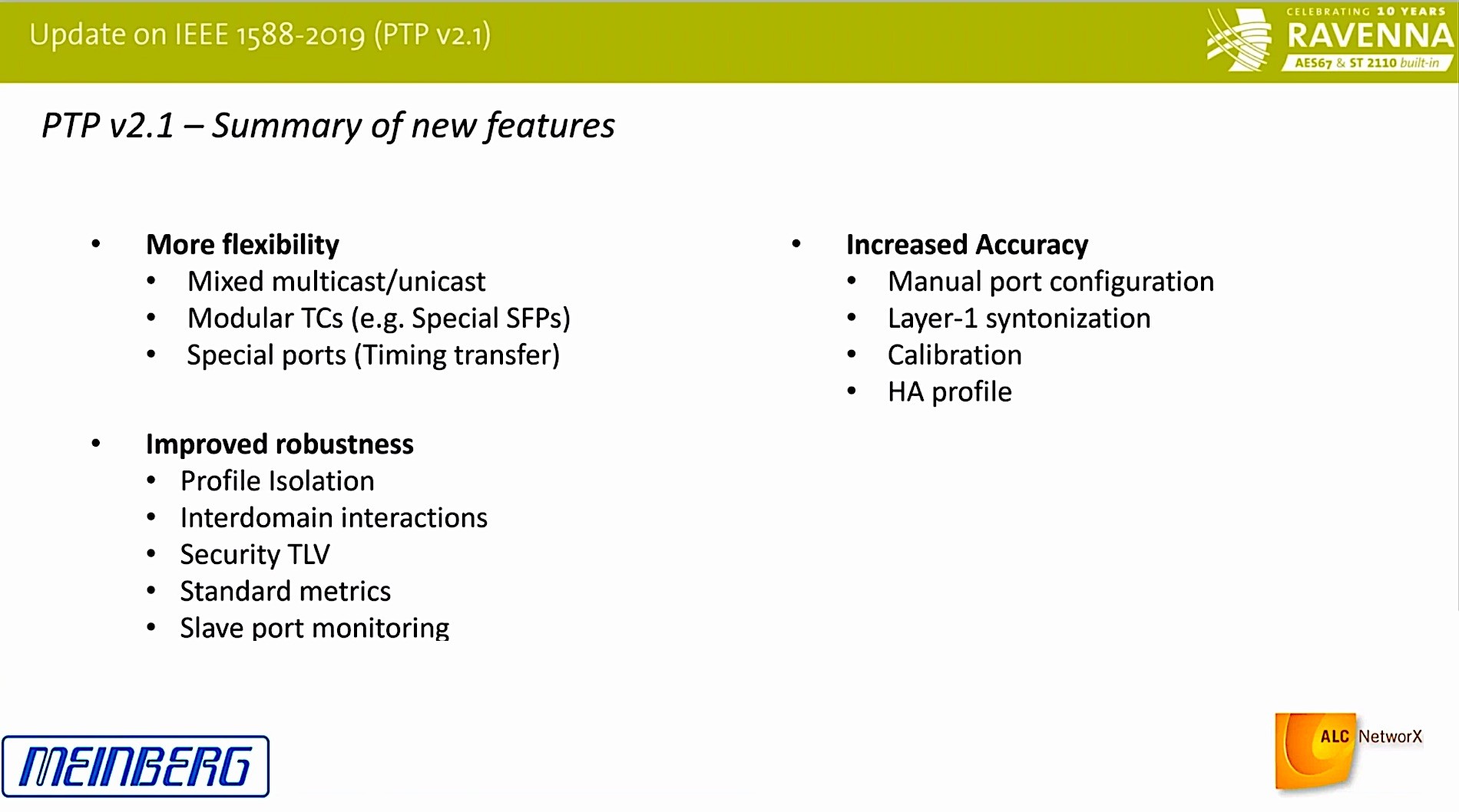

“What’s new in PTP v2.1?” asks Andreas. Apart from clearer language, accuracy, flexibility and robustness have been enhanced.

Flexibility

Flexibility comes from the ability to mixed multicast and unicast messages. Announce and sync messages are still sent in multicast, but queries like delayresponse & delayrequest can now be sent unicast which provides better scalability as, for many scenarios, the reply never needs to go back to any other computers. Another example of flexibility is modular transparent clocks i.e. ones in SFPs. Another flexibility improvement is that it’s now possible to isolate profiles without using different PTP domain numbers. This is by adding a Profile ID called ‘SdoId’.

Robustness and Security

PTP now allows inter-domain interactions effectively allowing multiple GMs to vote onto a single ‘multi-domain clock’. This becomes a very robust clock as it’s created from the insight of three grandmasters. PTP v2.1 adds source integrity checking to allow identification of false, injected, messages. A long-needed improvement as security, even of a LAN, can’t be assumed nowadays.

Performance and Accuracy

Stats reporting has been added to PTP v2.1 allowing monitoring of the average, minimum, max and standard deviation of a number of metrics from the leader clock, delay metrics and message reception counters. Accuracy has been boosted to sub-nanosecond by the CERN-related White Rabbit Project which is an overall benefit to PTP even if sub-nanosecond timing isn’t needed.

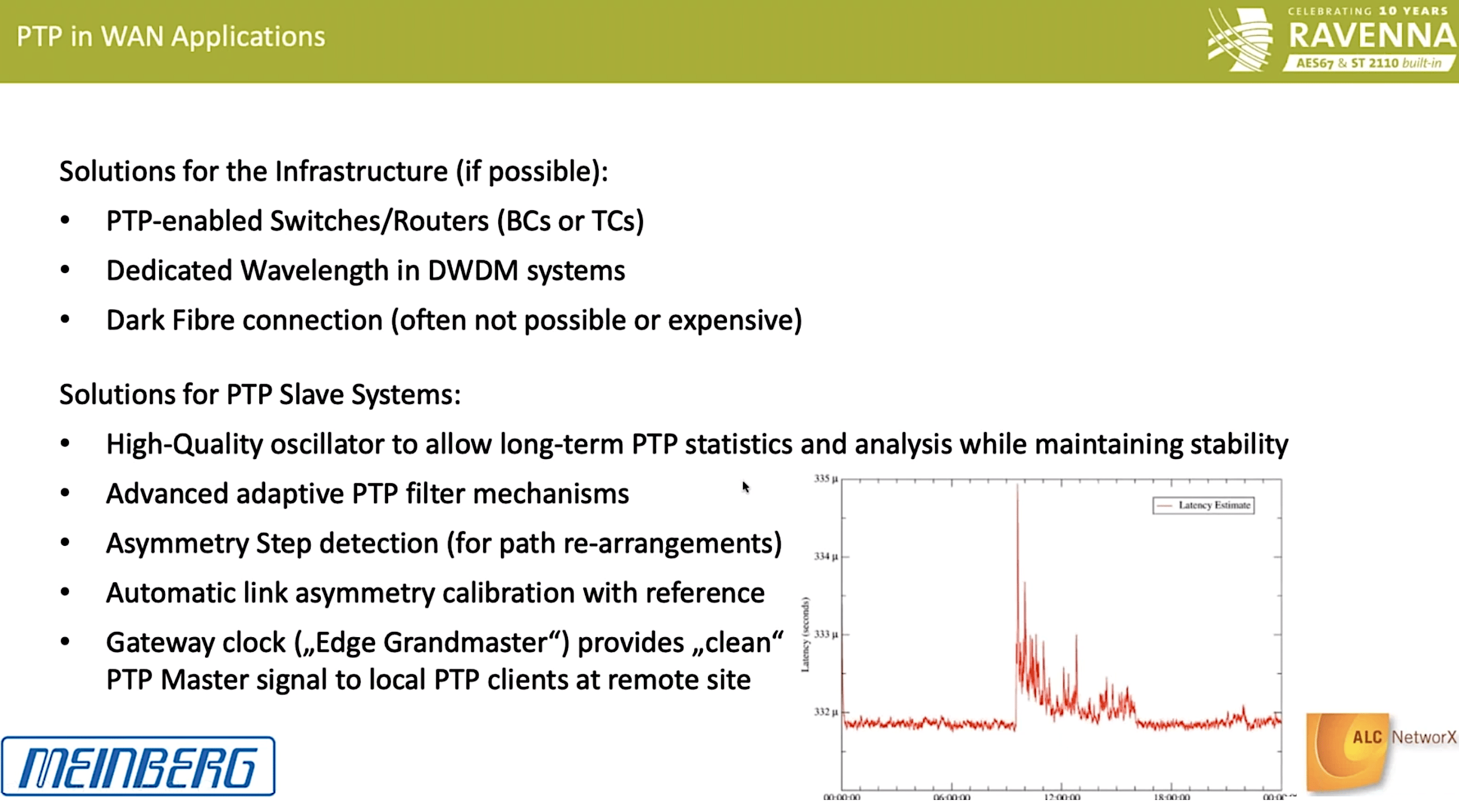

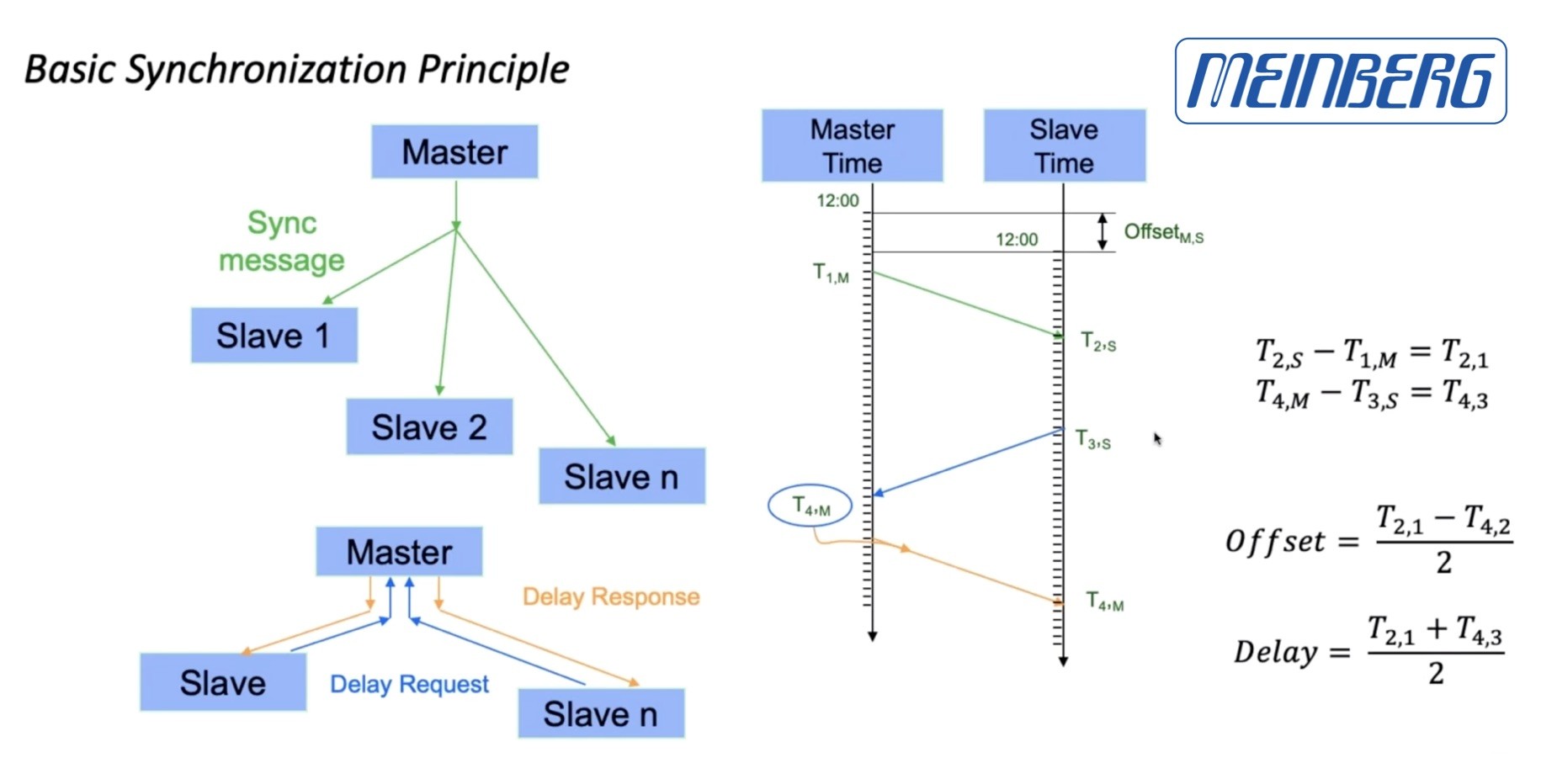

The second part of the video features Meinberg’s Daniel Boldt who discusses how to transmit PTP over the WAN. This is more challenging than a WAN because it’s more likely to be affected by queueing delays – particularly if the WAN in question is the internet. Queueing delays happen for a number of reasons but it all boils down to the fact the switches and routers often have to hold packets in a buffer, something that tends to happen more when there is more load. As such, this means that the delay is variable leading to varying jitter on the measurements.

Another problem often encountered is path changes where a switch happens in the network to divert the signal to a different path. Whilst this is a great way to route around problems, it does mean a sudden change in path length and therefore propagation delay. A conventional ping time may change from 100ms to 250ms in a second. This could have a big impact on the accuracy of a PTP timing signal if undetected.

Finally, the PTP timing algorithm assumes that it takes just as long, and no longer, to get the timing information from A to B as it does to get the follow-up reply from B to A. When one direction takes longer than the other, for instance when one direction is forced through a 100Mbps link rather than 1000Mbps, the PTP timing will have a constant timing error.

Daniel explains that these issues can be mitigated by more thorough processing of the incoming packets. For instance, having a high-quality oscillator which can maintain an accurate frequency for a long time without external input is helpful. Having a local GM on GPS is another good way to avoid problems, in the cases when this is practical, where the WAN PTP becomes an ‘aide’ to timing rather than the authority. Finally, the ‘lucky packets’ technique is demonstrated.

Daniel explains that if you look at the delay of each packet incoming over, say, a two-second period, you can collect all the packets that, based on the timestamp, were lucky enough not to be delayed a lot. By discarding those very-delayed packets, the accuracy of the PTP signal becomes much higher and jitter can reduce, as we see from two case studies, by an order of magnitude.

Watch now!

Speakers

|

Andreas Hildebrand RAVENNA Evangelist, ALC NetworX |

|

Daniel Boldt Head of Software Development, Meinberg |