“Enhance!” the captain shouts as the blurry image on the main screen becomes sharp and crisp again. This was sci-fi – and this still is sci-fi – but super-resolution techniques are showing that it’s really not that far-fetched. Machine learning can increase the apparent sharpness of video, enabling upscaling from HD to UHD as well as increases in frame rate.

Bitmovin’s Adithyan Ilangovan is here to explain the success they’ve seen with super-resolution and, though he concentrates on upscaling, this is just as relevant to improving downscaling. Here are our previous articles covering super-resolution.

Adithyan outlines two main enablers of super-resolution which allow it to displace traditional methods such as bicubic and Lanczos. The first is the advent of machine learning, which now has a good foundation of libraries and documentation, making it fairly accessible to a wide audience of coders. The second is the proliferation of GPUs and, particularly for mobile devices, neural engines. Using the GPUs inside CPUs or in desktop PCI slots allows the analysis to be done locally without transferring great amounts of video to the cloud solely for the purpose of processing or identification. And if your workflow is already in the cloud, it’s now easy to rent GPUs and FPGAs to handle such workloads.
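To make the "traditional methods" concrete, here is a minimal sketch of Lanczos resampling applied to a single row of pixel values. It is not taken from the talk: the function names `lanczos_kernel` and `upscale_row`, the window size `a = 3` and the edge-replication border handling are all assumptions chosen for illustration. The point is that a fixed kernel like this treats every frame the same way, which is the behaviour a learned upscaler aims to improve on.

```python
import math
from typing import List, Sequence


def lanczos_kernel(x: float, a: int = 3) -> float:
    """Lanczos window: sinc(x) * sinc(x / a) for |x| < a, otherwise 0."""
    if x == 0.0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def upscale_row(samples: Sequence[float], factor: int, a: int = 3) -> List[float]:
    """Resample one row of pixels to `factor` times as many samples.

    Hypothetical helper: borders are handled by repeating the edge pixel,
    and weights are normalised so flat areas stay flat.
    """
    n = len(samples)
    out: List[float] = []
    for j in range(n * factor):
        # Position of output sample j in input coordinates (pixel centres aligned).
        x = (j + 0.5) / factor - 0.5
        base = math.floor(x)
        acc = 0.0
        weight_sum = 0.0
        # Visit the 2 * a input samples nearest to x.
        for i in range(base - a + 1, base + a + 1):
            w = lanczos_kernel(x - i, a)
            src = samples[min(max(i, 0), n - 1)]  # clamp index at the borders
            acc += w * src
            weight_sum += w
        out.append(acc / weight_sum)
    return out


if __name__ == "__main__":
    row = [10.0, 10.0, 200.0, 200.0]
    print(upscale_row(row, 2))
```

A full image upscaler would apply the same pass along rows and then columns. Because the kernel has negative lobes, values near a sharp edge can overshoot the original range, which shows up as ringing in the picture.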

Using machine learning doesn’t only allow for better upscaling on a frame-by-frame basis, but we are also able to allow it to form a view of the whole file, or at least the whole scene. With a better understanding of the type of video it’s analysing (cartoon, sports, computer screen etc.) it can tune the upscaling algorithm to deal with this optimally.

Anime has seen a lot of tuning for super-resolution. Due to anime’s long history, there are a lot of old cartoons which are both noisy and low resolution. These are still enjoyed now but would benefit from more resolution to match the screens we now routinely use.

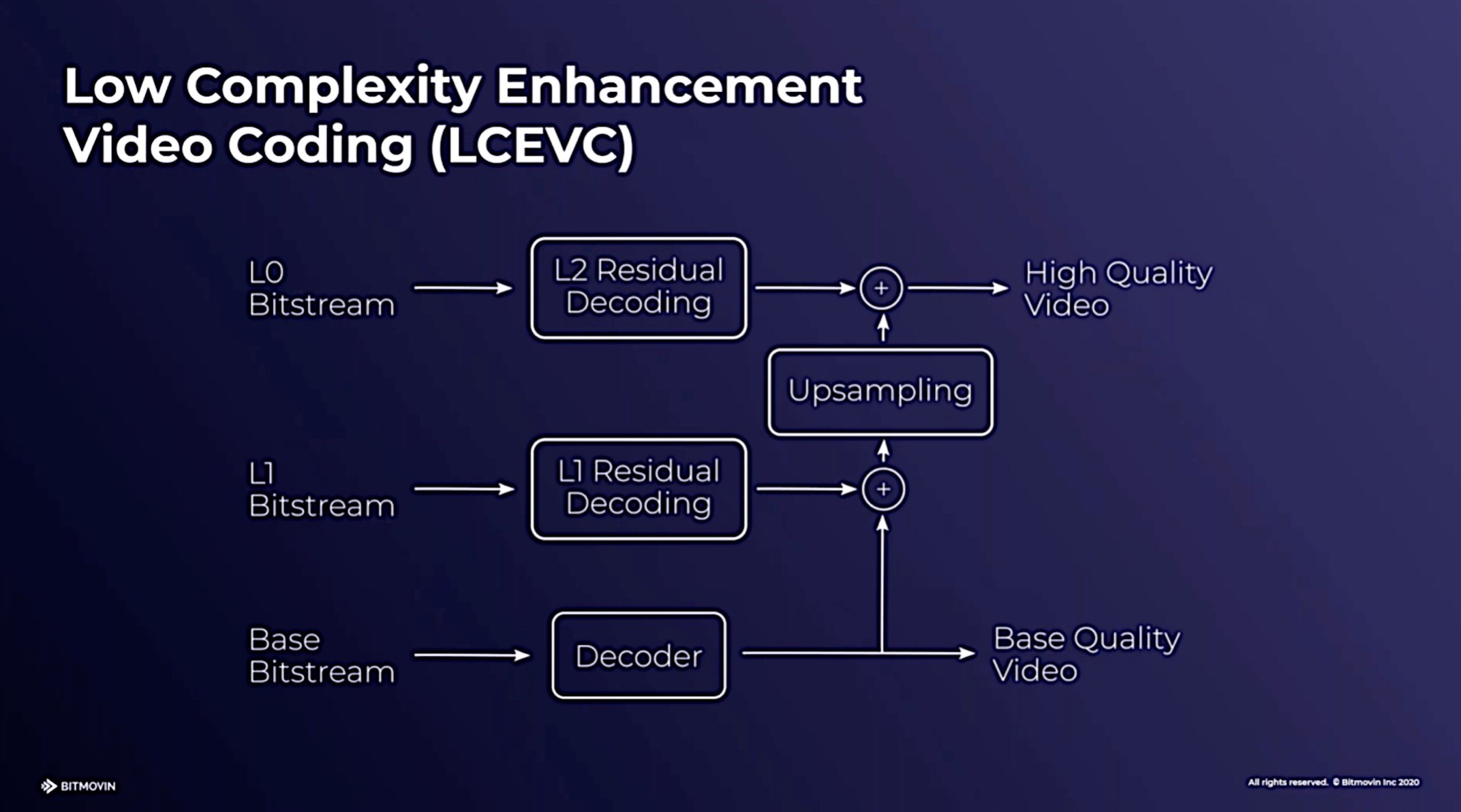

Adithyan finishes by asking how we should best take advantage of super-resolution. Codecs such as LCEVC use it directly within the codec itself, but for systems that have pre- and post-processing around the codec, Adithyan suggests it’s viable to consider reducing the bitrate to cut CDN costs, knowing that by using super-resolution at the decoder, the video quality can actually be maintained.
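The bitrate-for-cost trade can be sketched with simple arithmetic. The figures below (an 8 Mbps stream reduced to 5 Mbps, and a price of 0.05 per GB) are made-up placeholders rather than numbers from the talk, and `delivered_gb` and `cdn_saving` are hypothetical helper names. Real CDN pricing is usually tiered, so treat this as a rough estimate only.

```python
def delivered_gb(bitrate_mbps: float, hours_watched: float) -> float:
    """Data delivered in decimal gigabytes for a given bitrate and viewing time."""
    bits = bitrate_mbps * 1e6 * 3600 * hours_watched
    return bits / 8 / 1e9


def cdn_saving(original_mbps: float, reduced_mbps: float,
               hours_watched: float, price_per_gb: float) -> float:
    """Cost difference from delivering at the reduced bitrate (flat per-GB price assumed)."""
    saved_gb = (delivered_gb(original_mbps, hours_watched)
                - delivered_gb(reduced_mbps, hours_watched))
    return saved_gb * price_per_gb


if __name__ == "__main__":
    # Placeholder inputs: 100,000 viewing hours, 8 Mbps reduced to 5 Mbps.
    hours = 100_000
    print(f"GB at 8 Mbps: {delivered_gb(8.0, hours):,.0f}")
    print(f"GB at 5 Mbps: {delivered_gb(5.0, hours):,.0f}")
    print(f"Saving: {cdn_saving(8.0, 5.0, hours, 0.05):,.2f}")
```

The saving scales linearly with the bitrate reduction, so the practical question is how far the bitrate can drop before decoder-side super-resolution can no longer restore the perceived quality.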

The video ends with a Q&A.

Watch now!

Download the slides

Speaker

Adithyan Ilangovan, Encoding Engineer, Bitmovin