VVC, MPEG’s successor to HEVC, has now been released. But what is it? And whilst it promises up to 50% bitrate savings over HEVC, how does it compare with other codecs such as AV1 and MPEG’s other new standards? This primer answers these questions and more.

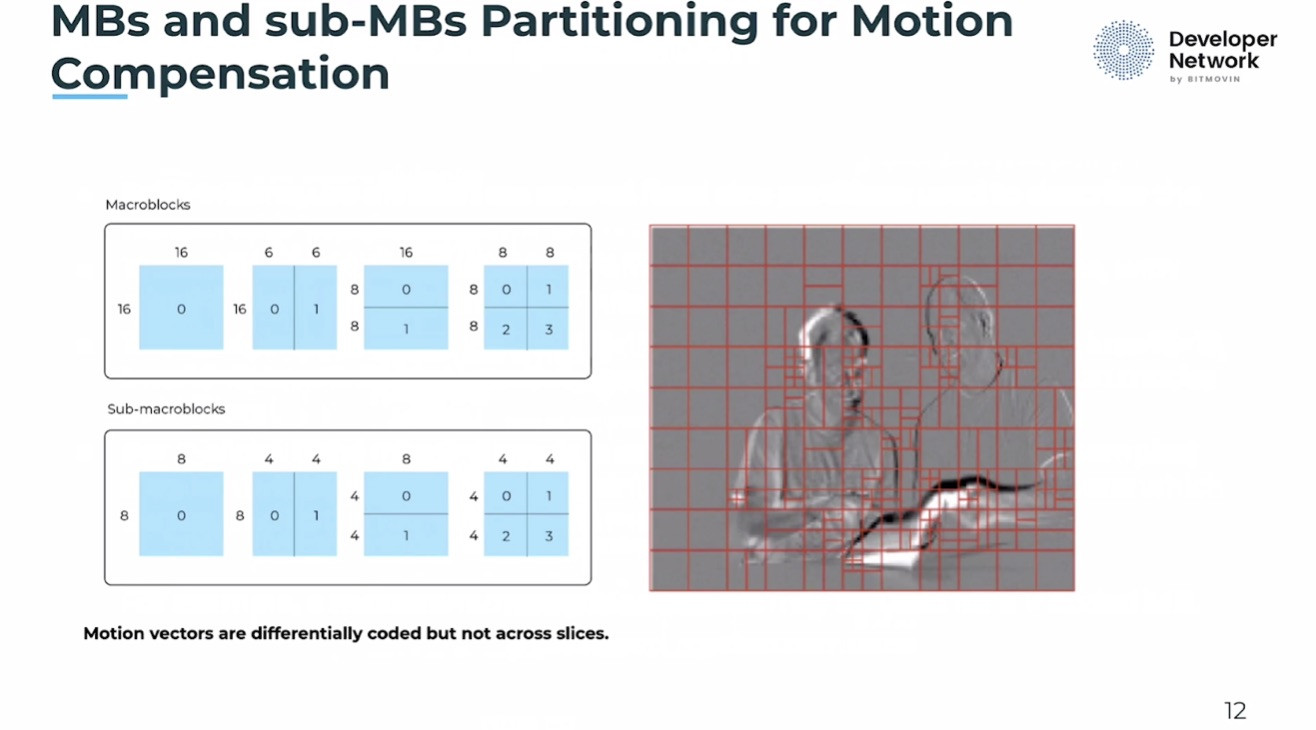

Christian Feldmann from Bitmovin starts by looking at four current codecs: AVC, HEVC, VP9 and AV1. VP9 isn’t often heard about in traditional broadcast circles, but it’s relatively widely used online, as it’s supported on Android phones and brings bitrate savings over AVC. Google use VP9 on YouTube for compatible players and see a higher viewer retention rate; Netflix and Twitch also use it. AV1 is also in use by the tech giants, though its adoption beyond the companies that built it (Netflix, Facebook etc.) is not yet apparent. Christian compares the codecs on compatibility, hardware decoding, efficiency and cost.

Looking now at the other upcoming MPEG codecs, Christian examines MPEG-5 Essential Video Coding (EVC), which has two profiles: Baseline and Main. The Baseline profile only uses technologies old enough to be outside of patent claims, so you can use the codec without worrying that a patent holder will come out of the woodwork asking for a fee. The Main profile, however, does include patented technology and performs better. Businesses wishing to use it can pay for licences, but if an unexpected patent holder appears, each individual tool in the codec can be disabled, allowing you to continue using the codec, albeit without that technology. Whilst it is a shame that patents are so difficult to account for, this shows MPEG has taken seriously the situation with HEVC, which famously has hundreds of licensable patents, with over a third of eligible companies not part of a patent pool. EVC’s Baseline profile performs 32% better than AVC, and its Main profile 25% better than HEVC.

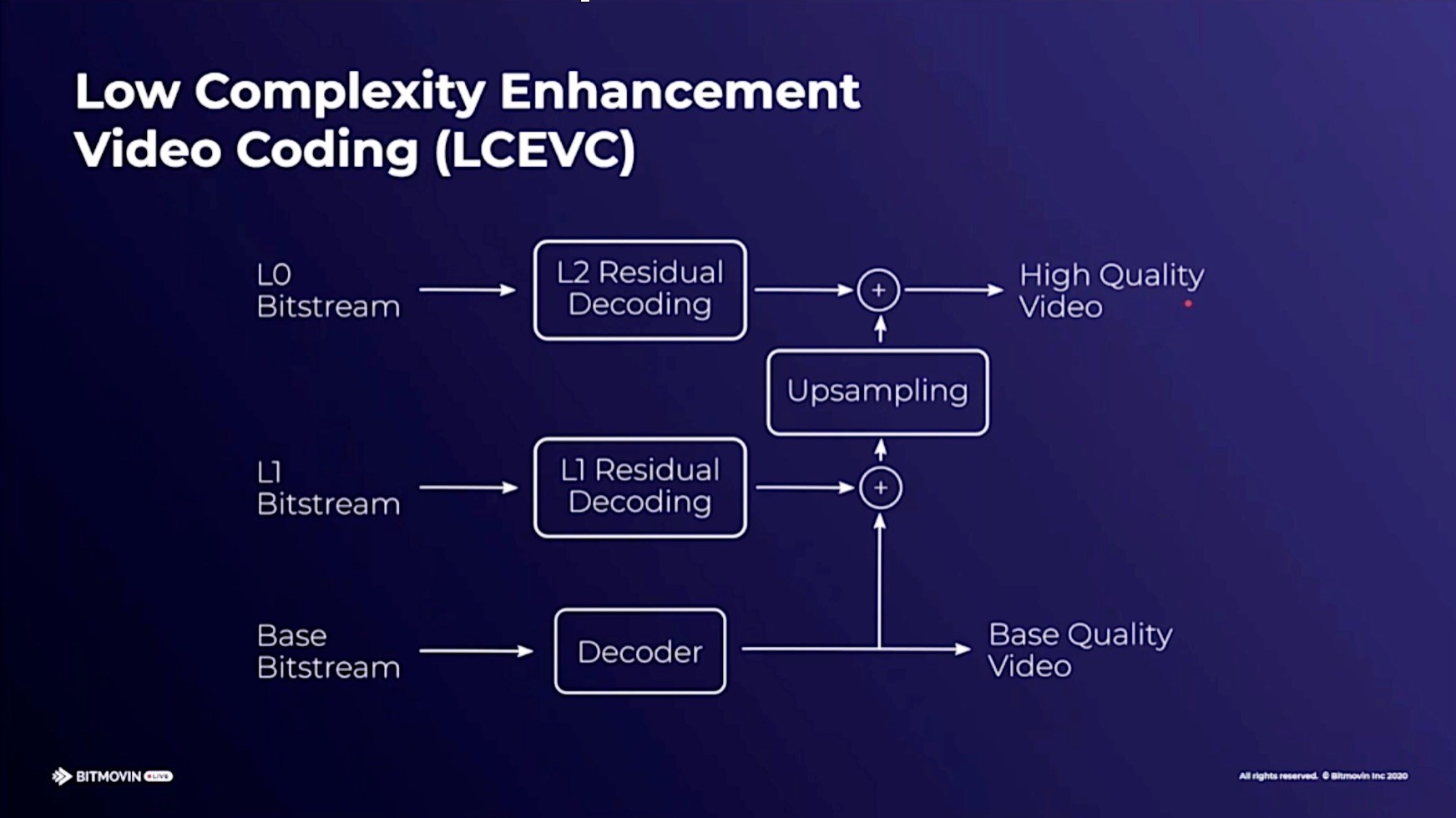

Next under the magnifying glass is Low Complexity Enhancement Video Coding (LCEVC). We’ve already heard about this on The Broadcast Knowledge from Guido, CEO of V-Nova, who gave deeper dives at Demuxed 2019 and, more recently, at Streaming Media West. Whilst those are detailed talks, this is a great overview of the technology, which is actually a hybrid approach to encoding. It lets you take any existing codec, such as AVC or AV1, and layer LCEVC on top of it. Using both together allows you to run your base encoder at a lower resolution (say, HD instead of UHD) and then deliver this low-resolution encode to the decoder along with a small stream of enhancement information, which the decoder uses to scale the video back up to size and restore the missing detail. The big win here, as the name indicates, is low complexity: the method is very flexible and can take advantage of all sorts of available computing power, in embedded devices as well as in servers. In set-top boxes, otherwise unused parts of the SoC can be put to work. In phones, both the onboard HEVC decoding chip and the CPU can be used. It’s also useful for automated workflows, as the base codec stream is smaller and hence easier to decode; plus, because the enhancement information concentrates on the edges of objects, it can be used on its own by AI/machine learning algorithms to analyse video footage more readily. Encoding time drops by over a third for AVC and EVC.
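To make the base-plus-enhancement idea concrete, here is a toy sketch in Python. It is not the LCEVC specification: the function names are hypothetical, the "base codec" is simulated by simple pixel quantisation, downscaling is a 2×2 average and upscaling is nearest-neighbour. Real LCEVC uses specified up/downsamplers, transforms and two compressed residual layers, whereas here the residual is kept lossless so the round trip is exact. It does show the key point from the talk: the residual is small in flat areas and large at object edges.

```python
# Toy base + enhancement layering, loosely in the spirit of LCEVC.
# Assumptions (not from the standard): frames are 2D lists of ints with even
# dimensions, the base codec is simulated by quantisation, and the residual
# (enhancement layer) is sent losslessly.
from typing import List, Tuple

Frame = List[List[int]]

def downscale(frame: Frame) -> Frame:
    """Halve resolution by averaging each 2x2 block."""
    h, w = len(frame), len(frame[0])
    return [[(frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]) // 4
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]

def base_codec(frame: Frame, step: int = 8) -> Frame:
    """Hypothetical stand-in for any lossy base codec (AVC, HEVC, AV1...)."""
    return [[(p // step) * step for p in row] for row in frame]

def upscale(frame: Frame) -> Frame:
    """Double resolution by repeating each pixel horizontally and vertically."""
    out: Frame = []
    for row in frame:
        wide = [p for p in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def encode(frame: Frame) -> Tuple[Frame, Frame]:
    """Return (low-resolution base layer, full-resolution residual)."""
    base = base_codec(downscale(frame))
    predicted = upscale(base)  # what a decoder rebuilds from the base alone
    residual = [[o - p for o, p in zip(orow, prow)]
                for orow, prow in zip(frame, predicted)]
    return base, residual

def decode(base: Frame, residual: Frame) -> Frame:
    """Upscale the base layer and add the enhancement residual back in."""
    predicted = upscale(base)
    return [[p + r for p, r in zip(prow, rrow)]
            for prow, rrow in zip(predicted, residual)]
```

For a 4×4 frame whose rows are all `[10, 10, 10, 200]`, `encode` yields a 2×2 base of `[[8, 104], [8, 104]]` and residual rows of `[2, 2, -94, 96]`: tiny in the flat region, large at the edge, and `decode` restores the original frame.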

Now, Christian looks at the codec du jour, Versatile Video Coding (VVC), explaining that its enhancements over HEVC come not just from bitrate improvements but also from techniques which better encode screen content (e.g. computer games), allow for better 360-degree video and reduce delay. Subjective results show up to 50% bitrate savings. For more detail on VVC, check out this talk from Microsoft’s Gary Sullivan.

The talk finishes with answers to audience questions: which codec will be the winner, what future device and hardware support will look like, and which is best for real-time streaming.

Watch now!

Speakers

Christian Feldmann, Team Lead, Encoding, Bitmovin