SRT is an enabler for contribution over the internet, whether point to point or cloud egress/ingress. In recent weeks here on The Broadcast Knowledge we have seen different takes on how SRT, short for Secure Reliable Transport, and RIST can be used, including one from Open Broadcast Systems.

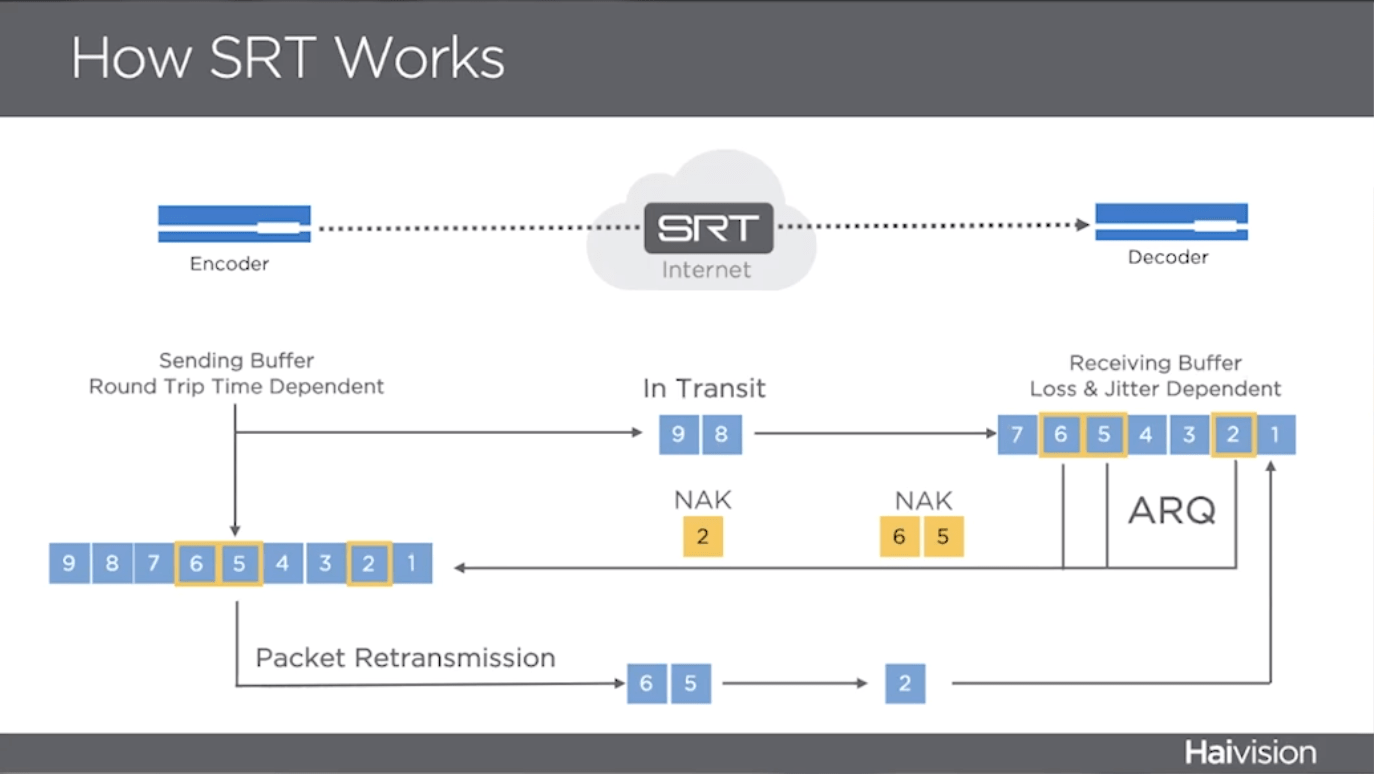

Here, Karel Boek, CEO of Raskenlund, a Norwegian consultancy company for streaming, explains SRT and builds a workflow as a live demo showing how you can implement it quickly and easily. He starts by explaining where SRT sits and what it’s for. SRT makes contribution over the internet possible because it has a very light-touch way of recovering missing packets, which are inevitable on internet links. Karel covers Haivision’s creation of SRT and the SRT Alliance that has grown out of it, which now boasts 350 members. The protocol being Open Source, and now an IETF Draft, means that a lot of companies have been happy to adopt it. There are frequent plugfests (one has just concluded) where vendors test compatibility with the increasing set of features offered in SRT.

‘Secure’ is the ‘S’ in SRT’s name because the stream can be easily encrypted as part of the protocol. This is an important aspect in enabling sports and enterprise contribution in the cloud, since it gives the assurance that no-one can watch the feed before it gets to its destination.

‘Reliable’ is the key offer of SRT, as the number one problem with the internet and other networks is that not all packets get delivered. TCP/IP is a great protocol on which most webpages are delivered. It’s fantastic for file delivery since every single packet gets acknowledged and there really isn’t any way that a file can get to the other end without being completely intact. Live streams can’t afford the overhead of counting in and counting out every packet, so SRT’s ability to request only the missing packets is very important. It should be noted that this ability, known as ARQ, is also in Zixi and RIST.
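
To make the idea of requesting only the missing packets concrete, here is a minimal Python sketch of selective retransmission. It is an illustration only, not SRT's wire protocol or API: the function names, the eight-packet stream and the choice of lost packets are all hypothetical, and a real implementation also has to deal with timing, reordering and sequence-number wrap-around.

```python
def detect_gaps(arrived_seqs, expected_count):
    """Receiver side: list the sequence numbers that did not arrive."""
    arrived = set(arrived_seqs)
    return [seq for seq in range(expected_count) if seq not in arrived]


def retransmit(send_buffer, nak_list):
    """Sender side: resend only the packets the receiver reported missing."""
    return {seq: send_buffer[seq] for seq in nak_list}


# Hypothetical stream of 8 packets; packets 2 and 5 are lost in transit.
send_buffer = {seq: f"payload-{seq}" for seq in range(8)}
received = {seq: data for seq, data in send_buffer.items() if seq not in (2, 5)}

# The receiver reports only the gaps instead of acknowledging every packet.
nak_list = detect_gaps(received.keys(), expected_count=len(send_buffer))

# The sender answers with just those packets, and the receiver fills the holes.
received.update(retransmit(send_buffer, nak_list))

# Reassemble the stream in sequence order.
stream = [received[seq] for seq in sorted(received)]
```

Only two of the eight packets travel a second time, and nothing is sent back for the six that arrived safely. That saving is why this approach suits live video better than acknowledging every packet.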

Karel compares SRT with other protocols including RTMP and MPEG-2 Transport Streams, amongst others. He is careful to separate HLS, MPEG-DASH and WebRTC as ‘last-mile protocols’ in order to differentiate between protocols which content providers use to move video around as part of production and those which are used for distribution. RTMP’s use is still notable but diminishing, particularly in the European and American markets. MPEG-TS over UDP is still the best way to deliver within a building. Outside of the building, you would then want to protect it at least with FEC, with SMPTE 2022-7 or, better, with a protocol such as RIST or SRT. Karel mentions the details of the Simple Profile of RIST, which was, by design, missing the features Karel notes are absent. We’ve heard here on The Broadcast Knowledge that these features have been delivered as planned in the Main Profile.

In the final part of this talk, Karel builds, live, an example workflow which combines both Wowza and SRTHub to create an end-to-end workflow. This is a great way of demonstrating how quickly you can create a workflow with SRT. There are plenty of SRT-enabled encoders and senders, which is one of the ways we can judge the success of the SRT Alliance. Similarly, whilst Haivision’s SRTHub is a useful product which brings things together in the cloud or on-prem, Techex’s MWEdge and Videoflow’s DVG can do similar or more, each with their own advantages.

Overall, the takeaway from this talk from Raskenlund is that internet contribution is sorted; it’s now for you to choose how to do it and with whom. To that end, the talk ends with a Q&A from people wondering exactly that.

Watch now!

Speaker

Karel Boek, CEO, Raskenlund