These next 12 months are going to see 3 new MPEG standards being released. What does this mean for the industry? How useful will they be and when can we start using them? MPEG’s coming to the market with a range of commercial models to show it’s learning from the mistakes of the past so it should be interesting to see the adoption levels in the year after their release. This is part of the second session of the Vienna Video Tech Meetup and delves into startup time for streaming services.

In the first talk, Dr. Christian Feldmann explains the current codec landscape highlighting the ubiquitous AVC (H.264), UHD’s friend, HEVC (H.265), and the newer VP9 & AV1. The latter two differentiate themselves by being free to used and are open, particularly AV1. Whilst slow, both the latter are seeing increasing adoption in streaming, but no one’s suggesting that AVC isn’t still the go-to codec for most online streaming.

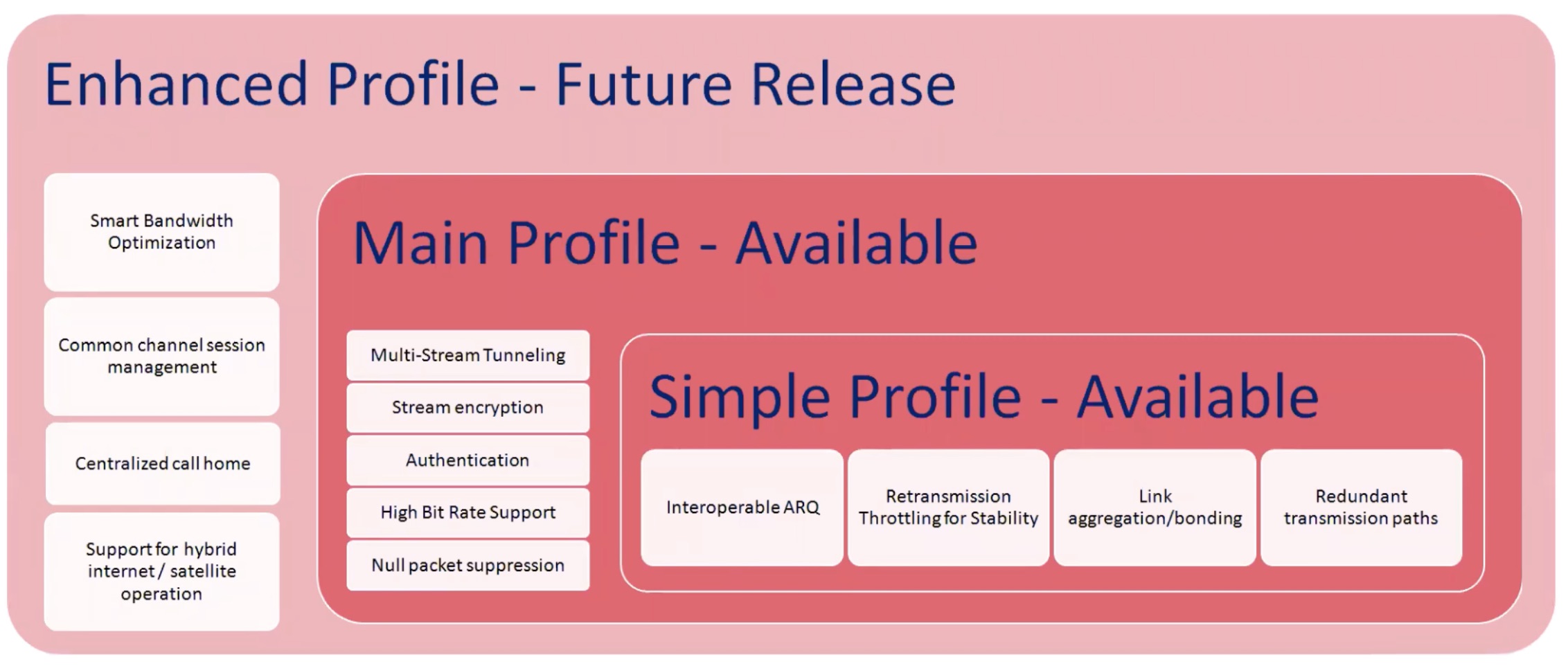

Christian then introduces the three new codecs, EVC (Essential Video Coding), LCEVC (Low-Complexity Enhancement Video Coding) and VVC (Versatile Video Coding) all of which have different aims. We start by looking at EVC whose aim is too replicate the encoding efficiency of HEVC, but importantly to produce a royalty-free baseline profile as well as a main profile which improves efficiency further but with royalties. This will be the first time that you’ve been able to use an MPEG codec in this way to eliminate your liability for royalty payments. There is further protection in that if any of the tools is found to have patent problems, it can be individually turned off, the idea being that companies can have more confidence in deploying the new technology.

The next codec in the spotlight is LCEVC which uses an enhancement technique to encode video. The aim of this codec is to enable lower-end hardware to access high resolutions and/or lower bitrates. This can be useful in set-top boxes and for online streaming, but also for non-broadcast applications like small embedded recorders. It can achieve a light improvement in compression over HEVC, but it’s well known that HEVC is very computationally heavy.

LCEVC reduces computational needs by only encoding a lower resolution version (say, SD) of the video in a codec of your choice, whether that be AVC, HEVC or otherwise. The decoder will then decode this and upscale the video back to the original resolution, HD in this example. This would look soft, normally, but LCEVC also sends enhancement data to add back in the edges and detail that would have otherwise been lost. This can be done in CPU whilst the other decoding could be done by the dedicated AVC/HEVC hardware and naturally encoding/decoding a quarter-resolution image is much easier than the full resolution.

Lastly, VVC goes under the spotlight. This is the direct successor to HEVC and is also known as H.266. VVC naturally has the aim of improving compression over HEVC by the traditional 50% target but also has important optimisations for more types of content such as 360 degree video and screen content such as video games.

To finish this first Vienna Video Tech Meetup, Christoph Prager lays out the reasons he thinks that everyone involved in online streaming should obsess about Video Startup Time. After defining that he means the time between pressing play and seeing the first frame of video. The longer that delay, the assumption is that the longer the wait, the more users won’t bother watching. To understand what video streaming should be like, he examines Spotify’s example who have always had the goal of bringing the audio start time down to 200ms. Christophe points to this podcast for more details on what Spotify has done to optimise this metric which includes activating GUI elements before, strictly speaking, they can do anything because the audio still hasn’t loaded. This, however, has an impact of immediacy with perception being half the battle.

“for every additional second of startup delay, an additional 5.8% of your viewership leaves”

Christophe also draws on Akamai’s 2012 white paper which, among other things, investigated how startup time puts viewers off. Christophe also cites research from Snap who found that within 2 seconds, the entirety of the audience for that video would have gone. Snap, of course, to specialise in very short videos, but taken with the right caveats, this could indicate that Akamai’s numbers, if the research was repeated today, may be higher for 2020. Christophe finishes up by looking at the individual components which go towards adding latency to the user experience: Player startup time, DRM load time, Ad load time, Ad tag load time.

Watch now!

Speakers

|

Dr. Christian Feldmann

Team Lead Encoding,

Bitmovin |

|

Christoph Prager

Product Manager, Analytics

Bitmovin |

|

Markus Hafellner

Product Manager, Encoding

Bitmovin |