ATSC 3.0 is bringing IP delivery to terrestrial broadcast. Streaming data live over the air is no mean feat, but nevertheless can be achieved with standard protocols such as MPEG DASH. The difficulty is telling the other end what’s its receiving and making sure that security is maintained ensuring that no one can insert unintended media/data.

In the second of this webinar series from the IEEE BTS, Adam Goldberg digs deep into two standards which form part of ATSC 3.0 to explain how security, delivery and signalling are achieved. Like other recent standards, such as SMPTE’s 2022 and 2110, we see that we’re really dealing with a suite of documents. Starting from the root document A/300, there are currently twenty further documents describing the physical layer, as we learnt last week in the IEEE BTS webinar from Sony’s Luke Fay, management and protocol layer, application and presentation layer as well as the security layer. In this talk Adam, who is Chair of a group on ATSC 3.0 security and vice-chair one on Management and Protocols, explains what’s in the documents A/331 and A/360 which between them define signalling, delivery and security for ATSC 3.0.

Security in ATSC 3.0

One of the benefits of ATSC 3.0’s drive into IP and streaming is that it is able to base itself on widely developed and understood standards which are already in service in other industries. Security is no different, using the same base technology that secure websites use the world over to achieve security. Still colloquially known by its old name, SSL, the encrypted communication with websites has seen several generations since the world first saw ‘HTTPS’ in the address bar. TLS 1.2 and 1.3 are the encryption protocols used to secure and authenticate data within ATSC 3.0 along with X.509 cryptographic signatures.

Authentication vs Encryption

The importance of authentication alongside encryption is hard to overstate. Encryption allows the receiver to ensure that the data wasn’t changed during transport and gives assurance that no one else could have decoded a copy. It provides no assurance that the sender was actually the broadcaster. Certificates are the key to ensuring what’s called a ‘chain of trust’. The certificates, which are also cryptographically signed, match a stored list of ‘trusted parties’ which means that any data arriving can carry a certificate proving it did, indeed, come from the broadcaster or, in the case of apps, a trusted third party.

Signalling and Delivery

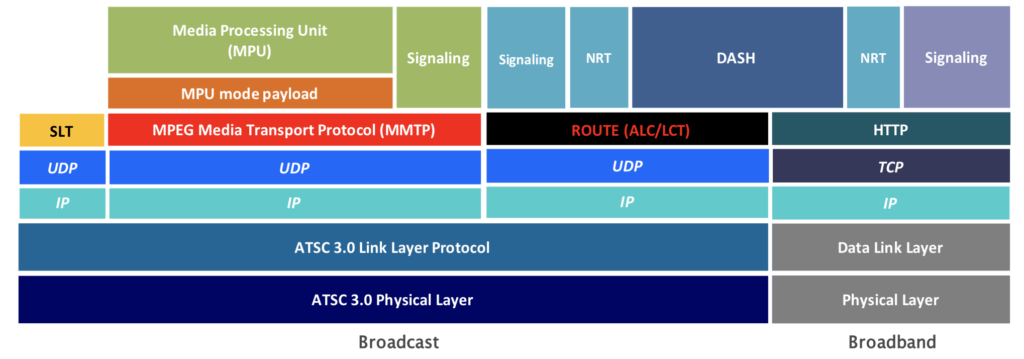

Telling the receiver what to expect and what it’s getting is a big topic and dealt with in many places with in the ATSC 3.0 suite. The Service List Table (SLT) provides the data needed for the receiver to get handle on what’s available very quickly which in turn points to the correct Service Layer Signaling (SLS) which, for a specific service, provides the detail needed to access the media components within including the languages available, captions, audio and emergency services.

Media delivery is achieved with two technologies. ROUTE (Real-Time Object Delivery over Unidirectional Transport ) which is an evolution of FLUTE which the 3GPP specified to deliver MPEG DASH over LTE networks. and MMTP (Multimedia Multiplexing Transport Protocol) an MPEG standard which, like MPEG DASH is based on the container format ISO BMFF which we covered in a previous video here on The Broadcast Knowledge

Register now for this webinar to find out how this all connects together so that we can have safe, connected television displaying the right media at the right time from the right source!

Speaker

|

Adam Goldberg Chair, ATSC 3.0 Specialist Group on ATSC 3.0 Security Vice-chair, ATSC 3.0 Specialist Group on Management and Protocols Director Technical Standards, Sony Electronics |