We have NMOS IS-04, IS-05, IS-06, IS-07 and so on, all the way to IS-10. Has it become too complex? Each NMOS specification brings an important feature to an IP/SMPTE ST-2022 workflow, but not every system needs every one, so life can become confusing. To help, NVIDIA (which owns Mellanox) has been developing an open-source project which allows for quick and easy deployment of an NMOS test system.

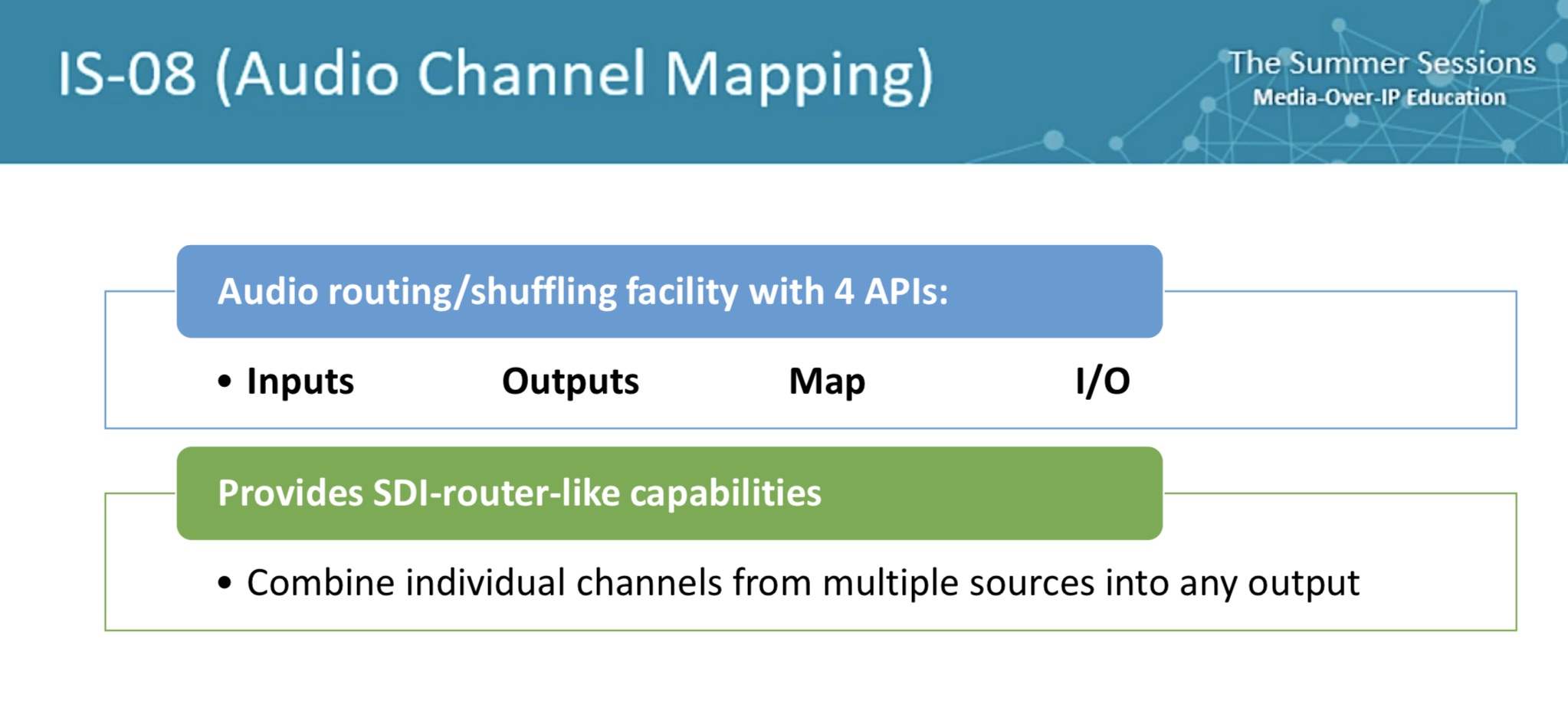

Kicking off the presentation, Félix Poulin explains how the EBU Pyramid for Media Nodes shows that SMPTE ST 2110 depends on a host of surrounding technologies to create a large system. These include discovery and registration, channel mapping, event and tally, network control, security and more. Félix shows how AMWA’s BCP-003-01 gives guidelines on securing NMOS communications, and how IS-09 allows nodes to join the system and collect system parameters before registering themselves in the IS-04 registry. IS-05 and IS-06 allow endpoints to be connected, either through IGMP with IS-05 or by an SDN controller using IS-06. IS-08 allows for audio mapping/shuffling, with BCP-002-01 marking which streams belong together and can be taken as a bundle. IS-07 gives a way for event and tally information to be passed from place to place.
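To make the split between IS-04 and IS-05 more concrete, here is a minimal Python sketch of the requests a controller might build: one URL to look up senders in an IS-04 registry, and one PATCH body to stage a connection on an IS-05 receiver. The helper names, base URLs and IDs are hypothetical and chosen only for illustration; the URL paths and JSON field names are intended to follow the published IS-04 Query API and IS-05 Connection API, so check them against the specification version you target. Nothing here touches the network.

```python
import json


def query_api_url(registry_base: str, resource: str, version: str = "v1.3") -> str:
    """Build an IS-04 Query API URL, e.g. .../x-nmos/query/v1.3/senders."""
    return f"{registry_base.rstrip('/')}/x-nmos/query/{version}/{resource}"


def staged_receiver_url(node_base: str, receiver_id: str, version: str = "v1.1") -> str:
    """Build the IS-05 'staged' endpoint URL for a single receiver."""
    return (
        f"{node_base.rstrip('/')}/x-nmos/connection/{version}"
        f"/single/receivers/{receiver_id}/staged"
    )


def build_connection_patch(sender_id: str, sdp: str) -> dict:
    """Body for a PATCH to the staged endpoint: connect to a sender and activate now."""
    return {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": {"mode": "activate_immediate"},
        "transport_file": {"type": "application/sdp", "data": sdp},
    }


if __name__ == "__main__":
    # Hypothetical hosts and IDs, for illustration only.
    print(query_api_url("http://registry.example", "senders"))
    print(staged_receiver_url("http://node.example", "receiver-uuid"))
    print(json.dumps(build_connection_patch("sender-uuid", "v=0\r\n"), indent=2))
```

A real controller would send the PATCH with an HTTP client and then read back the receiver's active parameters to confirm the connection took effect.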

With so much already published, getting started can seem quite daunting. For that reason, there is now an ‘NMOS at a glance’ document on the NMOS website. Gareth Sylvester-Bradley from Sony looks at the ongoing work within NMOS, such as finalising IS-10 and BCP-003-02, both of which will enable secure authorisation of clients in the system. He explains how AMWA works and how it keeps the NMOS activity groups heading in the right direction, with sufficient business cases and participation. He also outlines the importance of the NMOS testing tool and the criteria used for quality and adoption. Gareth finishes by discussing the other in-progress work from NMOS, including work on EDID connection management as part of the pro AV IPMX project.

Finally, Richard Hastie introduces ‘Easy-NMOS’, which provides very easy deployment of IS-04, IS-05 and IS-09 along with BCP-003-01 and BCP-002-01. Introduced in 2019 by Mellanox, now part of NVIDIA, it is an easy-to-deploy, containerised set of three ‘servers’ which quickly bring up these technologies along with a test suite. It doesn’t move media, but it creates valid NMOS nodes and includes an MQTT broker. One container holds the NMOS registry, controller and MQTT broker, one is a virtual node and the last is an NMOS testing service. Richard walks us through the four-line install and brief configuration, then demonstrates how to use it.
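Once a test system like this is running, a quick sanity check is to ask the registry which nodes it knows about. The sketch below is one way to do that from Python, assuming the registry exposes the standard IS-04 Query API at a base URL you supply; the function names and the example address are hypothetical and are not taken from the Easy-NMOS project. The HTTP call is injected as a parameter so the logic can be exercised without a live registry.

```python
import json
from urllib.request import urlopen


def http_get_json(url: str, timeout: float = 5.0):
    """Fetch a URL and decode the JSON response body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def registered_node_labels(registry_base: str, fetch=http_get_json, version: str = "v1.3"):
    """Return the sorted labels of all nodes listed by the IS-04 Query API."""
    url = f"{registry_base.rstrip('/')}/x-nmos/query/{version}/nodes"
    nodes = fetch(url)
    # Each node resource is a JSON object; 'label' is its human-readable name.
    return sorted(node.get("label", "") for node in nodes)


if __name__ == "__main__":
    # Assumed address; substitute the IP or hostname given to your registry.
    try:
        print(registered_node_labels("http://192.0.2.10"))
    except OSError as exc:
        print(f"Registry not reachable: {exc}")
```

If the virtual node has registered successfully, its label should appear in the returned list.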

Watch now!

Speakers

Félix Poulin, Director, Media Transport Architecture & Lab, CBC/Radio-Canada

Gareth Sylvester-Bradley, Principal Engineer, Sony EPE

Richard Hastie, Senior Sales Director, Mellanox Business Development, NVIDIA