We’re “past the early-adopter stage” of SMPTE 2110, notes Andy Rayner from Nevion as he introduces this case study of a multi-national broadcaster who’s created a 2110-based live production network spanning ten countries.

This isn’t the first IP project that Nevion have worked on, but it’s doubtless the biggest to date. Andy says it’s through projects like these that he has seen the IP market mature, both in how broadcasters want to use IP and, to an extent, in the solutions available.

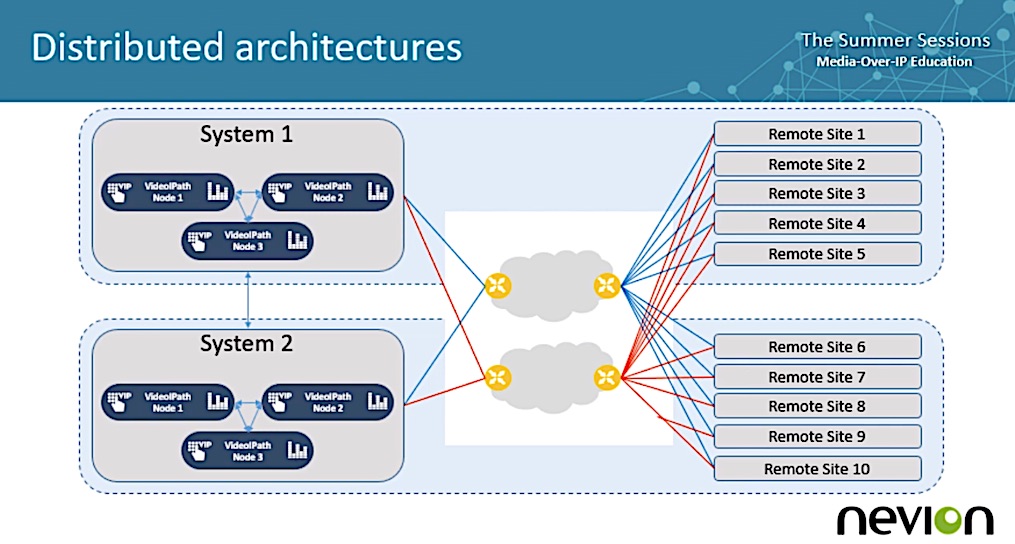

Fully engaging with the benefits of IP drives demand for scale: people are freer to define the workflow that best suits the business without being confined to one facility. Part of the point of this whole project is to centralise all the equipment in two shared facilities, with everyone working remotely. This isn’t remote production of an individual show; this is remote production of whole buildings.

SMPTE ST 2110, famously, sends each essence separately, so where a 1024×1024 SDI router might have carried 70% of the media between two locations, we’re now seeing tens of thousands of streams. In fact, the project as a whole manages in the order of 100,000 connections.
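To see how separate essences multiply the stream count, here is a back-of-the-envelope sketch. The per-signal breakdown is an assumption for illustration only: one video flow, eight separate audio flows and one ancillary flow per former SDI signal, each sent twice for ST 2022-7 redundancy. Real facilities will differ.

```python
# Illustrative arithmetic only: the per-signal essence counts are assumptions,
# not figures from the project described above.
VIDEO_FLOWS = 1
AUDIO_FLOWS = 8      # assumption: eight separate audio flows per signal
ANC_FLOWS = 1
REDUNDANT_LEGS = 2   # ST 2022-7: every flow is sent twice over diverse paths


def flows_per_signal(video=VIDEO_FLOWS, audio=AUDIO_FLOWS,
                     anc=ANC_FLOWS, legs=REDUNDANT_LEGS):
    """Number of multicast flows that stand in for one SDI signal."""
    return (video + audio + anc) * legs


def total_flows(sdi_signals):
    """Flows needed to replace `sdi_signals` SDI signals."""
    return sdi_signals * flows_per_signal()


if __name__ == "__main__":
    print(flows_per_signal())  # 20
    print(total_flows(1024))   # 20480
```

Under these assumptions, the equivalent of one 1024-port SDI router already needs over 20,000 flows, which is how a multi-site network reaches the tens of thousands.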

With so many connections, many of them linked, manual management isn’t practical; the only sensible way to manage them is through an abstraction layer. For instance, if you abstract the IP connections from the control, an engineer or operator can still use a panel with a button labelled ‘Playout Server O/P 3’ and route it with another labelled ‘Prod Mon 2’. Behind the scenes, that single take may require 18 connections across 5 separate switches.
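A minimal sketch of such an abstraction layer, assuming a hypothetical tag database that maps each logical source to its essence multicast groups and each logical destination to the switch hops that reach it. All addresses, switch names and ports are made up, and the example expands to 6 connections rather than the 18 across 5 switches mentioned above; the principle of one operator take fanning out into many network operations is the same.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    switch: str
    multicast_group: str
    egress_port: str


# Hypothetical tag database: logical source name -> essence multicast groups.
SOURCES = {
    "Playout Server O/P 3": ["239.10.3.1", "239.10.3.2", "239.10.3.3"],  # video, audio, anc
}

# Hypothetical path table: logical destination -> (switch, egress port) hops.
DESTINATIONS = {
    "Prod Mon 2": [("spine-1", "Eth1/7"), ("leaf-4", "Eth1/12")],
}


def route(source: str, destination: str) -> list[Connection]:
    """Expand one operator 'take' into every per-switch connection behind it."""
    groups = SOURCES[source]
    hops = DESTINATIONS[destination]
    return [Connection(sw, grp, port) for grp in groups for sw, port in hops]


if __name__ == "__main__":
    for conn in route("Playout Server O/P 3", "Prod Mon 2"):
        print(conn)
```

The operator only ever sees the two names; adding essences or hops changes the tables, not the panel.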

This orchestration is possible using SDN (Software Defined Networking), where routing decisions are taken away from the routers/switches themselves. The problem with leaving a switch to decide how to send some traffic is that it can only look at its own small part of the network and do its best. SDN introduces a controller, or orchestrator, which understands the network as a whole and can make much more efficient decisions. For instance, it can make absolutely sure that the two legs of ST 2022-7 traffic take diverse paths, and it can do bandwidth calculations to stop links from being oversubscribed.
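The two controller decisions above can be sketched with a toy controller that holds the whole topology and the spare capacity of every link. This is a hypothetical illustration, not Nevion's implementation: it finds a shortest path with enough spare bandwidth for the first ST 2022-7 leg, then a second path that avoids every intermediate node of the first, and reserves bandwidth only if both exist. The greedy "first path, then avoid it" approach can miss a valid pair in some topologies; a production controller would use a proper disjoint-path algorithm such as Suurballe's.

```python
from __future__ import annotations

from collections import deque


class Controller:
    """Toy SDN controller with a global view of link capacity (Gb/s)."""

    def __init__(self, links: dict[tuple[str, str], float]):
        # Undirected links keyed by the set of their two endpoints.
        self.capacity = {frozenset(k): v for k, v in links.items()}
        self.adj: dict[str, list[str]] = {}
        for a, b in links:
            self.adj.setdefault(a, []).append(b)
            self.adj.setdefault(b, []).append(a)

    def _path(self, src, dst, gbps, avoid=frozenset()):
        """BFS shortest path over links with spare capacity, skipping `avoid` nodes."""
        prev = {src: None}
        queue = deque([src])
        while queue:
            node = queue.popleft()
            if node == dst:
                break
            for nxt in self.adj.get(node, []):
                if nxt in prev or nxt in avoid:
                    continue
                if self.capacity[frozenset((node, nxt))] < gbps:
                    continue  # would oversubscribe this link
                prev[nxt] = node
                queue.append(nxt)
        if dst not in prev:
            return None
        path, node = [], dst
        while node is not None:
            path.append(node)
            node = prev[node]
        return path[::-1]

    def _reserve(self, path, gbps):
        for a, b in zip(path, path[1:]):
            self.capacity[frozenset((a, b))] -= gbps

    def route_2022_7(self, src, dst, gbps):
        """Return (red, blue) paths sharing no intermediate node, or None."""
        red = self._path(src, dst, gbps)
        if red is None:
            return None
        blue = self._path(src, dst, gbps, avoid=frozenset(red[1:-1]))
        if blue is None or blue == red:
            return None  # refuse rather than send both legs through shared kit
        self._reserve(red, gbps)
        self._reserve(blue, gbps)
        return red, blue


if __name__ == "__main__":
    # Hypothetical dual-homed endpoints on a two-spine fabric.
    links = {
        ("src", "leaf1"): 25, ("src", "leaf2"): 25,
        ("leaf1", "spine1"): 100, ("leaf1", "spine2"): 100,
        ("leaf2", "spine1"): 100, ("leaf2", "spine2"): 100,
        ("spine1", "leaf3"): 100, ("spine2", "leaf3"): 100,
        ("spine1", "leaf4"): 100, ("spine2", "leaf4"): 100,
        ("leaf3", "dst"): 25, ("leaf4", "dst"): 25,
    }
    ctl = Controller(links)
    print(ctl.route_2022_7("src", "dst", 10))
```

Because the controller sees every link, the third 10 Gb/s request in this topology is refused up front instead of overloading the 25 Gb/s endpoint links.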

Whilst the network is, indeed, based on SMPTE ST 2110, one of the key enablers is JPEG XS for international links. JPEG XS provides a similar compression level to JPEG 2000 but with much less latency: the encode itself takes less than 1 ms, compared with 60 ms for JPEG 2000. Whilst 60 ms may seem small, when a video signal has to move 4 or even 10 times as part of a production workflow, it soon adds up to a latency that humans can’t work with. JPEG XS promises to make such international production feel responsive and natural. What made this possible was ST 2110-22, which for the first time extended SMPTE ST 2110 to carry compressed video.
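The accumulation argument is simple arithmetic. This sketch counts only the per-hop encode latency figures quoted above (network delay and decode are ignored), treating JPEG XS's "less than 1 ms" as an upper bound of 1 ms.

```python
# Per-hop encode latency in milliseconds, as quoted above. JPEG XS is quoted
# as "less than 1 ms", so 1.0 is used as an upper bound.
CODEC_LATENCY_MS = {"JPEG 2000": 60.0, "JPEG XS": 1.0}


def cumulative_latency_ms(codec: str, hops: int) -> float:
    """Encode latency alone, summed over `hops` passes through the codec."""
    return CODEC_LATENCY_MS[codec] * hops


if __name__ == "__main__":
    for hops in (4, 10):
        for codec in CODEC_LATENCY_MS:
            print(f"{codec:9} x{hops:>2}: {cumulative_latency_ms(codec, hops):6.1f} ms")
```

At 4 hops JPEG 2000 already contributes 240 ms and at 10 hops 600 ms, while JPEG XS stays at or below 10 ms even after 10 hops.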

Andy finishes his overview of this uniquely large case study by talking about conversion between audio types, operating SDN with IGMP multicast islands, and NMOS control. In fact, NMOS is his answer to the final question, which asks what the biggest challenge is in putting this type of project together. Clearly, in a project of this magnitude there are challenges around every corner, but problems due to quantity can be measured and managed. Andy says NMOS adoption among manufacturers still needs to be pushed higher, and he lays down a challenge to AMWA to develop NMOS further so that it describes more aspects of the equipment; to date, there are not enough data points.


Speakers

Andy Rayner, Chief Technologist, Nevion