Once you’ve implemented SMPTE ST 2110’s suite of standards on your network, you’ve still got all your work ahead of you in order to implement large-scale workflows. How are you going to discover new devices? How will you make or change connections between devices? How will you associate audio with the video? Creating a functioning system requires a whole ecosystem of control protocols and information exchange, which is exactly what AMWA, the Advanced Media Workflow Association, has been working on for many years now.

Jed Deame from Nextera introduces the main specifications that have been developed to work hand-in-hand with uncompressed workflows. All prefixed with IS-, which stands for ‘Interface Specification’, they are IS-04, IS-05, IS-08, IS-09 and IS-10. Between them they allow you to discover new devices, create connections between them, manage the association of audio with video and manage system-wide information. Jed goes through each of these in turn. The only relevant ones which are skipped are IS-06, which allows devices to communicate northbound to an SDN controller, and IS-07, which manages GPI and tally information.

Jed sets the scene by describing an example ST 2110 setup with devices able to join a network, register their presence and be quickly involved in routing events. He then looks at the first specification in today’s talk, NMOS IS-04. IS-04’s job is to provide an API for nodes (cameras, monitors etc.) to use when they start up to talk to a central registry and lodge some details for further communication. The registry holds a unique ID (a UUID) for every resource, which covers nodes, devices, sources, flows, senders and receivers. IS-04 also provides a query API for controllers (for instance a control panel).
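As a rough illustration of that start-up exchange, the sketch below builds the requests a node might send to a registry and the URL a controller might query. The registry address, the API version and the trimmed-down node body are assumptions made for illustration; a real node sends many more fields and would normally find the registry through DNS-SD rather than a fixed address.

```python
import uuid
from typing import Optional, Tuple

# Hypothetical registry address and API version, chosen for illustration only.
REGISTRY_BASE = "http://registry.example.local"
API_VERSION = "v1.3"


def build_node_registration(label: str, node_id: Optional[str] = None) -> Tuple[str, dict]:
    """Return (url, body) for a POST that lodges a node with the registry."""
    node_id = node_id or str(uuid.uuid4())  # every resource is identified by a UUID
    url = f"{REGISTRY_BASE}/x-nmos/registration/{API_VERSION}/resource"
    body = {
        "type": "node",
        # Deliberately trimmed: a real node resource carries many more fields.
        "data": {"id": node_id, "label": label},
    }
    return url, body


def heartbeat_url(node_id: str) -> str:
    """URL a node POSTs to periodically so the registry knows it is still alive."""
    return f"{REGISTRY_BASE}/x-nmos/registration/{API_VERSION}/health/nodes/{node_id}"


def query_url(resource_type: str) -> str:
    """URL a controller would GET to list resources, e.g. 'senders' or 'receivers'."""
    return f"{REGISTRY_BASE}/x-nmos/query/{API_VERSION}/{resource_type}"


if __name__ == "__main__":
    url, body = build_node_registration("Camera 1")
    print("POST", url, body)
    print("POST", heartbeat_url(body["data"]["id"]))
    print("GET ", query_url("senders"))
```

The code only constructs URLs and bodies, so it can be read or run without a registry on the network.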

While IS-04 started off very basic, as it’s moved to version 1.4 it’s added HTTPS transport, paged queries and support for connection management with IS-05 and IS-06. IS-04 is a foundational part of the system, allowing each element to have an identity, tracking when entities are changed and updating clients accordingly.

IS-05 manages connections between senders and receivers allowing changes to be immediate or set for the future. It allows, for example, querying of a sender to get the multicast settings and provides for sending that to a receiver. Naturally, when a change has been made, it will update the IS-04 registry.
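To make the immediate-versus-scheduled distinction concrete, here is a minimal sketch of the body a controller might PATCH to a receiver’s staged endpoint. The helper names, the API version in the URL and the example values are assumptions; the SDP text would come from the sender’s transport file, and the scheduled time is assumed to be a TAI timestamp written as `seconds:nanoseconds`.

```python
from typing import Optional


def sender_transportfile_url(base: str, sender_id: str) -> str:
    """URL a controller would GET to fetch the sender's SDP (multicast settings)."""
    return f"{base}/x-nmos/connection/v1.1/single/senders/{sender_id}/transportfile"


def receiver_staged_url(base: str, receiver_id: str) -> str:
    """URL a controller would PATCH to stage a connection on the receiver."""
    return f"{base}/x-nmos/connection/v1.1/single/receivers/{receiver_id}/staged"


def build_staged_patch(sender_id: str, sdp: str, requested_time: Optional[str] = None) -> dict:
    """Build the PATCH body; activate now unless a requested_time is given."""
    if requested_time is None:
        activation = {"mode": "activate_immediate"}
    else:
        # Assumed format: TAI timestamp as "<seconds>:<nanoseconds>".
        activation = {"mode": "activate_scheduled_absolute", "requested_time": requested_time}
    return {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": activation,
        "transport_file": {"data": sdp, "type": "application/sdp"},
    }


if __name__ == "__main__":
    sdp = "v=0\r\n..."  # placeholder, not a complete SDP file
    print(build_staged_patch("sender-uuid", sdp))
    print(build_staged_patch("sender-uuid", sdp, requested_time="1700000000:0"))
```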

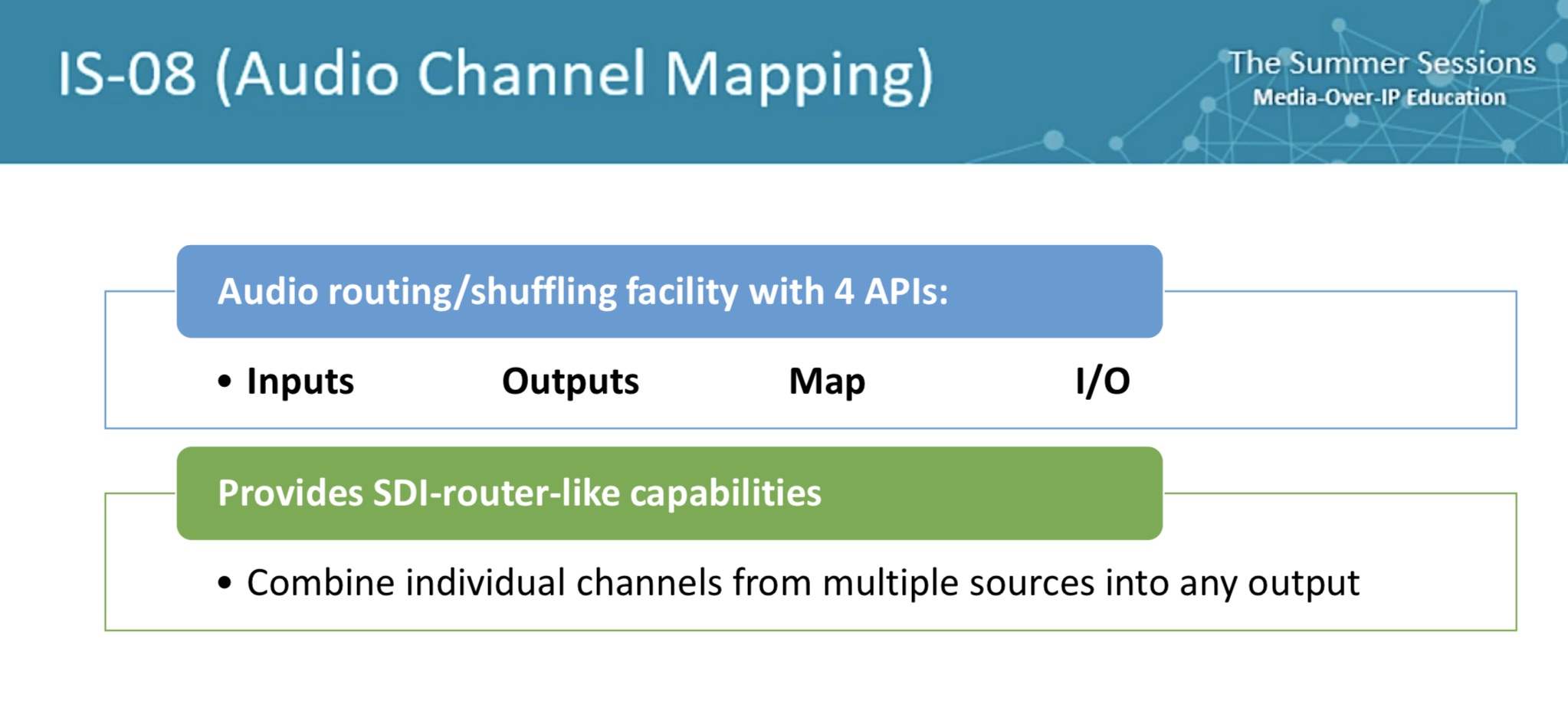

IS-08 helps manage the complexity which is wrought by allowing all audio to flow separately from the video. Whilst this is a boon for flexibility and reduces much unnecessary processing (in extracting and recombining audio), it also adds the burden of tracking which audio should be used where. IS-08 is the answer from AMWA on how to manage this complexity. This can be used in association with BCP-002 (Best Current Practice), which allows essences in the IS-04 registry to be tagged to show how they were grouped when they were created.
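The bookkeeping involved can be pictured as a table saying which input channel feeds each output channel. The sketch below is an in-memory model of that idea, not an implementation of the IS-08 API: the class and method names are hypothetical, and the dictionary produced by `as_action` is only loosely modelled on the shape of an IS-08 activation request as I understand it.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# (input id, input channel index), or None when the output channel is unrouted
Route = Optional[Tuple[str, int]]


@dataclass
class ChannelMap:
    """Hypothetical model: output id -> output channel index -> source channel."""
    routes: Dict[str, Dict[int, Route]] = field(default_factory=dict)

    def route(self, output_id: str, out_ch: int, input_id: str, in_ch: int) -> None:
        """Feed one output channel from one input channel."""
        self.routes.setdefault(output_id, {})[out_ch] = (input_id, in_ch)

    def unroute(self, output_id: str, out_ch: int) -> None:
        """Leave an output channel with no source."""
        self.routes.setdefault(output_id, {})[out_ch] = None

    def as_action(self) -> dict:
        """Render the map as nested dicts keyed by output id and channel index."""
        action: dict = {}
        for output_id, channels in self.routes.items():
            action[output_id] = {
                str(ch): {
                    "input": src[0] if src else None,
                    "channel_index": src[1] if src else None,
                }
                for ch, src in sorted(channels.items())
            }
        return action


if __name__ == "__main__":
    m = ChannelMap()
    m.route("out1", 0, "in1", 1)  # swap left and right of a stereo pair
    m.route("out1", 1, "in1", 0)
    print(m.as_action())
```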

Jed looks next at IS-09 which he explains provides a way for global facts of the system to be distributed to all devices. Examples of this would be whether HTTPS is in use in the facility, syslog servers, the registration server address and NMOS versions supported.

Security is the topic of the last part of the talk. As we’ve seen, IS-04 already allows for encrypted API traffic, and this is mandated in the EBU’s TR-1001. However, BCP-003 and IS-10 have also been created to improve this further. IS-10 deals with authorisation to make sure that only intended controllers, senders and receivers are allowed access to the system. And it’s the difference between encryption (confidentiality) and authorisation which Jed looks at next.
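The difference can be shown in a few lines: encryption protects a request in transit, while authorisation decides whether the caller may make that request at all. The sketch below assumes an access token has already been received and its signature verified, and checks a simplified set of claims. The claim layout (an `x-nmos-<api>` entry holding `read` and `write` path patterns) is an assumption for illustration, and the function name is hypothetical.

```python
from fnmatch import fnmatchcase

READ_METHODS = {"GET", "HEAD", "OPTIONS"}


def is_allowed(claims: dict, api: str, method: str, path: str) -> bool:
    """Return True if the token's claims permit this method on this API path.

    Assumes the token signature and expiry were already checked elsewhere.
    """
    access = "read" if method.upper() in READ_METHODS else "write"
    patterns = claims.get(f"x-nmos-{api}", {}).get(access, [])
    # Shell-style wildcards; note that '*' also matches '/' here.
    return any(fnmatchcase(path, pattern) for pattern in patterns)


if __name__ == "__main__":
    # Example claims: may read everything, but only change receivers.
    claims = {"x-nmos-connection": {"read": ["*"], "write": ["single/receivers/*"]}}
    print(is_allowed(claims, "connection", "PATCH", "single/receivers/abc/staged"))
    print(is_allowed(claims, "connection", "PATCH", "single/senders/abc/staged"))
```

A controller holding these example claims could read any connection resource over the encrypted channel but would be refused if it tried to change a sender.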

It’s no accident that the security implementations in AMWA specifications share a lot with widely deployed security practices already in use elsewhere. In fact, in security, if you can avoid developing your own system, you should. In use here is the same PKI system and TLS encryption we use on every secure website. Jed steps through how this works and the importance of the cipher suite which lives under TLS.
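Because this is ordinary web security, a client can lean on a standard TLS library rather than anything NMOS-specific. The following sketch uses Python’s `ssl` module to build a client context that verifies the server certificate against a CA, refuses anything older than TLS 1.2 and narrows the TLS 1.2 cipher suites. The TLS 1.2 floor and the `ECDHE+AESGCM` cipher string are assumptions chosen for illustration, not values taken from the talk.

```python
import ssl
from typing import List, Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a verifying TLS client context with a restricted cipher list."""
    # Loads the system trust store when ca_file is None; a facility would
    # typically pass the path to its own CA certificate instead.
    ctx = ssl.create_default_context(cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor
    # OpenSSL cipher string; affects TLS 1.2 suites, TLS 1.3 suites are separate.
    ctx.set_ciphers("ECDHE+AESGCM")
    return ctx


def cipher_names(ctx: ssl.SSLContext) -> List[str]:
    """List the cipher suites the context is willing to negotiate."""
    return [c["name"] for c in ctx.get_ciphers()]


if __name__ == "__main__":
    ctx = make_client_context()
    print("verifies certificates:", ctx.verify_mode == ssl.CERT_REQUIRED)
    print("checks hostname:", ctx.check_hostname)
    for name in cipher_names(ctx):
        print(name)
```

Printing the cipher list is a quick way to see what a given machine will actually offer, since the result depends on the local OpenSSL build.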

The final part of this talk is a case study where a customer required encrypted control, an authorisation server, 4K video over 1GbE, essence encryption, a unified routing interface and KVM capabilities. Jed explains how this can all be achieved with the existing specifications or extensions on top of them. Extending the API’s encryption methods to the essences allowed them to meet the encryption requirements, and adding some other calls on top of the existing NMOS APIs provided a unified routing interface which allowed setting modes on equipment.

Watch now!

For more information, download these slides from a SMPTE UK Section meeting on NMOS

Speakers

Jed Deame, CEO, Nextera Video