MPEG’s VVC is the successor to HEVC (H.265). Whilst other codecs such as EVC and LCEVC are also being finalised, this talk looks at how VVC builds on HEVC and also turns its hand to screen content and VR, making it a more versatile codec than HEVC and better suited to the world’s changing needs. For an overview of these emerging codecs, this interview covers them all.

VVC is a joint project between ITU-T and MPEG (AKA ISO/IEC). Its aim is a 50% reduction in bitrate for the same picture quality, with the emphasis on higher resolutions, HDR and 10-bit video. At the same time, it acknowledges that optimising codecs for natural video is no longer the core requirement for a lot of people. Its versatility comes from being able to handle screen content coding, independent sub-picture encoding and scalable encoding, among others.

Gary Sullivan from Microsoft Technology & Research talks us through what all this means. He starts by outlining the case for a new codec, particularly the reach for another 50% bitrate saving which may come at further computational cost. Gary points out that, as video use continues to increase, anything that can be done to significantly reduce bitrates will either drive down costs or allow people to use video in better ways.

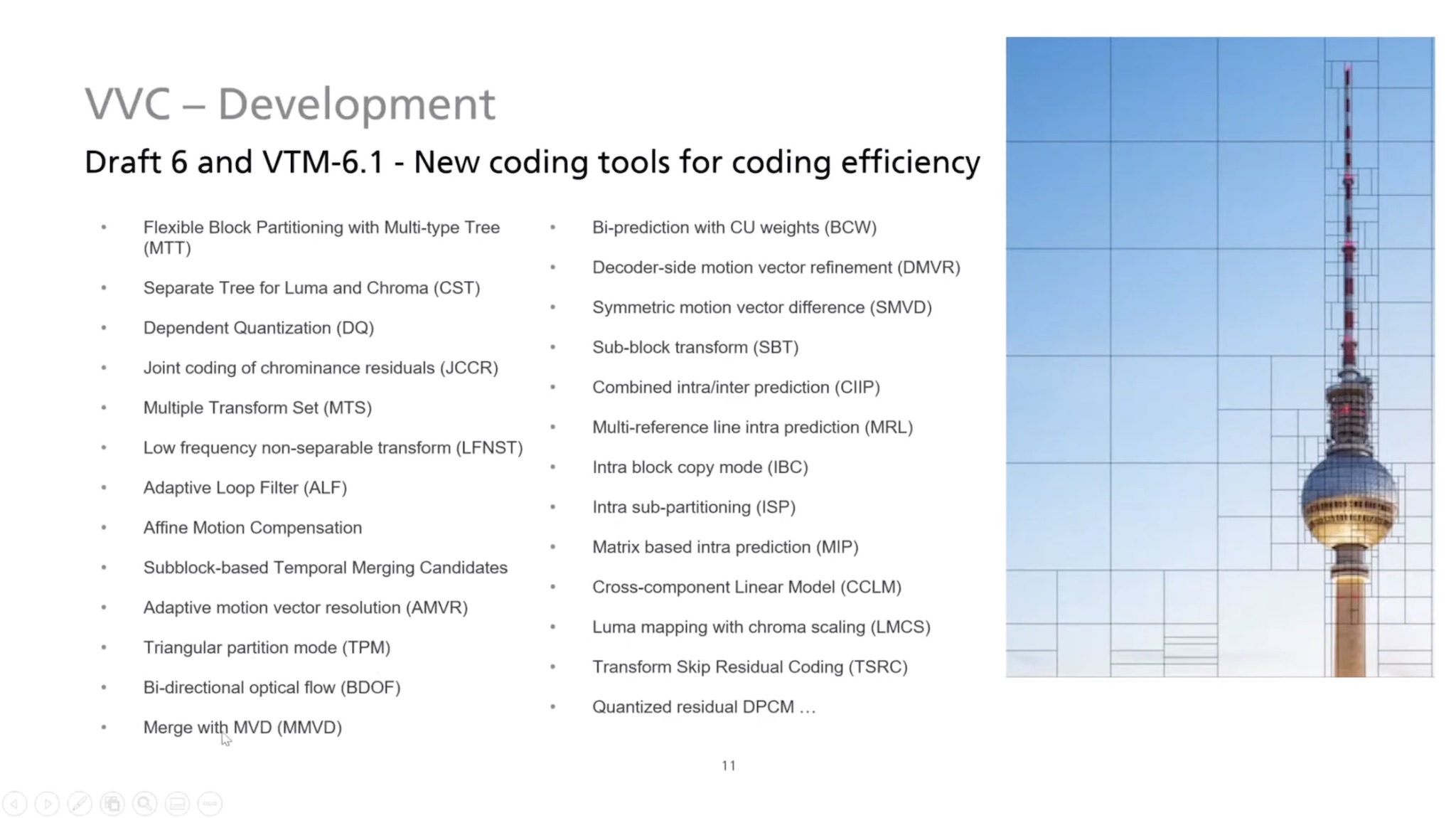

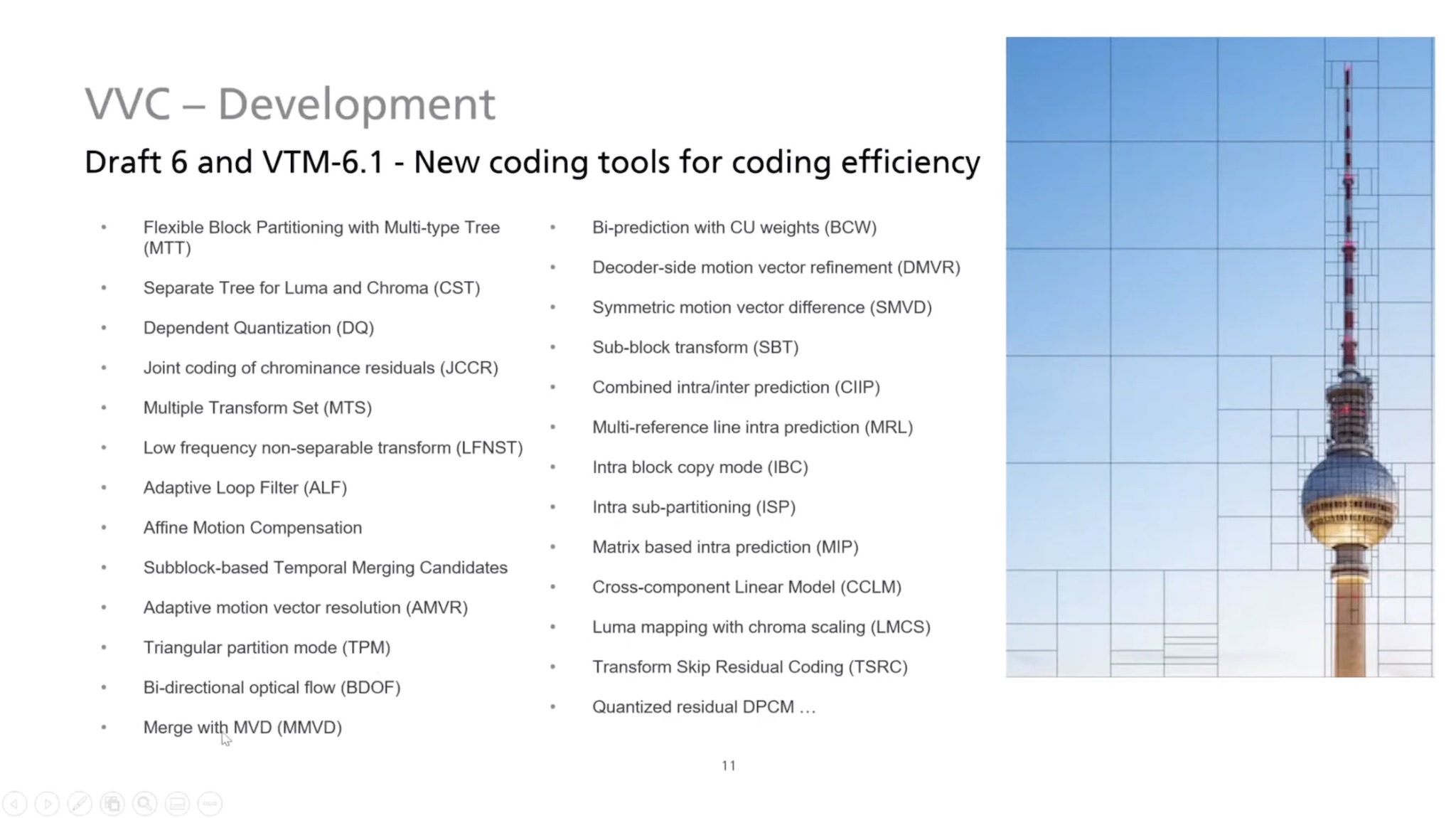

Any codec is a set of tools all working together to create the final product. Some tools are not always needed, say if you are running on a lower-power system, allowing the codec to be tuned for the situation. Gary puts up a list of some of the tools in VVC, many of which are an evolution of the same tool in HEVC, and highlights a few to give an insight into the improvements under the hood.

Gary’s pick of the big hitters in the tool-set are: the Adaptive Loop Filter, which reduces artefacts and prediction errors; affine motion compensation, which provides better motion compensation for non-translational motion such as rotation and zoom; triangle partitioning mode, which is a high-computation improvement in inter prediction; bi-directional optical flow (BIO) for motion prediction; and intra-block copy, which is useful for screen content where an identical block is found elsewhere in the same frame.
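To make the intra-block copy idea concrete, here is a minimal Python sketch. It is not how a VVC encoder actually searches: the exhaustive search, the sum-of-absolute-differences cost and the function names `sad` and `find_block_vector` are all assumptions made for illustration. It only shows the principle that a block can be predicted from an identical block already coded earlier in the same frame, signalled by a displacement (a "block vector").

```python
def sad(frame, ax, ay, bx, by, size):
    """Sum of absolute differences between two size x size blocks."""
    return sum(
        abs(frame[ay + r][ax + c] - frame[by + r][bx + c])
        for r in range(size)
        for c in range(size)
    )


def find_block_vector(frame, x, y, size):
    """Search the already-coded area of the same frame for the best match.

    Returns ((dx, dy), cost), or None if no coded area is available.
    Simplifying assumption: blocks are coded in raster order, so a candidate
    is usable if it lies fully above the current block, or fully to its left
    without starting below it.
    """
    height, width = len(frame), len(frame[0])
    best = None
    for cy in range(height - size + 1):
        for cx in range(width - size + 1):
            fully_above = cy + size <= y
            fully_left = cx + size <= x and cy <= y
            if not (fully_above or fully_left):
                continue  # candidate overlaps samples not yet coded
            cost = sad(frame, x, y, cx, cy, size)
            if best is None or cost < best[1]:
                best = ((cx - x, cy - y), cost)
    return best


# Toy "screen content": the same 2x2 glyph appears twice in a flat frame.
frame = [
    [0, 9, 1, 0, 0, 0, 0, 0],
    [0, 1, 9, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 9, 1, 0],
    [0, 0, 0, 0, 0, 1, 9, 0],
]

# Predict the second glyph (top-left at x=5, y=2) from the first one.
print(find_block_vector(frame, 5, 2, 2))  # ((-4, -2), 0)
```

A cost of zero means the block is an exact copy, so in this toy case only the block vector would need to be sent. Repeated text and icons make such exact matches far more common in screen content than in natural video.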

Gary highlights SCC, Screen Content Coding, which was in HEVC but not in the base profile. This has changed for VVC, so all VVC implementations will have SCC whereas very few HEVC implementations do. Reference Picture Resampling (RPR) allows the resolution to change from picture to picture, so reference pictures can be stored at a different resolution from the current picture. Independent sub-pictures allow parts of the video frame to be re-arranged, or only one region to be decoded. This works well for VR and video conferencing, and allows the creation of composite videos without intermediate decoding.

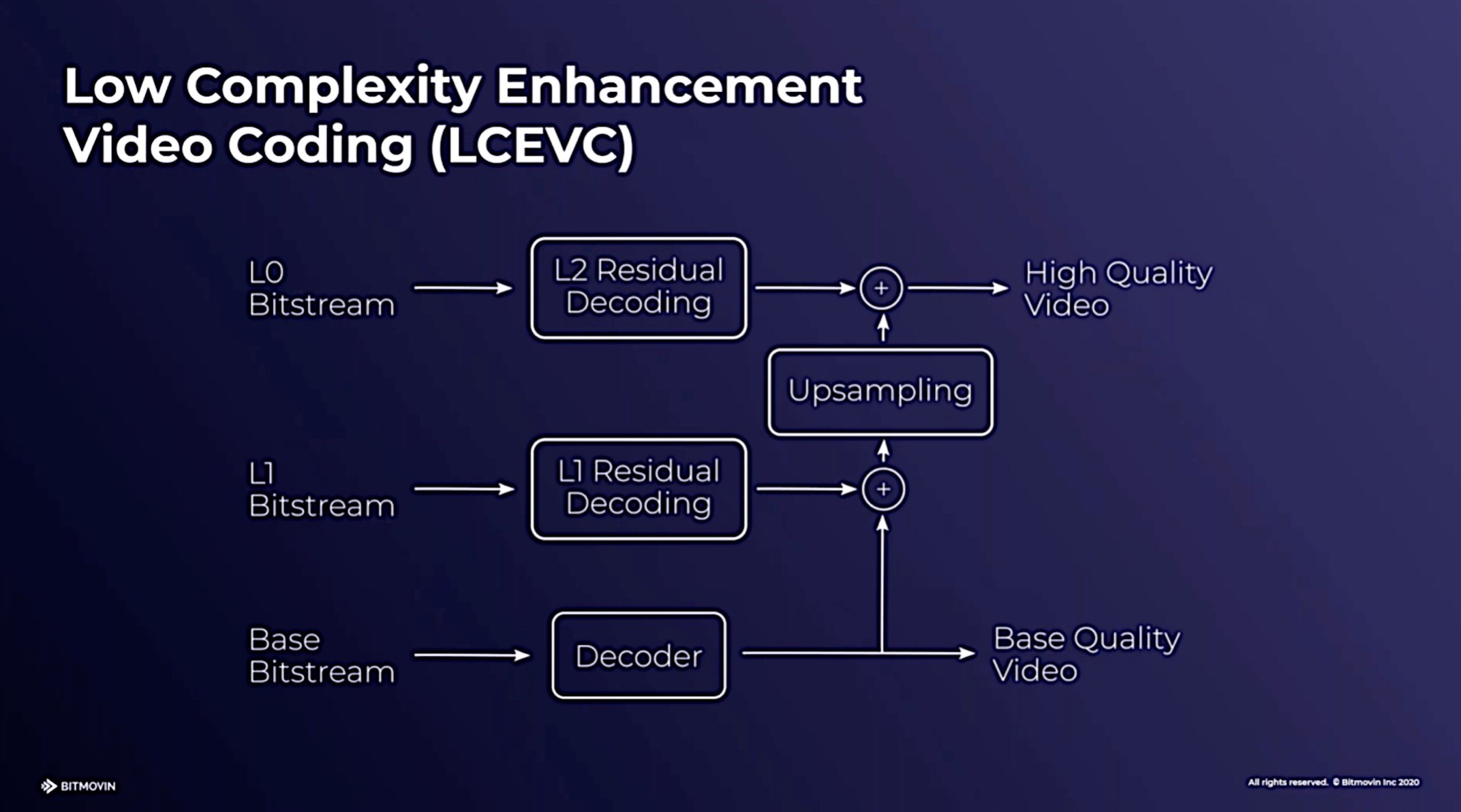

As usual, doing more thinking about how to compress a picture brings further computational demands. MPEG’s LCEVC is the standards body’s way of fighting against this, as notable bitrate improvements are possible even for low-power devices. With VVC, however, versatility is the aim. Decoders see a 60% increase in decode complexity. MPEG specifications are all about the decoder, which allows a lot of ongoing innovation in encoding techniques, but current encoder examples are about 8 or 9 times slower. Performance is better for screen content and at higher resolutions. Whilst the coding part of VVC is mature, versatility is still being worked on, but the aim is to publish within about 2 months.

The video finishes with a Q&A that covers implementing DASH in a low-latency video workflow and how CMAF will be specified to use VVC. It also covers live workflows, which Gary explains always come after the initial file-based work and are best understood after the first attempts at encoder implementations, noting that hardware lags by 2 years. He goes on to explain that chipmakers need to see the demand. At the moment, there is a lot of focus from implementors on AV1, not to mention EVC, so the question is how much demand can be generated.

This talk is based on a talk from Benjamin Bross originally given to an ITU workshop (PDF), then presented at Mile High Video by Benjamin, and was updated by Gary for this conversation with the Seattle Video Tech community.

Bitmovin has an article highlighting many of the improvements in VVC, written by Christian Feldmann, who has given many talks on both AV1 and VVC.

Watch now!

Speakers

Gary Sullivan, Microsoft Technology & Research